Azure AI Foundry provides access to Claude, GPT-4, and Gemini models in a single platform. Multi-model orchestration lets you play to each model’s strengths, fall back to a second model when the first one fails, and balance cost against performance by routing each request to the most appropriate model.

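This guide covers patterns for building production systems that orchestrate multiple frontier models: task-based routing with fallback (in JavaScript and C#), consensus across models, and Azure AI Foundry's Model Router.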

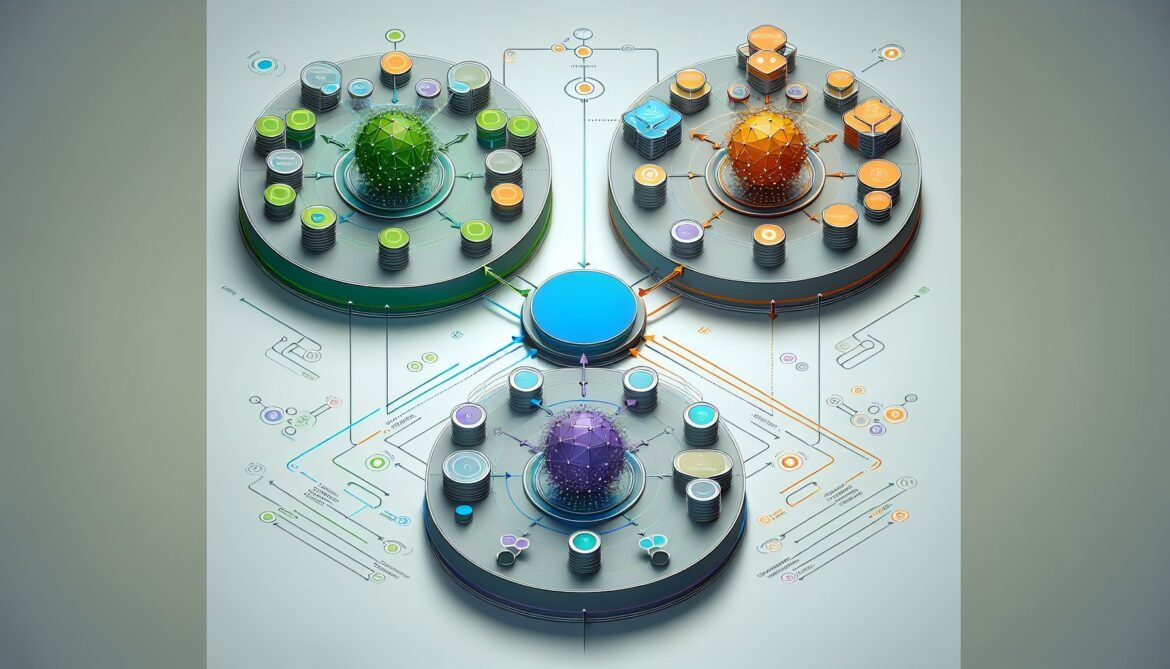

Multi-Model Architecture

flowchart TB
    REQUEST[User Request]
    ROUTER{Intelligent Router}

    subgraph Models[Model Tier]
        CLAUDE[Claude Sonnet 4.5]
        GPT[GPT-4o]
        GEMINI[Gemini Pro]
        HAIKU[Claude Haiku 4.5]
    end

    AGGREGATE[Response Aggregator]
    VALIDATE[Validation Layer]
    RESPONSE[Final Response]

    REQUEST --> ROUTER
    ROUTER -->|Coding/Agents| CLAUDE
    ROUTER -->|General/Creative| GPT
    ROUTER -->|Multimodal| GEMINI
    ROUTER -->|High Volume| HAIKU
    CLAUDE & GPT & GEMINI & HAIKU --> AGGREGATE
    AGGREGATE --> VALIDATE
    VALIDATE --> RESPONSE

    style REQUEST fill:#e3f2fd
    style ROUTER fill:#fff3e0
    style Models fill:#f3e5f5
    style RESPONSE fill:#e8f5e9

Implementation: Multi-Model Router

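const { AnthropicFoundry } = require("@anthropic-ai/foundry-sdk");
const { AzureOpenAI } = require("openai");

class MultiModelOrchestrator {
  constructor(config) {
    this.claudeClient = new AnthropicFoundry({
      foundryResource: config.foundryResource
    });

    this.gptClient = new AzureOpenAI({
      endpoint: config.openaiEndpoint,
      apiKey: config.openaiKey,
      apiVersion: "2024-02-15-preview"
    });

    this.routingRules = this.initializeRoutingRules();
  }

  // Primary and fallback model for each task type
  initializeRoutingRules() {
    return {
      coding: { primary: "claude-sonnet-4-5", fallback: "gpt-4o" },
      creative: { primary: "gpt-4o", fallback: "claude-sonnet-4-5" },
      analysis: { primary: "claude-sonnet-4-5", fallback: "gpt-4o" },
      simple: { primary: "claude-haiku-4-5", fallback: "gpt-4o-mini" },
      // classifyTask() only inspects text and never returns "multimodal";
      // callers select this route explicitly with options.taskType
      multimodal: { primary: "gpt-4o", fallback: "claude-sonnet-4-5" }
    };
  }

  async processRequest(prompt, options = {}) {
    // An explicit task type overrides the keyword classifier
    const taskType = options.taskType || this.classifyTask(prompt);
    const routing = this.routingRules[taskType];
    if (!routing) {
      throw new Error(`Unknown task type: ${taskType}`);
    }

    try {
      return await this.executeWithModel(routing.primary, prompt, options);
    } catch (error) {
      console.warn(
        `Primary model ${routing.primary} failed, falling back to ${routing.fallback}`,
        error
      );
      return await this.executeWithModel(routing.fallback, prompt, options);
    }
  }

  // Dispatch to the client that serves the given model
  async executeWithModel(model, prompt, options) {
    if (model.startsWith("claude")) {
      return await this.executeClaude(model, prompt, options);
    } else {
      return await this.executeGPT(model, prompt, options);
    }
  }

  async executeClaude(model, prompt, options) {
    const maxTokens = options.maxTokens || 4000;
    const thinkingBudget = options.thinkingBudget || 10000;

    const response = await this.claudeClient.messages.create({
      model: model,
      // With extended thinking, max_tokens must exceed budget_tokens, so the
      // thinking budget is added on top of the visible-output allowance
      max_tokens: options.enableThinking ? maxTokens + thinkingBudget : maxTokens,
      thinking: options.enableThinking
        ? { type: "enabled", budget_tokens: thinkingBudget }
        : undefined,
      messages: [{ role: "user", content: prompt }]
    });

    // With thinking enabled, thinking blocks come before the text block,
    // so look the text block up by type instead of taking content[0]
    const textBlock = response.content.find((block) => block.type === "text");

    return {
      content: textBlock ? textBlock.text : "",
      model: model,
      usage: response.usage
    };
  }

  async executeGPT(model, prompt, options) {
    const response = await this.gptClient.chat.completions.create({
      // Azure OpenAI treats `model` as the deployment name
      model: model,
      max_tokens: options.maxTokens || 4000,
      messages: [{ role: "user", content: prompt }]
    });

    return {
      content: response.choices[0].message.content,
      model: model,
      usage: response.usage
    };
  }

  // Keyword heuristic; the first matching rule wins
  classifyTask(prompt) {
    const lowerPrompt = prompt.toLowerCase();

    if (/code|function|implement|debug|algorithm/.test(lowerPrompt)) {
      return "coding";
    }
    if (/story|creative|poem|narrative/.test(lowerPrompt)) {
      return "creative";
    }
    if (/analyze|evaluate|assess|compare/.test(lowerPrompt)) {
      return "analysis";
    }
    if (prompt.length < 100) {
      return "simple";
    }
    return "analysis";
  }
}

// Usage (wrapped in a function because CommonJS has no top-level await)
async function main() {
  // Environment variable names are placeholders; use your own configuration source
  const config = {
    foundryResource: process.env.FOUNDRY_RESOURCE,
    openaiEndpoint: process.env.AZURE_OPENAI_ENDPOINT,
    openaiKey: process.env.AZURE_OPENAI_API_KEY
  };

  const orchestrator = new MultiModelOrchestrator(config);

  // Budget kept moderate because this example uses non-streaming requests
  const result = await orchestrator.processRequest(
    "Implement a binary search tree in Python",
    { enableThinking: true, thinkingBudget: 8000 }
  );

  console.log(`Response from ${result.model}:`, result.content);
}

main().catch(console.error);

C# Multi-Model Implementation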

using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;
using System.Threading.Tasks;
using Azure.AI.Foundry;
using Azure.AI.OpenAI;
using Azure.Identity;

public class MultiModelOrchestrator
{
    private readonly AnthropicFoundryClient _claudeClient;
    private readonly OpenAIClient _gptClient;
    private readonly Dictionary<TaskType, ModelRoute> _routes;

    public MultiModelOrchestrator(string foundryResource, string openaiEndpoint)
    {
        var credential = new DefaultAzureCredential();

        _claudeClient = new AnthropicFoundryClient(foundryResource, credential);
        _gptClient = new OpenAIClient(new Uri(openaiEndpoint), credential);
        _routes = InitializeRoutes();
    }

    // Primary and fallback model for each task type
    private Dictionary<TaskType, ModelRoute> InitializeRoutes()
    {
        return new Dictionary<TaskType, ModelRoute>
        {
            [TaskType.Coding] = new ModelRoute("claude-sonnet-4-5", "gpt-4o"),
            [TaskType.Creative] = new ModelRoute("gpt-4o", "claude-sonnet-4-5"),
            [TaskType.Analysis] = new ModelRoute("claude-sonnet-4-5", "gpt-4o"),
            [TaskType.Simple] = new ModelRoute("claude-haiku-4-5", "gpt-4o-mini"),
            // ClassifyTask only inspects text and never returns Multimodal;
            // callers select this route explicitly with RequestOptions.TaskType
            [TaskType.Multimodal] = new ModelRoute("gpt-4o", "claude-sonnet-4-5")
        };
    }

    public async Task<ModelResponse> ProcessRequestAsync(
        string prompt,
        RequestOptions options = null)
    {
        options ??= new RequestOptions();

        // An explicit task type overrides the keyword classifier
        var taskType = options.TaskType ?? ClassifyTask(prompt);
        var route = _routes[taskType];

        try
        {
            return await ExecuteWithModelAsync(route.Primary, prompt, options);
        }
        catch (Exception ex)
        {
            Console.WriteLine($"Primary model failed: {ex.Message}");
            return await ExecuteWithModelAsync(route.Fallback, prompt, options);
        }
    }

    // Dispatch to the client that serves the given model
    private async Task<ModelResponse> ExecuteWithModelAsync(
        string model,
        string prompt,
        RequestOptions options)
    {
        if (model.StartsWith("claude"))
        {
            return await ExecuteClaudeAsync(model, prompt, options);
        }
        else
        {
            return await ExecuteGPTAsync(model, prompt, options);
        }
    }

    private async Task<ModelResponse> ExecuteClaudeAsync(
        string model,
        string prompt,
        RequestOptions options)
    {
        // With extended thinking, the token limit must exceed the thinking
        // budget, so the budget is added on top of the visible-output allowance
        var maxTokens = options.EnableThinking
            ? options.MaxTokens + options.ThinkingBudget
            : options.MaxTokens;

        var response = await _claudeClient.CreateMessageAsync(
            model: model,
            maxTokens: maxTokens,
            thinking: options.EnableThinking
                ? new ThinkingConfig
                {
                    Type = ThinkingType.Enabled,
                    BudgetTokens = options.ThinkingBudget
                }
                : null,
            messages: new[]
            {
                new Message
                {
                    Role = "user",
                    Content = prompt
                }
            });

        return new ModelResponse
        {
            // Note: with thinking enabled, the first content block may be a
            // thinking block rather than the text block
            Content = response.Content[0].Text,
            Model = model,
            Usage = new UsageInfo
            {
                InputTokens = response.Usage.InputTokens,
                OutputTokens = response.Usage.OutputTokens,
                ThinkingTokens = response.Usage.ThinkingTokens ?? 0
            }
        };
    }

    private async Task<ModelResponse> ExecuteGPTAsync(
        string model,
        string prompt,
        RequestOptions options)
    {
        var chatOptions = new ChatCompletionsOptions
        {
            DeploymentName = model,
            MaxTokens = options.MaxTokens,
            Messages =
            {
                new ChatRequestUserMessage(prompt)
            }
        };

        var response = await _gptClient.GetChatCompletionsAsync(chatOptions);
        var choice = response.Value.Choices[0];

        return new ModelResponse
        {
            Content = choice.Message.Content,
            Model = model,
            Usage = new UsageInfo
            {
                InputTokens = response.Value.Usage.PromptTokens,
                OutputTokens = response.Value.Usage.CompletionTokens
            }
        };
    }

    // Keyword heuristic; the first matching rule wins
    private TaskType ClassifyTask(string prompt)
    {
        var lower = prompt.ToLowerInvariant();

        if (Regex.IsMatch(lower, @"code|function|implement|debug|algorithm"))
            return TaskType.Coding;

        if (Regex.IsMatch(lower, @"story|creative|poem|narrative"))
            return TaskType.Creative;

        if (Regex.IsMatch(lower, @"analyze|evaluate|assess|compare"))
            return TaskType.Analysis;

        if (prompt.Length < 100)
            return TaskType.Simple;

        return TaskType.Analysis;
    }
}

public enum TaskType
{
    Coding,
    Creative,
    Analysis,
    Simple,
    Multimodal
}

public record ModelRoute(string Primary, string Fallback);

public record RequestOptions
{
    public int MaxTokens { get; init; } = 4000;
    public bool EnableThinking { get; init; }
    public int ThinkingBudget { get; init; } = 10000;

    // Optional override for the keyword classifier
    public TaskType? TaskType { get; init; }
}

public record ModelResponse
{
    public string Content { get; init; }
    public string Model { get; init; }
    public UsageInfo Usage { get; init; }
}

public record UsageInfo
{
    public int InputTokens { get; init; }
    public int OutputTokens { get; init; }
    public int ThinkingTokens { get; init; }
}

Advanced Patterns

Consensus-Based Responses

For critical decisions, query multiple models and aggregate responses for higher confidence.
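
The `get_consensus_response` example in this section relies on an `analyze_consensus` helper that the guide does not define. As one possible reading, the sketch below scores agreement as the mean pairwise lexical similarity between answers and returns the answer that is closest to all the others. The `Consensus` dataclass, the `_similarity` helper, and the choice of `difflib` are assumptions made for illustration, and the sketch assumes every response is a plain string. Lexical similarity is a crude proxy for agreement; a production system would more likely compare structured fields or embeddings.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from itertools import combinations


@dataclass
class Consensus:
    agreement: float       # mean pairwise similarity in [0, 1]
    agreed_response: str   # the response most similar to all the others


def _similarity(a: str, b: str) -> float:
    """Lexical similarity of two answers, ignoring case and extra whitespace."""
    norm_a = " ".join(a.lower().split())
    norm_b = " ".join(b.lower().split())
    return SequenceMatcher(None, norm_a, norm_b).ratio()


def analyze_consensus(responses: list[str]) -> Consensus:
    """Hypothetical helper: score how closely a set of model answers agree."""
    if not responses:
        raise ValueError("at least one response is required")
    if len(responses) == 1:
        return Consensus(agreement=1.0, agreed_response=responses[0])

    # Similarity score for every unordered pair of responses
    scores = {
        (i, j): _similarity(responses[i], responses[j])
        for i, j in combinations(range(len(responses)), 2)
    }
    agreement = sum(scores.values()) / len(scores)

    # Pick the response with the highest total similarity to the others
    def total_similarity(index: int) -> float:
        return sum(score for pair, score in scores.items() if index in pair)

    best = max(range(len(responses)), key=total_similarity)
    return Consensus(agreement=agreement, agreed_response=responses[best])
```

With this helper, identical answers give an agreement of 1.0, while answers that share no characters give 0.0 and fall below the 0.8 threshold used in this section.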

import asyncio

# query_model and analyze_consensus are assumed to be defined elsewhere
async def get_consensus_response(prompt, models=("claude-sonnet-4-5", "gpt-4o")):
    # Query all models concurrently
    responses = await asyncio.gather(*[
        query_model(model, prompt) for model in models
    ])

    # Analyze agreement
    consensus = analyze_consensus(responses)

    if consensus.agreement > 0.8:
        return consensus.agreed_response
    else:
        # Below the threshold, surface every answer for review
        return {
            "responses": responses,
            "note": "Models disagree. Review multiple perspectives."
        }

Model Router Integration

Azure AI Foundry's Model Router automatically selects the best model based on query complexity, cost, and performance requirements.
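
Assuming the router is deployed like any other chat model and reached through the OpenAI-compatible chat completions API, a call might look like the following sketch. The deployment name `model-router`, the `ask_model_router` helper, and the stand-in client are assumptions made for illustration. The sketch also assumes that the response's `model` field reports which underlying model handled the request, which is worth confirming against your own deployment.

```python
from types import SimpleNamespace


def ask_model_router(client, prompt: str, deployment: str = "model-router") -> dict:
    """Send one prompt to a router deployment and report which model answered.

    `client` is expected to expose the OpenAI-style `chat.completions.create`
    method (for example an `openai.AzureOpenAI` instance). The default
    deployment name is an assumption; use the name you gave your deployment.
    """
    response = client.chat.completions.create(
        model=deployment,
        messages=[{"role": "user", "content": prompt}],
    )
    return {
        "content": response.choices[0].message.content,
        # Assumed: the `model` field names the model the router selected
        "routed_to": response.model,
    }


# Stand-in client so the sketch runs without network access or credentials.
# It always answers "4" and pretends the router picked "gpt-4o-mini".
def _fake_create(model, messages):
    return SimpleNamespace(
        model="gpt-4o-mini",
        choices=[SimpleNamespace(message=SimpleNamespace(content="4"))],
    )


fake_client = SimpleNamespace(
    chat=SimpleNamespace(completions=SimpleNamespace(create=_fake_create))
)

result = ask_model_router(fake_client, "What is 2 + 2?")
print(result)
```

Logging `routed_to` alongside latency and token usage makes it possible to check whether the router's choices match the routing rules you would have written by hand.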

Best Practices

- Use Claude for coding and agentic workflows

- Use GPT-4o for creative writing and general tasks

- Implement fallback strategies for reliability

- Monitor model performance and costs

- Use cheaper models (Haiku, GPT-4o-mini) for simple tasks

- Consider consensus for critical decisions

- Leverage Model Router for automatic selection
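
To make the monitoring advice concrete, here is a minimal sketch of a per-model tracker for call counts, failures, token usage, latency, and estimated cost. The `UsageTracker` class and its method names are hypothetical, and the prices in `PLACEHOLDER_PRICES` are made-up numbers, not real rates; substitute the pricing that applies to your deployments.

```python
from collections import defaultdict
from dataclasses import dataclass

# PLACEHOLDER prices in USD per million tokens as (input, output).
# These values are invented for illustration and are not real pricing.
PLACEHOLDER_PRICES = {
    "claude-sonnet-4-5": (1.0, 2.0),
    "claude-haiku-4-5": (1.0, 2.0),
    "gpt-4o": (1.0, 2.0),
    "gpt-4o-mini": (1.0, 2.0),
}


@dataclass
class ModelStats:
    calls: int = 0
    failures: int = 0
    input_tokens: int = 0
    output_tokens: int = 0
    total_latency_s: float = 0.0


class UsageTracker:
    """Accumulates per-model usage so routing decisions can be reviewed."""

    def __init__(self, prices_per_mtok):
        self.prices = prices_per_mtok
        self.stats = defaultdict(ModelStats)

    def record(self, model, input_tokens, output_tokens, latency_s):
        """Record one successful call."""
        s = self.stats[model]
        s.calls += 1
        s.input_tokens += input_tokens
        s.output_tokens += output_tokens
        s.total_latency_s += latency_s

    def record_failure(self, model):
        """Record one failed call, for example before a fallback is used."""
        self.stats[model].failures += 1

    def estimated_cost(self, model) -> float:
        """Estimated spend in USD for a model, based on the price table."""
        in_price, out_price = self.prices[model]
        s = self.stats[model]
        return (s.input_tokens * in_price + s.output_tokens * out_price) / 1_000_000

    def mean_latency(self, model) -> float:
        """Average latency in seconds over successful calls."""
        s = self.stats[model]
        return s.total_latency_s / s.calls if s.calls else 0.0


tracker = UsageTracker(PLACEHOLDER_PRICES)
tracker.record("claude-haiku-4-5", input_tokens=1_000_000, output_tokens=500_000, latency_s=0.5)
tracker.record("claude-haiku-4-5", input_tokens=1_000_000, output_tokens=500_000, latency_s=1.5)
tracker.record_failure("gpt-4o")

print(tracker.estimated_cost("claude-haiku-4-5"), tracker.mean_latency("claude-haiku-4-5"))
```

An orchestrator could call `record` after each successful response and `record_failure` in its fallback path, then use the totals to decide whether a task type should move to a cheaper or more reliable model.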

Conclusion

Multi-model orchestration in Azure AI Foundry enables you to leverage the best capabilities of Claude, GPT-4, and other models while optimizing for cost and performance. Intelligent routing, fallback strategies, and consensus mechanisms create robust, production-grade AI systems.