The AI landscape in 2026 has reached a critical inflection point. After years of impressive demos and proof-of-concepts, enterprises are finally moving beyond experimentation to deploy production-grade AI systems at scale. This shift represents more than just technological maturation. It signals a fundamental change in how organizations approach AI adoption, moving from individual productivity tools to comprehensive workflow orchestration that transforms entire business operations.

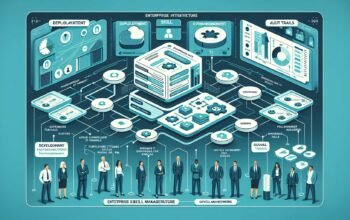

The challenge facing engineering teams is no longer whether AI can deliver value, but how to build reliable, repeatable infrastructure that enables rapid deployment of AI capabilities across the organization. The companies succeeding in this transition have adopted what industry leaders are calling the “AI factory” pattern, a systematic approach to AI development that mirrors the principles of modern software engineering.

The AI Factory Pattern: Infrastructure for Scale

The AI factory concept emerged from leading financial institutions that recognized a fundamental problem: building each AI application from scratch was too slow and too expensive. Banks like BBVA and JPMorgan Chase pioneered this approach starting in 2019, creating centralized platforms that combined technology infrastructure, standardized methods, reusable data pipelines, and previously developed algorithms.

What makes the AI factory pattern effective is its focus on reducing the time and complexity of deploying new AI capabilities. Instead of each team building custom infrastructure, the factory provides pre-configured environments, standard deployment pipelines, and reusable components that dramatically accelerate development cycles.

Core Components of an AI Factory

A production-ready AI factory consists of several interconnected components working together to enable rapid, reliable AI deployment:

Model Registry and Versioning: Centralized storage for trained models with comprehensive versioning, lineage tracking, and metadata management. This enables teams to understand model provenance, compare performance across versions, and roll back deployments when issues arise.

Data Pipeline Infrastructure: Standardized processes for data ingestion, transformation, and feature engineering. These pipelines ensure consistent data quality and make it easy to connect new data sources without rebuilding infrastructure.

Deployment Automation: Containerized deployment patterns with automated testing, monitoring, and rollback capabilities. This reduces deployment time from weeks to hours while maintaining reliability.

Observability and Monitoring: Comprehensive instrumentation for tracking model performance, data drift, and system health in production. This enables teams to detect and respond to issues before they impact users.
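To make the registry responsibilities concrete, here is a minimal in-memory sketch in Python. It is an illustration under simplifying assumptions, not a production registry: the names `InMemoryModelRegistry`, `promote`, `rollback`, and `lineage` are hypothetical, lineage is modeled as each version deriving from the one registered before it, and a real registry would persist this metadata and keep artifacts in external storage.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    model_id: str
    version: int
    artifact_uri: str               # location of the trained artifact (illustrative URI)
    parent_version: Optional[int]   # lineage: the version this one was derived from
    metrics: Dict[str, float] = field(default_factory=dict)


class InMemoryModelRegistry:
    """Hypothetical toy registry: versioning, lineage, and rollback of the production pointer."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[ModelVersion]] = {}
        self._production: Dict[str, int] = {}
        self._history: Dict[str, List[int]] = {}  # earlier production versions, newest last

    def register(self, model_id: str, artifact_uri: str,
                 metrics: Optional[Dict[str, float]] = None) -> ModelVersion:
        versions = self._versions.setdefault(model_id, [])
        # Simplification: each new version derives from the previously registered one
        parent = versions[-1].version if versions else None
        mv = ModelVersion(model_id, len(versions) + 1, artifact_uri, parent, dict(metrics or {}))
        versions.append(mv)
        return mv

    def get(self, model_id: str, version: int) -> Optional[ModelVersion]:
        return next((mv for mv in self._versions.get(model_id, []) if mv.version == version), None)

    def promote(self, model_id: str, version: int) -> None:
        if self.get(model_id, version) is None:
            raise KeyError(f"{model_id}:{version} is not registered")
        if model_id in self._production:
            self._history.setdefault(model_id, []).append(self._production[model_id])
        self._production[model_id] = version

    def rollback(self, model_id: str) -> int:
        history = self._history.get(model_id)
        if not history:
            raise RuntimeError(f"No earlier production version of {model_id}")
        self._production[model_id] = history.pop()
        return self._production[model_id]

    def production_version(self, model_id: str) -> Optional[int]:
        return self._production.get(model_id)

    def lineage(self, model_id: str, version: int) -> List[int]:
        chain: List[int] = []
        current = self.get(model_id, version)
        while current is not None:
            chain.append(current.version)
            parent = current.parent_version
            current = self.get(model_id, parent) if parent is not None else None
        return chain


registry = InMemoryModelRegistry()
registry.register("fraud-detector", "s3://models/fraud/1", {"auc": 0.91})
registry.register("fraud-detector", "s3://models/fraud/2", {"auc": 0.93})
registry.promote("fraud-detector", 1)
registry.promote("fraud-detector", 2)
print(registry.rollback("fraud-detector"))    # 1
print(registry.lineage("fraud-detector", 2))  # [2, 1]
```

Keeping a stack of earlier production versions is what makes rollback a pointer swap rather than a redeployment from scratch.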

Building an AI Factory: Node.js Implementation

Here is a foundational implementation of an AI factory service in Node.js that demonstrates the core patterns:

```javascript
// ai-factory-service.js
// ModelRegistry, DataPipeline, DeploymentOrchestrator and MonitoringService are
// application modules assumed to exist alongside this file.
import { ModelRegistry } from './model-registry.js';
import { DataPipeline } from './data-pipeline.js';
import { DeploymentOrchestrator } from './deployment-orchestrator.js';
import { MonitoringService } from './monitoring-service.js';

export class AIFactoryService {
  constructor(config) {
    this.modelRegistry = new ModelRegistry(config.registryConfig);
    this.dataPipeline = new DataPipeline(config.pipelineConfig);
    this.deploymentOrchestrator = new DeploymentOrchestrator(config.deploymentConfig);
    this.monitoring = new MonitoringService(config.monitoringConfig);
  }

  async deployModel(modelSpec) {
    // Callers such as updateModel may pin the ID; otherwise generate one
    const deploymentId = modelSpec.deploymentId ?? this.generateDeploymentId();

    try {
      // Validate model exists in registry
      const model = await this.modelRegistry.getModel(modelSpec.modelId, modelSpec.version);
      if (!model) {
        throw new Error(`Model ${modelSpec.modelId}:${modelSpec.version} not found in registry`);
      }

      // Prepare data pipeline
      const pipeline = await this.dataPipeline.configure({
        modelId: modelSpec.modelId,
        dataSource: modelSpec.dataSource,
        transformations: modelSpec.transformations
      });

      // Deploy with orchestrator
      const deployment = await this.deploymentOrchestrator.deploy({
        deploymentId,
        model,
        pipeline,
        replicas: modelSpec.replicas ?? 3,
        resourceLimits: modelSpec.resources
      });

      // Set up monitoring
      await this.monitoring.setupModelMonitoring({
        deploymentId,
        modelId: modelSpec.modelId,
        thresholds: modelSpec.performanceThresholds
      });

      return {
        deploymentId,
        status: 'deployed',
        endpoint: deployment.endpoint,
        metrics: deployment.initialMetrics
      };
    } catch (error) {
      await this.monitoring.recordDeploymentFailure({
        deploymentId,
        error: error.message,
        modelSpec
      });
      throw error;
    }
  }

  async updateModel(deploymentId, newVersion) {
    // Blue-green deployment: run the new version alongside the current one
    const currentDeployment = await this.deploymentOrchestrator.getDeployment(deploymentId);

    const newDeployment = await this.deployModel({
      ...currentDeployment.spec,
      version: newVersion,
      deploymentId: `${deploymentId}-${newVersion}`
    });

    // Gradually shift traffic to the new version
    await this.deploymentOrchestrator.gradualTrafficShift({
      from: deploymentId,
      to: newDeployment.deploymentId,
      duration: '15m'
    });

    // Compare the candidate against the baseline before committing
    const healthCheck = await this.monitoring.compareDeployments({
      baseline: deploymentId,
      candidate: newDeployment.deploymentId,
      duration: '10m'
    });

    if (healthCheck.passed) {
      await this.deploymentOrchestrator.completeShift(newDeployment.deploymentId);
      await this.deploymentOrchestrator.teardown(deploymentId);
      return { status: 'success', deploymentId: newDeployment.deploymentId };
    }

    await this.deploymentOrchestrator.rollback(deploymentId);
    await this.deploymentOrchestrator.teardown(newDeployment.deploymentId);
    throw new Error('Health check failed, rolled back to previous version');
  }

  generateDeploymentId() {
    // 9-character random base-36 suffix (slice replaces the deprecated substr)
    return `deploy-${Date.now()}-${Math.random().toString(36).slice(2, 11)}`;
  }
}
```

Python Implementation for ML-Heavy Workflows

Python remains the dominant language for machine learning workloads. Here is an equivalent implementation focusing on ML model management:

```python
# ai_factory_service.py
import secrets
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, List, Optional

# ModelRegistry, DataPipeline, DeploymentOrchestrator and MonitoringService are
# assumed to be provided by the application (Python counterparts of the
# Node.js modules above).


@dataclass
class ModelSpec:
    model_id: str
    version: str
    data_source: str
    transformations: List[Dict]
    replicas: int = 3
    resources: Optional[Dict] = None
    performance_thresholds: Optional[Dict] = None
    deployment_id: Optional[str] = None  # lets update_model pin the new deployment's ID


class AIFactoryService:
    def __init__(self, config: Dict):
        self.model_registry = ModelRegistry(config['registry_config'])
        self.data_pipeline = DataPipeline(config['pipeline_config'])
        self.deployment_orchestrator = DeploymentOrchestrator(config['deployment_config'])
        self.monitoring = MonitoringService(config['monitoring_config'])

    async def deploy_model(self, model_spec: ModelSpec) -> Dict:
        deployment_id = model_spec.deployment_id or self._generate_deployment_id()
        try:
            # Validate model in registry
            model = await self.model_registry.get_model(
                model_spec.model_id, model_spec.version
            )
            if not model:
                raise ValueError(
                    f"Model {model_spec.model_id}:{model_spec.version} not found"
                )

            # Configure data pipeline
            pipeline = await self.data_pipeline.configure(
                model_id=model_spec.model_id,
                data_source=model_spec.data_source,
                transformations=model_spec.transformations,
            )

            # Deploy with orchestrator
            deployment = await self.deployment_orchestrator.deploy(
                deployment_id=deployment_id,
                model=model,
                pipeline=pipeline,
                replicas=model_spec.replicas,
                resource_limits=model_spec.resources,
            )

            # Set up monitoring
            await self.monitoring.setup_model_monitoring(
                deployment_id=deployment_id,
                model_id=model_spec.model_id,
                thresholds=model_spec.performance_thresholds,
            )

            return {
                'deployment_id': deployment_id,
                'status': 'deployed',
                'endpoint': deployment.endpoint,
                'metrics': deployment.initial_metrics,
            }
        except Exception as error:
            await self.monitoring.record_deployment_failure(
                deployment_id=deployment_id,
                error=str(error),
                model_spec=model_spec,
            )
            raise

    async def update_model(self, deployment_id: str, new_version: str) -> Dict:
        # Retrieve current deployment; its spec is assumed to be a dict of ModelSpec fields
        current_deployment = await self.deployment_orchestrator.get_deployment(deployment_id)

        # Deploy the new version alongside the old one (blue-green),
        # pinning its ID so the traffic shift can refer to it
        new_deployment = await self.deploy_model(
            ModelSpec(**{
                **current_deployment.spec,
                'version': new_version,
                'deployment_id': f"{deployment_id}-{new_version}",
            })
        )
        new_id = new_deployment['deployment_id']

        # Gradual traffic shift
        await self.deployment_orchestrator.gradual_traffic_shift(
            from_deployment=deployment_id,
            to_deployment=new_id,
            duration_minutes=15,
        )

        # Monitor and validate
        health_check = await self.monitoring.compare_deployments(
            baseline=deployment_id,
            candidate=new_id,
            duration_minutes=10,
        )

        if health_check['passed']:
            await self.deployment_orchestrator.complete_shift(new_id)
            await self.deployment_orchestrator.teardown(deployment_id)
            return {'status': 'success', 'deployment_id': new_id}

        await self.deployment_orchestrator.rollback(deployment_id)
        await self.deployment_orchestrator.teardown(new_id)
        raise RuntimeError('Health check failed, rolled back to previous version')

    def _generate_deployment_id(self) -> str:
        timestamp = datetime.now(timezone.utc).strftime('%Y%m%d%H%M%S')
        random_suffix = secrets.token_hex(4)
        return f"deploy-{timestamp}-{random_suffix}"
```

C# Implementation for Enterprise Integration

For organizations with .NET ecosystems, C# provides strong typing and excellent integration with Azure services:

```csharp
// AIFactoryService.cs
// ModelRegistry, DataPipeline, DeploymentOrchestrator, MonitoringService and the
// configuration/result types (FactoryConfiguration, PipelineConfig, DeploymentConfig,
// MonitoringConfig, TrafficShiftConfig, ComparisonConfig, DeploymentResult, UpdateResult)
// are assumed to be defined elsewhere in the application.
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public class ModelSpec
{
    public string ModelId { get; set; }
    public string Version { get; set; }
    public string DataSource { get; set; }
    public List<Dictionary<string, object>> Transformations { get; set; }
    public int Replicas { get; set; } = 3;
    public Dictionary<string, object> Resources { get; set; }
    public Dictionary<string, object> PerformanceThresholds { get; set; }
    // Optional: lets UpdateModelAsync pin the ID of the new deployment
    public string DeploymentId { get; set; }
}

public class AIFactoryService
{
    private readonly ModelRegistry _modelRegistry;
    private readonly DataPipeline _dataPipeline;
    private readonly DeploymentOrchestrator _deploymentOrchestrator;
    private readonly MonitoringService _monitoring;

    public AIFactoryService(FactoryConfiguration config)
    {
        _modelRegistry = new ModelRegistry(config.RegistryConfig);
        _dataPipeline = new DataPipeline(config.PipelineConfig);
        _deploymentOrchestrator = new DeploymentOrchestrator(config.DeploymentConfig);
        _monitoring = new MonitoringService(config.MonitoringConfig);
    }

    public async Task<DeploymentResult> DeployModelAsync(ModelSpec modelSpec)
    {
        var deploymentId = modelSpec.DeploymentId ?? GenerateDeploymentId();

        try
        {
            // Validate model exists in registry
            var model = await _modelRegistry.GetModelAsync(modelSpec.ModelId, modelSpec.Version);
            if (model == null)
            {
                throw new InvalidOperationException(
                    $"Model {modelSpec.ModelId}:{modelSpec.Version} not found in registry");
            }

            // Prepare data pipeline
            var pipeline = await _dataPipeline.ConfigureAsync(new PipelineConfig
            {
                ModelId = modelSpec.ModelId,
                DataSource = modelSpec.DataSource,
                Transformations = modelSpec.Transformations
            });

            // Deploy with orchestrator
            var deployment = await _deploymentOrchestrator.DeployAsync(new DeploymentConfig
            {
                DeploymentId = deploymentId,
                Model = model,
                Pipeline = pipeline,
                Replicas = modelSpec.Replicas,
                ResourceLimits = modelSpec.Resources
            });

            // Set up monitoring
            await _monitoring.SetupModelMonitoringAsync(new MonitoringConfig
            {
                DeploymentId = deploymentId,
                ModelId = modelSpec.ModelId,
                Thresholds = modelSpec.PerformanceThresholds
            });

            return new DeploymentResult
            {
                DeploymentId = deploymentId,
                Status = "deployed",
                Endpoint = deployment.Endpoint,
                Metrics = deployment.InitialMetrics
            };
        }
        catch (Exception ex)
        {
            await _monitoring.RecordDeploymentFailureAsync(deploymentId, ex.Message, modelSpec);
            throw;
        }
    }

    public async Task<UpdateResult> UpdateModelAsync(string deploymentId, string newVersion)
    {
        // Blue-green deployment pattern
        var currentDeployment = await _deploymentOrchestrator.GetDeploymentAsync(deploymentId);

        // Deploy new version alongside existing, under a predictable ID
        var newDeploymentId = $"{deploymentId}-{newVersion}";
        var newDeployment = await DeployModelAsync(new ModelSpec
        {
            DeploymentId = newDeploymentId,
            ModelId = currentDeployment.Spec.ModelId,
            Version = newVersion,
            DataSource = currentDeployment.Spec.DataSource,
            Transformations = currentDeployment.Spec.Transformations,
            Replicas = currentDeployment.Spec.Replicas,
            Resources = currentDeployment.Spec.Resources,
            PerformanceThresholds = currentDeployment.Spec.PerformanceThresholds
        });

        // Gradually shift traffic
        await _deploymentOrchestrator.GradualTrafficShiftAsync(new TrafficShiftConfig
        {
            FromDeployment = deploymentId,
            ToDeployment = newDeployment.DeploymentId,
            DurationMinutes = 15
        });

        // Monitor for issues
        var healthCheck = await _monitoring.CompareDeploymentsAsync(new ComparisonConfig
        {
            Baseline = deploymentId,
            Candidate = newDeployment.DeploymentId,
            DurationMinutes = 10
        });

        if (healthCheck.Passed)
        {
            await _deploymentOrchestrator.CompleteShiftAsync(newDeployment.DeploymentId);
            await _deploymentOrchestrator.TeardownAsync(deploymentId);
            return new UpdateResult
            {
                Status = "success",
                DeploymentId = newDeployment.DeploymentId
            };
        }

        await _deploymentOrchestrator.RollbackAsync(deploymentId);
        await _deploymentOrchestrator.TeardownAsync(newDeployment.DeploymentId);
        throw new InvalidOperationException("Health check failed, rolled back to previous version");
    }

    private string GenerateDeploymentId()
    {
        var timestamp = DateTimeOffset.UtcNow.ToUnixTimeSeconds();
        var randomSuffix = Guid.NewGuid().ToString("N").Substring(0, 8);
        return $"deploy-{timestamp}-{randomSuffix}";
    }
}
```

From Individual Tools to Workflow Orchestration

One of the most significant shifts in enterprise AI adoption is the move from treating AI as an individual productivity tool to embedding it into comprehensive workflow orchestration. This represents a fundamental change in how organizations think about AI value creation.

Early AI adoption focused on point solutions where individual contributors could use AI to enhance their personal productivity. Writing assistance, code completion, and research summarization exemplified this phase. While valuable, these applications left significant benefits on the table because they did not address the coordination costs that dominate knowledge work.

The next generation of enterprise AI systems coordinates entire workflows, connecting data across departments and moving projects from conception through completion. This requires architectural patterns that enable AI systems to maintain context across multiple steps, integrate with existing business systems, and coordinate actions across team boundaries.

Workflow Orchestration Architecture

Effective workflow orchestration requires several key architectural components working together:

State Management: Workflows span multiple steps that may execute over hours or days. The system must maintain consistent state across these steps, handling interruptions and failures gracefully.

Event-Driven Coordination: Rather than synchronous request-response patterns, workflow orchestration uses event-driven architecture where completing one step triggers the next. This enables better scalability and resilience.

Human-in-the-Loop Integration: Not every decision can or should be automated. Effective orchestration includes well-defined points where human judgment is required, capturing those decisions and incorporating them into the workflow.

Cross-System Integration: Real workflows span multiple systems. The orchestration layer must integrate with CRM, ERP, project management, and communication tools to create end-to-end automation.
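State management is the requirement most easily gotten wrong, so here is a minimal Python sketch of it under stated assumptions: a plain dict stands in for the durable state store, steps are synchronous functions, and `CheckpointedWorkflow` is a hypothetical name. State is checkpointed after every completed step, so a failure partway through can be retried without re-running the steps that already succeeded.

```python
import json
from typing import Any, Callable, Dict, List

# A step receives the accumulated context and returns new keys to merge into it
Step = Callable[[Dict[str, Any]], Dict[str, Any]]


class CheckpointedWorkflow:
    """Hypothetical helper: runs steps in order and checkpoints after each one.

    `store` stands in for a durable state store (a database row, a key-value
    entry); here it is a plain dict of JSON strings keyed by execution ID.
    """

    def __init__(self, steps: List[Step], store: Dict[str, str]) -> None:
        self.steps = steps
        self.store = store

    def run(self, execution_id: str, initial_context: Dict[str, Any]) -> Dict[str, Any]:
        saved = self.store.get(execution_id)
        # Resume from the last checkpoint if one exists, otherwise start fresh
        state = json.loads(saved) if saved else {"next_step": 0, "context": dict(initial_context)}
        while state["next_step"] < len(self.steps):
            step = self.steps[state["next_step"]]
            result = step(state["context"])  # if this raises, the stored checkpoint is untouched
            state["context"].update(result)  # outputs of one step feed the next
            state["next_step"] += 1
            self.store[execution_id] = json.dumps(state)
        return state["context"]


# Usage: the second step fails once (e.g. a flaky CRM call), then succeeds on retry
calls = {"classify": 0, "enrich": 0}

def classify(ctx):
    calls["classify"] += 1
    return {"category": "billing" if "invoice" in ctx["text"] else "other"}

def enrich(ctx):
    calls["enrich"] += 1
    if calls["enrich"] == 1:
        raise ConnectionError("CRM temporarily unavailable")
    return {"account_tier": "gold"}

store: Dict[str, str] = {}
workflow = CheckpointedWorkflow([classify, enrich], store)
try:
    workflow.run("exec-1", {"text": "invoice overdue"})
except ConnectionError:
    pass  # in a real system a retry scheduler would pick this up
result = workflow.run("exec-1", {"text": "invoice overdue"})
# classify ran once; the retry resumed at enrich
```

Checkpointing after the step rather than before means a crash can at worst repeat one step, so steps with external side effects should be idempotent.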

Workflow Orchestration Implementation

Here is a practical implementation of a workflow orchestration engine that demonstrates these patterns:

```javascript
// workflow-orchestrator.js
import { EventEmitter } from 'events';

export class WorkflowOrchestrator extends EventEmitter {
  constructor(config) {
    super();
    this.stateStore = config.stateStore;
    this.integrations = config.integrations;
    this.aiService = config.aiService;
    this.workflows = new Map();
  }

  registerWorkflow(workflowDefinition) {
    this.workflows.set(workflowDefinition.id, workflowDefinition);
  }

  async executeWorkflow(workflowId, initialContext) {
    const workflow = this.workflows.get(workflowId);
    if (!workflow) {
      throw new Error(`Workflow ${workflowId} not found`);
    }

    const executionId = this.generateExecutionId();
    const state = {
      executionId,
      workflowId,
      status: 'running',
      currentStep: 0,
      // Expose executionId to steps (human review events need it)
      context: { ...initialContext, executionId },
      startedAt: new Date(),
      steps: []
    };

    await this.stateStore.save(executionId, state);
    this.emit('workflow:started', { executionId, workflowId });

    try {
      await this.executeSteps(workflow, state);
      state.status = 'completed';
      state.completedAt = new Date();
      await this.stateStore.save(executionId, state);
      this.emit('workflow:completed', { executionId, state });
      return state;
    } catch (error) {
      state.status = 'failed';
      state.error = error.message;
      state.failedAt = new Date();
      await this.stateStore.save(executionId, state);
      this.emit('workflow:failed', { executionId, error });
      throw error;
    }
  }

  async executeSteps(workflow, state) {
    // Start at state.currentStep so a persisted execution can be resumed
    for (let i = state.currentStep; i < workflow.steps.length; i++) {
      const step = workflow.steps[i];
      state.currentStep = i;
      await this.stateStore.save(state.executionId, state);
      this.emit('step:started', { executionId: state.executionId, step: step.id });

      try {
        const stepResult = await this.executeStep(step, state.context);
        state.steps.push({
          stepId: step.id,
          status: 'completed',
          result: stepResult,
          completedAt: new Date()
        });

        // Merge step results into context and advance the checkpoint,
        // so a resume does not re-run this step
        state.context = { ...state.context, ...stepResult };
        state.currentStep = i + 1;
        await this.stateStore.save(state.executionId, state);
        this.emit('step:completed', {
          executionId: state.executionId,
          step: step.id,
          result: stepResult
        });
      } catch (error) {
        state.steps.push({
          stepId: step.id,
          status: 'failed',
          error: error.message,
          failedAt: new Date()
        });
        if (step.onError !== 'continue') {
          throw error;
        }
      }
    }
  }

  async executeStep(step, context) {
    switch (step.type) {
      case 'ai_analysis':
        return this.executeAIAnalysis(step, context);
      case 'integration':
        return this.executeIntegration(step, context);
      case 'human_review':
        return this.requestHumanReview(step, context);
      case 'conditional':
        return this.executeConditional(step, context);
      default:
        throw new Error(`Unknown step type: ${step.type}`);
    }
  }

  async executeAIAnalysis(step, context) {
    const prompt = this.interpolateTemplate(step.prompt, context);
    const response = await this.aiService.complete({
      prompt,
      model: step.model || 'default',
      parameters: step.parameters || {}
    });
    return { [step.outputKey]: response };
  }

  async executeIntegration(step, context) {
    const integration = this.integrations[step.integration];
    if (!integration) {
      throw new Error(`Integration ${step.integration} not configured`);
    }
    const params = this.interpolateObject(step.params, context);
    const result = await integration[step.action](params);
    return { [step.outputKey]: result };
  }

  async requestHumanReview(step, context) {
    const reviewData = this.interpolateObject(step.reviewData, context);
    return new Promise((resolve, reject) => {
      const timeout = setTimeout(() => {
        reject(new Error('Human review timeout'));
      }, step.timeout || 86400000); // 24 hours default

      // A listener (review UI, chat bot, ticketing hook) calls `callback` with the decision
      this.emit('human:review_required', {
        executionId: context.executionId,
        step: step.id,
        data: reviewData,
        callback: (decision) => {
          clearTimeout(timeout);
          resolve({ [step.outputKey]: decision });
        }
      });
    });
  }

  async executeConditional(step, context) {
    const branch = this.evaluateCondition(step.condition, context)
      ? step.thenSteps
      : step.elseSteps;
    if (!branch) return {};

    // Run the branch inline against a copy of the context and return the merged
    // results; calling executeSteps here would overwrite the parent's saved state
    let branchContext = { ...context };
    for (const branchStep of branch) {
      const result = await this.executeStep(branchStep, branchContext);
      branchContext = { ...branchContext, ...result };
    }
    return branchContext;
  }

  interpolateTemplate(template, context) {
    // Replace {{key}} with context[key]; unknown keys are left untouched
    return template.replace(/\{\{(\w+)\}\}/g, (match, key) =>
      context[key] !== undefined ? String(context[key]) : match
    );
  }

  interpolateObject(obj = {}, context) {
    const result = {};
    for (const [key, value] of Object.entries(obj)) {
      result[key] = typeof value === 'string'
        ? this.interpolateTemplate(value, context)
        : value;
    }
    return result;
  }

  evaluateCondition(condition, context) {
    // Simple "left operator right" evaluation with loose comparison.
    // In production, use a proper expression evaluator.
    const [left, operator, right] = condition.split(' ');
    const leftValue = context[left];
    const rightValue = right.startsWith('{{') ? context[right.slice(2, -2)] : right;

    switch (operator) {
      case '==': return leftValue == rightValue;
      case '!=': return leftValue != rightValue;
      case '>': return leftValue > rightValue;
      case '<': return leftValue < rightValue;
      default: throw new Error(`Unknown operator: ${operator}`);
    }
  }

  generateExecutionId() {
    return `exec-${Date.now()}-${Math.random().toString(36).slice(2, 11)}`;
  }
}
```

Repository Intelligence: Understanding Code Context

The explosion of software development activity in 2025 demonstrated both the promise and limitations of AI-assisted coding. GitHub reported 43 million monthly pull requests and 1 billion annual commits, representing 23% and 25% year-over-year growth respectively. Growth on this scale suggests that AI assistance is changing how software is built.

However, early AI coding assistants operated with limited context. They could understand individual files or functions but lacked awareness of broader codebase structure, historical decisions, and cross-file dependencies. This limitation meant developers still spent significant time explaining context or correcting suggestions that were syntactically correct but architecturally inappropriate.

Repository intelligence addresses this gap by analyzing entire codebases to understand not just individual lines of code but the relationships and history behind them. By examining patterns across the repository, these systems can determine what changed, why changes were made, and how different components interact.
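One simple historical signal for how components interact is co-change: files that are repeatedly modified in the same commit tend to be coupled, even when no import connects them. The Python sketch below counts co-changing file pairs. It assumes history exported as text shaped like the output of `git log --pretty=format:%H --name-only` (each commit's full hash on its own line, followed by the paths it touched); the function names are hypothetical, and detecting commits by a 40-character hex line is a simplification.

```python
import re
from collections import Counter
from itertools import combinations
from typing import Dict, Iterable, List, Set, Tuple

# A full 40-character SHA-1 commit hash on its own line starts a new commit
COMMIT_HASH = re.compile(r"^[0-9a-f]{40}$")


def parse_name_only_log(text: str) -> List[Set[str]]:
    """Split name-only git log text into one set of touched paths per commit."""
    commits: List[Set[str]] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if COMMIT_HASH.match(line):
            commits.append(set())
        elif commits:
            commits[-1].add(line)
    return commits


def co_change_pairs(commits: Iterable[Set[str]], min_support: int = 2) -> Dict[Tuple[str, str], int]:
    """Count how often each pair of files changes in the same commit."""
    counts: Counter = Counter()
    for files in commits:
        for pair in combinations(sorted(files), 2):
            counts[pair] += 1
    return {pair: n for pair, n in counts.items() if n >= min_support}


log = """\
1111111111111111111111111111111111111111
billing/invoice.py
billing/tax.py

2222222222222222222222222222222222222222
billing/invoice.py
billing/tax.py
docs/README.md

3333333333333333333333333333333333333333
docs/README.md
"""
pairs = co_change_pairs(parse_name_only_log(log))
print(pairs)  # {('billing/invoice.py', 'billing/tax.py'): 2}
```

In practice, very large commits (mass renames, formatting sweeps) would likely be filtered out first, since they pair every file with every other file and drown out real coupling.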

Building Repository Intelligence Systems

Creating effective repository intelligence requires analyzing multiple dimensions of code beyond syntax:

Structural Analysis: Understanding the architectural patterns, module boundaries, and dependency graphs that define how code is organized. This enables the system to suggest changes that respect existing architectural decisions.

Historical Analysis: Examining commit history, pull request discussions, and issue tracking to understand why code evolved in particular ways. This context prevents suggestions that repeat past mistakes or ignore documented constraints.

Usage Analysis: Identifying how different parts of the codebase are actually used in production, which components are critical versus experimental, and where technical debt has accumulated. This prioritizes suggestions based on real impact.

Team Knowledge: Incorporating code review patterns, team conventions, and domain expertise encoded in documentation and discussions. This ensures suggestions align with team practices and domain requirements.
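Structural analysis usually starts from a dependency graph. As a small illustration for a Python codebase, the sketch below uses the standard-library `ast` module to extract each file's imports, keeps only in-repository modules, and walks the graph backwards to find everything that depends on a given module. The repository is passed as a hypothetical mapping of module names to source text; relative imports, dynamic imports, and `from package import submodule` forms are not resolved, for brevity.

```python
import ast
from typing import Dict, Set


def module_imports(source: str) -> Set[str]:
    """Absolute module names imported anywhere in a Python source file."""
    names: Set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names.update(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            names.add(node.module)
    return names


def dependency_graph(files: Dict[str, str]) -> Dict[str, Set[str]]:
    """Map each in-repo module to the in-repo modules it imports (third-party imports dropped)."""
    internal = set(files)
    return {module: module_imports(src) & internal for module, src in files.items()}


def dependents(graph: Dict[str, Set[str]], target: str) -> Set[str]:
    """Modules that import `target` directly or transitively: its blast radius."""
    affected: Set[str] = set()
    frontier = [target]
    while frontier:
        current = frontier.pop()
        for module, deps in graph.items():
            if current in deps and module not in affected:
                affected.add(module)
                frontier.append(module)
    return affected


repo = {
    "billing.models": "import decimal\n",
    "billing.service": "from billing.models import Invoice\nimport requests\n",
    "api.routes": "import billing.service\n",
}
graph = dependency_graph(repo)
print(sorted(dependents(graph, "billing.models")))  # ['api.routes', 'billing.service']
```

A suggestion engine can use the dependents set to flag changes whose impact crosses module boundaries, which is the kind of architectural context that file-local assistants miss.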

Production Deployment Patterns

Moving AI systems from development to production requires careful attention to reliability, observability, and operational concerns that may not be apparent during initial development.

Canary Deployments for AI Models

AI model behavior can be difficult to predict in production environments with real user data and edge cases not present in test datasets. Canary deployments gradually roll out changes while monitoring for issues:

```python
# canary_deployment.py
import asyncio
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class CanaryConfig:
    baseline_deployment: str
    canary_deployment: str
    stages: List[Dict[str, int]]  # [{"traffic_percent": 10, "duration_minutes": 30}, ...]
    success_criteria: Dict[str, float]


class CanaryFailureException(Exception):
    def __init__(self, message: str, metrics: Dict):
        super().__init__(message)
        self.metrics = metrics


class CanaryDeploymentManager:
    def __init__(self, load_balancer, monitoring_service):
        self.load_balancer = load_balancer
        self.monitoring = monitoring_service

    async def execute_canary_deployment(self, config: CanaryConfig) -> Dict:
        deployment_id = f"canary-{config.canary_deployment}"
        metrics: Dict[str, float] = {}  # stays empty if no stages are configured

        try:
            # Start with no traffic to canary
            await self.load_balancer.set_traffic_split({
                config.baseline_deployment: 100,
                config.canary_deployment: 0,
            })

            # Execute each stage
            for stage in config.stages:
                traffic_percent = stage['traffic_percent']
                duration_minutes = stage['duration_minutes']

                # Shift traffic to canary
                await self.load_balancer.set_traffic_split({
                    config.baseline_deployment: 100 - traffic_percent,
                    config.canary_deployment: traffic_percent,
                })

                # Let the stage run for its full duration before judging it
                await asyncio.sleep(duration_minutes * 60)

                # Check success criteria over the stage window
                metrics = await self.monitoring.get_deployment_metrics(
                    config.canary_deployment,
                    duration_minutes=duration_minutes,
                )
                if not self._meets_success_criteria(metrics, config.success_criteria):
                    raise CanaryFailureException(
                        f"Canary failed at {traffic_percent}% traffic", metrics
                    )

            # All stages passed, complete rollout
            await self.load_balancer.set_traffic_split({
                config.baseline_deployment: 0,
                config.canary_deployment: 100,
            })
            return {
                'status': 'success',
                'deployment_id': deployment_id,
                'final_metrics': metrics,
            }

        except CanaryFailureException as e:
            # Roll back to baseline
            await self.load_balancer.set_traffic_split({
                config.baseline_deployment: 100,
                config.canary_deployment: 0,
            })
            await self.monitoring.record_canary_failure(deployment_id, e.metrics)
            raise

    def _meets_success_criteria(
        self,
        metrics: Dict[str, float],
        criteria: Dict[str, float],
    ) -> bool:
        # Every criterion is treated as a minimum (e.g. accuracy >= threshold).
        # Metrics where lower is better, such as latency or error rate,
        # would need an inverted comparison.
        for metric_name, threshold in criteria.items():
            if metric_name not in metrics:
                return False
            if metrics[metric_name] < threshold:
                return False
        return True
```

Model Performance Monitoring

AI models can degrade in production due to data drift, changing user behavior, or shifts in underlying data distributions. Comprehensive monitoring detects these issues before they impact users:

```javascript
// model-monitoring.js
export class ModelMonitoringService {
  constructor(config) {
    this.metricsStore = config.metricsStore;
    this.alerting = config.alertingService;
    this.thresholds = config.thresholds;
  }

  async recordPrediction(deploymentId, prediction) {
    const metrics = {
      deploymentId,
      timestamp: new Date(),
      predictionId: prediction.id,
      latency: prediction.latency,
      confidence: prediction.confidence,
      features: prediction.features,
      prediction: prediction.result
    };
    await this.metricsStore.store(metrics);

    // Check for anomalies
    await this.checkAnomalies(deploymentId, metrics);
  }

  async recordFeedback(predictionId, feedback) {
    const prediction = await this.metricsStore.getPrediction(predictionId);
    if (!prediction) {
      throw new Error(`Unknown prediction ${predictionId}`);
    }
    await this.metricsStore.storeFeedback({
      predictionId,
      feedback,
      timestamp: new Date()
    });

    // Recalculate accuracy metrics
    await this.updateAccuracyMetrics(prediction.deploymentId);
  }

  async checkAnomalies(deploymentId, currentMetrics) {
    // Baseline statistics over the last 7 days
    const baseline = await this.metricsStore.getBaselineStats(deploymentId, '7d');

    // Check latency against the baseline p99
    if (currentMetrics.latency > baseline.latency.p99) {
      await this.alerting.sendAlert({
        severity: 'warning',
        type: 'high_latency',
        deploymentId,
        current: currentMetrics.latency,
        threshold: baseline.latency.p99
      });
    }

    // Check prediction confidence
    if (currentMetrics.confidence < this.thresholds.minConfidence) {
      await this.alerting.sendAlert({
        severity: 'warning',
        type: 'low_confidence',
        deploymentId,
        current: currentMetrics.confidence,
        threshold: this.thresholds.minConfidence
      });
    }

    // Check for feature drift
    const drift = await this.detectFeatureDrift(currentMetrics.features, baseline.features);
    if (drift.score > this.thresholds.maxDrift) {
      await this.alerting.sendAlert({
        severity: 'warning',
        type: 'feature_drift',
        deploymentId,
        driftScore: drift.score,
        threshold: this.thresholds.maxDrift,
        driftedFeatures: drift.features
      });
    }
  }

  async detectFeatureDrift(currentFeatures, baselineFeatures) {
    const driftScores = {};
    let totalDrift = 0;

    for (const [featureName, currentValue] of Object.entries(currentFeatures)) {
      const baselineStats = baselineFeatures[featureName];
      if (!baselineStats) continue;

      // Per-feature drift score (z-score heuristic, see calculateDrift)
      const drift = this.calculateDrift(currentValue, baselineStats);
      driftScores[featureName] = drift;
      totalDrift += drift;
    }

    // Average only over features that had a baseline to compare against
    const compared = Object.keys(driftScores).length;
    const avgDrift = compared > 0 ? totalDrift / compared : 0;

    const driftedFeatures = Object.entries(driftScores)
      .filter(([, score]) => score > this.thresholds.featureDriftThreshold)
      .map(([name]) => name);

    return { score: avgDrift, features: driftedFeatures, details: driftScores };
  }

  calculateDrift(currentValue, baselineStats) {
    // Simplified: distance of a single value from the baseline mean in standard
    // deviations, scaled so that 3 sigma or more maps to 1.
    // In production, compare distributions with a proper statistical test.
    if (!baselineStats.stdDev) {
      return currentValue === baselineStats.mean ? 0 : 1;
    }
    const zScore = Math.abs((currentValue - baselineStats.mean) / baselineStats.stdDev);
    return Math.min(zScore / 3, 1); // Normalize to 0-1
  }

  async updateAccuracyMetrics(deploymentId) {
    const recentPredictions = await this.metricsStore.getPredictionsWithFeedback(
      deploymentId,
      '1h'
    );
    if (recentPredictions.length < 10) return; // Need minimum sample size

    const correct = recentPredictions.filter(p => p.feedback.correct).length;
    const accuracy = correct / recentPredictions.length;

    await this.metricsStore.storeAccuracyMetric({
      deploymentId,
      timestamp: new Date(),
      accuracy,
      sampleSize: recentPredictions.length
    });

    if (accuracy < this.thresholds.minAccuracy) {
      await this.alerting.sendAlert({
        severity: 'critical',
        type: 'accuracy_degradation',
        deploymentId,
        current: accuracy,
        threshold: this.thresholds.minAccuracy
      });
    }
  }
}
```

Real-World Case Studies

Understanding how leading organizations implement these patterns provides concrete guidance for engineering teams planning their own deployments.

Financial Services: BBVA AI Factory

BBVA established one of the first AI factories in 2019, focusing initially on credit decisioning and fraud prevention. Their implementation demonstrates the value of centralized infrastructure for AI deployment.

The bank created a standardized platform combining data pipelines, model training infrastructure, and deployment automation. This enabled individual teams to deploy new AI capabilities in days rather than months. By 2026, BBVA reports deploying over 100 AI models in production, with the AI factory reducing average deployment time by 75%.

Key success factors included executive sponsorship that allocated dedicated infrastructure resources, creation of shared data assets accessible across teams, and investment in MLOps expertise that established deployment standards and best practices.

Healthcare: Clinical Workflow Orchestration

A large healthcare system implemented workflow orchestration to address gaps in care coordination. Their system integrates AI analysis of patient records with scheduling, care team coordination, and follow-up management.

The workflow begins when AI systems analyze patient records to identify individuals requiring preventive care or follow-up. The orchestration engine then coordinates scheduling, ensuring appropriate providers are available, patient preferences are respected, and necessary preparation occurs. After appointments, the system manages follow-up tasks, documentation, and handoffs to other care team members.

Early results show a 30% improvement in care coordination metrics and a 25% reduction in missed follow-ups. The implementation required significant attention to privacy and security, with comprehensive audit logging and access controls integrated throughout the workflow.
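One way to integrate audit logging and access controls at every step is to run each workflow action through a single guard that checks the caller's role and records the decision, whether allowed or denied. The sketch below assumes a simple role-to-action permission map and an in-memory log; `Role`, `PERMISSIONS`, and `AuditedWorkflow` are hypothetical names, and a real deployment would persist entries to tamper-evident storage rather than an array.

```typescript
// Hypothetical roles and workflow actions.
type Role = "scheduler" | "clinician" | "analyst";
type Action = "read_record" | "book_appointment" | "write_note";

// Hypothetical permission map: which roles may perform which workflow actions.
const PERMISSIONS: Record<Role, ReadonlySet<Action>> = {
  scheduler: new Set<Action>(["book_appointment"]),
  clinician: new Set<Action>(["read_record", "book_appointment", "write_note"]),
  analyst: new Set<Action>(["read_record"]),
};

interface AuditEntry {
  at: Date;
  actor: string;
  role: Role;
  action: Action;
  patientId: string;
  allowed: boolean;
}

class AuditedWorkflow {
  // In-memory, append-only log for illustration only.
  private readonly log: AuditEntry[] = [];

  // Records the authorization decision for every attempt, then runs `step` only if it was allowed.
  perform<T>(actor: string, role: Role, action: Action, patientId: string, step: () => T): T {
    const allowed = PERMISSIONS[role].has(action);
    this.log.push({ at: new Date(), actor, role, action, patientId, allowed });
    if (!allowed) {
      throw new Error(`${role} may not ${action}`);
    }
    return step();
  }

  // All logged attempts that touched a given patient's data.
  entriesFor(patientId: string): readonly AuditEntry[] {
    return this.log.filter((e) => e.patientId === patientId);
  }
}
```

Recording denied attempts alongside allowed ones means the log shows who tried to reach a record, not only who succeeded.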

Software Development: GitHub Repository Intelligence

GitHub pioneered repository intelligence by analyzing patterns across millions of public and private repositories. Its system provides context-aware code suggestions that respect architectural patterns, coding conventions, and historical decisions.

The implementation processes repository structure, commit history, code review feedback, and issue discussions to build a comprehensive understanding of each codebase. This enables suggestions that align with team practices and avoid patterns that proved problematic in the past.
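The idea of avoiding patterns that proved problematic can be made concrete with a toy example. The sketch below is not GitHub's method; it assumes hypothetical `Commit` and `ReviewComment` inputs, treats a commit whose message starts with `Revert ` (git's default revert message format) as a sign that a change to those files was undone, and treats review comments repeated at least `minRepeats` times on the same file as team conventions. `cautionsFor` then returns the notes a suggestion engine could attach to an edit touching that file.

```typescript
// Hypothetical inputs; a real system would pull these from version control and code review data.
interface Commit {
  sha: string;
  message: string;
  files: string[];
}

interface ReviewComment {
  file: string;
  body: string;
}

interface RepoContext {
  revertCounts: Map<string, number>;        // file -> number of revert commits that touched it
  recurringFeedback: Map<string, string[]>; // file -> normalized comments seen at least minRepeats times
}

// Heuristic (assumption): `git revert` writes messages of the form `Revert "<subject>"`.
function isRevert(c: Commit): boolean {
  return c.message.startsWith("Revert ");
}

function buildRepoContext(commits: Commit[], reviews: ReviewComment[], minRepeats = 2): RepoContext {
  const revertCounts = new Map<string, number>();
  for (const c of commits) {
    if (!isRevert(c)) continue;
    for (const f of c.files) revertCounts.set(f, (revertCounts.get(f) ?? 0) + 1);
  }

  // Count identical (file, comment) pairs after normalizing case and whitespace.
  const counts = new Map<string, Map<string, number>>();
  for (const r of reviews) {
    const perFile = counts.get(r.file) ?? new Map<string, number>();
    const body = r.body.trim().toLowerCase();
    perFile.set(body, (perFile.get(body) ?? 0) + 1);
    counts.set(r.file, perFile);
  }

  // Keep only feedback that recurs, so one-off remarks are ignored.
  const recurringFeedback = new Map<string, string[]>();
  counts.forEach((perFile, file) => {
    const repeated = Array.from(perFile.entries())
      .filter(([, n]) => n >= minRepeats)
      .map(([body]) => body);
    if (repeated.length > 0) recurringFeedback.set(file, repeated);
  });
  return { revertCounts, recurringFeedback };
}

// History-derived cautions for a proposed edit to `file`.
function cautionsFor(ctx: RepoContext, file: string): string[] {
  const notes: string[] = [];
  const reverts = ctx.revertCounts.get(file) ?? 0;
  if (reverts > 0) notes.push(`${reverts} past change(s) to ${file} were reverted`);
  for (const fb of ctx.recurringFeedback.get(file) ?? []) notes.push(`recurring review feedback: ${fb}`);
  return notes;
}
```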

Developers report that repository intelligence reduces time spent explaining context and correcting inappropriate suggestions. The system particularly excels at suggesting changes that maintain consistency across the codebase and respect established architectural boundaries.

Implementation Roadmap

Organizations planning to implement these patterns should follow a phased approach that builds capability incrementally while delivering value at each stage.

Phase 1: Foundation (Months 1 to 3). Establish core infrastructure, including a model registry, basic deployment automation, and monitoring foundations. Focus on deploying 2 to 3 pilot AI applications to validate the infrastructure and identify gaps.

Phase 2: Standardization (Months 3 to 6). Document deployment patterns, create reusable templates and components, and establish governance processes. Expand to 10 to 15 AI applications while refining operational procedures.

Phase 3: Scale (Months 6 to 12). Enable self-service deployment for teams, implement advanced orchestration capabilities, and optimize for efficiency. Target 50+ AI applications in production with consistent operational excellence.

Phase 4: Innovation (Months 12+). Leverage established infrastructure to rapidly experiment with new AI capabilities, implement advanced patterns like workflow orchestration and repository intelligence, and continuously optimize based on operational data.
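The phase boundaries and application targets in this roadmap can also be kept as data, so a platform team can check progress against the plan. This sketch measures time as elapsed months since kickoff (so Foundation covers month 0 up to 3), assigns the shared boundary months (3 and 6) to the later phase, and uses the lower end of each stated target (2, 10, and 50 applications); those interpretations, and the names `ROADMAP`, `phaseAt`, and `appsShortfall`, are assumptions for illustration.

```typescript
interface Phase {
  name: string;
  startMonth: number;      // elapsed months since kickoff, inclusive
  endMonth: number | null; // exclusive; null means open-ended
  minApps: number | null;  // lower end of the stated application target, if any
}

// Month ranges and targets from the roadmap; boundary months go to the later phase (assumption).
const ROADMAP: readonly Phase[] = [
  { name: "Foundation", startMonth: 0, endMonth: 3, minApps: 2 },
  { name: "Standardization", startMonth: 3, endMonth: 6, minApps: 10 },
  { name: "Scale", startMonth: 6, endMonth: 12, minApps: 50 },
  { name: "Innovation", startMonth: 12, endMonth: null, minApps: null },
];

// Returns the phase that contains the given elapsed month.
function phaseAt(elapsedMonths: number): Phase {
  const phase = ROADMAP.find(
    (p) => elapsedMonths >= p.startMonth && (p.endMonth === null || elapsedMonths < p.endMonth),
  );
  if (!phase) throw new RangeError(`no phase covers month ${elapsedMonths}`);
  return phase;
}

// Additional production applications needed to reach the current phase's target (0 if met or no target).
function appsShortfall(elapsedMonths: number, appsInProduction: number): number {
  const { minApps } = phaseAt(elapsedMonths);
  return minApps === null ? 0 : Math.max(0, minApps - appsInProduction);
}
```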

Conclusion

The transition from AI demos to production systems represents a maturation of enterprise AI adoption. Organizations succeeding in this transition share common characteristics: systematic infrastructure investment, focus on workflow orchestration rather than point solutions, and commitment to operational excellence.

The AI factory pattern provides a proven approach to scaling AI deployment, reducing time from concept to production while maintaining reliability. Workflow orchestration unlocks value by coordinating activities across team and system boundaries. Repository intelligence and similar context-aware capabilities demonstrate how AI systems become more valuable as they understand the specific environment in which they operate.

Engineering teams implementing these patterns should start with solid infrastructure foundations, validate approaches with pilot projects, and scale systematically based on operational learnings. The code examples and architectural patterns presented here provide starting points that teams can adapt to their specific requirements and constraints.

The shift from hype to pragmatism in enterprise AI creates opportunities for organizations willing to invest in proper implementation. Those that build systematic capabilities rather than pursuing one-off projects will be positioned to capitalize on AI advances for years to come.

References

- IBM Think – The Trends That Will Shape AI and Tech in 2026

- Microsoft News – What’s Next in AI: 7 Trends to Watch in 2026

- TechCrunch – In 2026, AI Will Move from Hype to Pragmatism

- MIT Sloan Management Review – Five Trends in AI and Data Science for 2026

- MIT Technology Review – What’s Next for AI in 2026