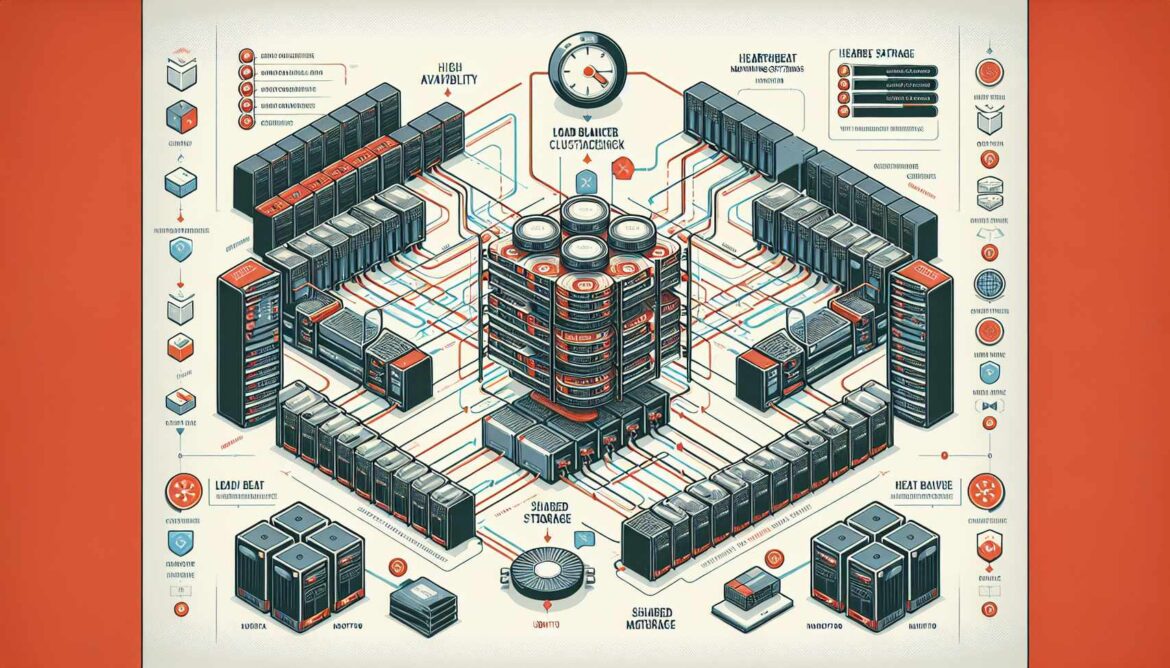

Welcome to Part 15 of our comprehensive NGINX on Ubuntu series! In this part we implement high availability with Keepalived: two NGINX nodes share a virtual IP, health checks watch the active node, and automatic failover keeps downtime to a few seconds.

High Availability Fundamentals

High Availability (HA) ensures continuous service operation through redundancy, automatic failover, and fault tolerance, minimizing downtime and maintaining service quality during failures.

graph TD

A[Clients] --> B[Virtual IP - VIP]

B --> C[Primary NGINX Node]

B --> D[Secondary NGINX Node]

C --> E[Shared Backend Pool]

D --> E

E --> F[Backend Server 1]

E --> G[Backend Server 2]

E --> H[Backend Server 3]

I[HA Components] --> J[Keepalived]

I --> K[Heartbeat Monitoring]

I --> L[Automatic Failover]

I --> M[Shared Configuration]

I --> N[Health Checks]

style B fill:#e1f5fe

style C fill:#e8f5e8

style D fill:#fff3e0

style I fill:#e3f2fd

Keepalived Setup

# Install Keepalived on both NGINX nodes

sudo apt update

sudo apt install keepalived -y

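# Optional: IP forwarding is only needed if these nodes also route packets (a reverse proxy alone does not need it)

echo 'net.ipv4.ip_forward = 1' | sudo tee -a /etc/sysctl.conf

# Required: lets NGINX bind to the VIP on the node that does not currently hold it

echo 'net.ipv4.ip_nonlocal_bind = 1' | sudo tee -a /etc/sysctl.conf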

sudo sysctl -p

# Check network interface

ip addr show

Primary Node Configuration

# Configure Keepalived on Primary Node

sudo nano /etc/keepalived/keepalived.conf

# Keepalived configuration for Primary Node

global_defs {

router_id NGINX_PRIMARY

script_user root

enable_script_security

}

# NGINX health check script

vrrp_script nginx_check {

script "/usr/local/bin/nginx-health-check.sh"

interval 2

timeout 3

rise 2

fall 3

weight -20 # Larger than the 10-point gap between the two priorities (110 vs 100)

}

# Virtual Router Redundancy Protocol instance

vrrp_instance NGINX_HA {

state MASTER

interface eth0 # Change to your interface

virtual_router_id 100

priority 110

advert_int 1

authentication {

auth_type PASS

auth_pass nginx_ha_2024 # Keepalived uses only the first 8 characters

}

# Virtual IP addresses

virtual_ipaddress {

192.168.1.100/24 # Your VIP

}

# Track scripts

track_script {

nginx_check

}

# Notification scripts

notify_master "/usr/local/bin/nginx-master-notify.sh"

notify_backup "/usr/local/bin/nginx-backup-notify.sh"

notify_fault "/usr/local/bin/nginx-fault-notify.sh"

}

Secondary Node Configuration
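
How quickly the secondary takes over depends on two values in its configuration: advert_int and its own priority. VRRP treats the master as down after three missed advertisements plus a priority-based skew of (256 - priority) / 256 of one interval. The sketch below works that formula out in shell. The function name master_down_interval is an illustrative assumption, not something Keepalived provides.

```shell
#!/bin/bash
# master_down_interval ADVERT_INT PRIORITY
# Prints the seconds a backup waits without advertisements before it
# promotes itself: 3 * advert_int + skew, where the skew is
# (256 - priority) / 256 of one advertisement interval.
master_down_interval() {
    awk -v a="$1" -v p="$2" 'BEGIN { printf "%.3f\n", 3 * a + (256 - p) / 256 * a }'
}

# Values used in this article: advert_int 1, secondary priority 100
master_down_interval 1 100
```

With advert_int 1, the secondary should claim the VIP a little under four seconds after the primary stops advertising. Raising advert_int lengthens that window proportionally.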

# Configure Keepalived on Secondary Node

sudo nano /etc/keepalived/keepalived.conf

# Keepalived configuration for Secondary Node

global_defs {

router_id NGINX_SECONDARY

script_user root

enable_script_security

}

# NGINX health check script

vrrp_script nginx_check {

script "/usr/local/bin/nginx-health-check.sh"

interval 2

timeout 3

rise 2

fall 3

weight -20 # Larger than the 10-point gap between the two priorities (110 vs 100)

}

# Virtual Router Redundancy Protocol instance

vrrp_instance NGINX_HA {

state BACKUP

interface eth0 # Change to your interface

virtual_router_id 100

priority 100 # Lower priority than primary

advert_int 1

authentication {

auth_type PASS

auth_pass nginx_ha_2024 # Keepalived uses only the first 8 characters

}

# Virtual IP addresses (same as primary)

virtual_ipaddress {

192.168.1.100/24

}

# Track scripts

track_script {

nginx_check

}

# Notification scripts

notify_master "/usr/local/bin/nginx-master-notify.sh"

notify_backup "/usr/local/bin/nginx-backup-notify.sh"

notify_fault "/usr/local/bin/nginx-fault-notify.sh"

}

Health Check Script
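
The result of this script feeds into the VRRP election through the weight setting: while the check fails, a negative weight is added to the node's priority, and the secondary only takes the VIP if its own priority is then strictly higher. The sketch below models that arithmetic for the priorities used in this article (110 and 100) and compares two weights. The function names are illustrative assumptions, and the model deliberately ignores tie-breaking by IP address.

```shell
#!/bin/bash
# effective_priority BASE WEIGHT SCRIPT_OK
# Prints the priority a node advertises. SCRIPT_OK is 1 when the tracked
# script passes and 0 when it fails. A negative weight applies while the
# script fails; a positive weight applies while it passes.
effective_priority() {
    local base="$1" weight="$2" script_ok="$3"
    if [ "$weight" -lt 0 ] && [ "$script_ok" -eq 0 ]; then
        echo $(( base + weight ))
    elif [ "$weight" -gt 0 ] && [ "$script_ok" -eq 1 ]; then
        echo $(( base + weight ))
    else
        echo "$base"
    fi
}

# backup_takes_over MASTER_PRIORITY BACKUP_PRIORITY
# Succeeds only when the backup's priority is strictly higher; a tie
# leaves the current master in place in this simplified model.
backup_takes_over() {
    [ "$2" -gt "$1" ]
}

# Primary (110) with a failing health check, secondary healthy at 100
for weight in -10 -20; do
    primary=$(effective_priority 110 "$weight" 0)
    if backup_takes_over "$primary" 100; then
        echo "weight $weight: primary drops to $primary, secondary (100) takes the VIP"
    else
        echo "weight $weight: primary drops to $primary, secondary (100) does not take over"
    fi
done
```

The takeaway is that the absolute value of the weight should be larger than the gap between the two configured priorities. Otherwise a failed NGINX on the primary may leave the VIP where it is.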

# Create NGINX health check script (same on both nodes)

sudo nano /usr/local/bin/nginx-health-check.sh

#!/bin/bash

# NGINX Health Check for Keepalived

LOG_FILE="/var/log/nginx/ha-health.log"

log_event() {

echo "[$(date '+%Y-%m-%d %H:%M:%S')] $1" >> "$LOG_FILE"

}

# Check if NGINX process is running

if ! pgrep nginx > /dev/null; then

log_event "NGINX process not running"

exit 1

fi

# Check if NGINX responds to HTTP requests (curl's limit stays below the 3-second vrrp_script timeout)

if ! curl -f -s --max-time 1 http://localhost/health > /dev/null 2>&1; then

log_event "NGINX not responding to HTTP requests"

exit 1

fi

# Check NGINX configuration

if ! nginx -t > /dev/null 2>&1; then

log_event "NGINX configuration test failed"

exit 1

fi

# Check if we can bind to the VIP (when master)

VIP="192.168.1.100"

if ip addr show | grep -q "$VIP"; then

if ! curl -f -s --max-time 1 "http://$VIP/health" > /dev/null 2>&1; then

log_event "Cannot serve content on VIP $VIP"

exit 1

fi

fi

# Check system load (1-minute average from /proc/loadavg; awk does the decimal comparison, so bc is not required)

LOAD=$(cut -d' ' -f1 /proc/loadavg)

if awk -v load="$LOAD" 'BEGIN { exit !(load > 10.0) }'; then

log_event "System load too high: $LOAD"

exit 1

fi

# Success is not logged: at a 2-second interval it would add about 43,000 lines per day

exit 0

# Make executable: sudo chmod +x /usr/local/bin/nginx-health-check.sh

Notification Scripts
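
The three scripts in this section share most of their logic. As an alternative sketch, Keepalived also offers a generic notify hook that runs on every state change and appends four arguments to the configured script: the type (GROUP or INSTANCE), the name, the target state, and the priority. The handler below dispatches on the state. The function name describe_transition and the idea of registering it as /usr/local/bin/nginx-notify.sh are assumptions for illustration.

```shell
#!/bin/bash
# Hypothetical combined handler, registered in keepalived.conf with:
#   notify "/usr/local/bin/nginx-notify.sh"
# Keepalived appends four arguments: TYPE NAME STATE PRIORITY.

# describe_transition TYPE NAME STATE PRIORITY
# Prints one log line per transition; returns 1 for states this sketch
# does not handle.
describe_transition() {
    local type="$1" name="$2" state="$3" priority="$4"
    case "$state" in
        MASTER) echo "$type $name -> MASTER (priority $priority): this node holds the VIP" ;;
        BACKUP) echo "$type $name -> BACKUP (priority $priority): standing by" ;;
        FAULT)  echo "$type $name -> FAULT (priority $priority): check interface and NGINX" ;;
        *)      echo "$type $name -> unhandled state '$state'" >&2; return 1 ;;
    esac
}

# Only act when called with the four arguments Keepalived supplies
if [ "$#" -eq 4 ]; then
    describe_transition "$@"
fi
```

A single handler keeps the log format in one place. The separate notify_master, notify_backup, and notify_fault scripts remain the better fit when each state needs very different actions.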

# Create master notification script

sudo nano /usr/local/bin/nginx-master-notify.sh

#!/bin/bash

# NGINX Master Notification Script

HOSTNAME=$(hostname)

VIP="192.168.1.100"

LOG_FILE="/var/log/nginx/ha-events.log"

log_event() {

echo "[$(date '+%Y-%m-%d %H:%M:%S')] $1" | tee -a "$LOG_FILE"

}

log_event "MASTER: $HOSTNAME became MASTER for VIP $VIP"

# Ensure NGINX is running

if ! systemctl is-active --quiet nginx; then

log_event "Starting NGINX service"

systemctl start nginx

fi

# Prepare the notification text (nothing is sent yet; pass SUBJECT and MESSAGE to your mail or chat tool here)

SUBJECT="NGINX HA: $HOSTNAME is now MASTER"

MESSAGE="$HOSTNAME has become MASTER for VIP $VIP at $(date)"

log_event "Master transition completed successfully"

# Make executable: sudo chmod +x /usr/local/bin/nginx-master-notify.sh

# Create backup notification script

sudo nano /usr/local/bin/nginx-backup-notify.sh

#!/bin/bash

# NGINX Backup Notification Script

HOSTNAME=$(hostname)

VIP="192.168.1.100"

LOG_FILE="/var/log/nginx/ha-events.log"

log_event() {

echo "[$(date '+%Y-%m-%d %H:%M:%S')] $1" | tee -a "$LOG_FILE"

}

log_event "BACKUP: $HOSTNAME became BACKUP for VIP $VIP"

# Ensure NGINX is running for health checks

if ! systemctl is-active --quiet nginx; then

log_event "Starting NGINX service for health checks"

systemctl start nginx

fi

log_event "Backup transition completed"

# Make executable: sudo chmod +x /usr/local/bin/nginx-backup-notify.sh

# Create fault notification script

sudo nano /usr/local/bin/nginx-fault-notify.sh

#!/bin/bash

# NGINX Fault Notification Script

HOSTNAME=$(hostname)

VIP="192.168.1.100"

LOG_FILE="/var/log/nginx/ha-events.log"

log_event() {

echo "[$(date '+%Y-%m-%d %H:%M:%S')] $1" | tee -a "$LOG_FILE"

}

log_event "FAULT: $HOSTNAME entered FAULT state for VIP $VIP"

# Try to restart NGINX

log_event "Attempting to restart NGINX service"

systemctl restart nginx

if systemctl is-active --quiet nginx; then

log_event "NGINX restart successful"

else

log_event "NGINX restart failed"

fi

log_event "Fault handling completed"

# Make executable: sudo chmod +x /usr/local/bin/nginx-fault-notify.sh

Shared NGINX Configuration
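
Both nodes must serve the same configuration, so it helps to have a quick way to confirm that they do. The sketch below computes one fingerprint for a configuration directory from the relative paths and contents of its regular files. The function name config_fingerprint is an assumption for illustration, and symlinks (as used in sites-enabled) are skipped, so it is best pointed at /etc/nginx/sites-available.

```shell
#!/bin/bash
# config_fingerprint DIR
# Prints a single SHA-256 digest covering every regular file under DIR.
# Paths are relative to DIR, so two nodes with identical contents print
# the same digest.
config_fingerprint() {
    local dir="$1"
    (
        cd "$dir" || exit 1
        find . -type f -print0 | sort -z | xargs -0 -r sha256sum | sha256sum | awk '{ print $1 }'
    )
}

# Example: config_fingerprint /etc/nginx/sites-available
```

Running the same function on each node and comparing the two digests shows whether the configurations have drifted apart before a failover exposes the difference.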

# Create identical NGINX configuration on both nodes

sudo nano /etc/nginx/sites-available/ha-cluster.example.com

# High Availability NGINX Configuration

upstream backend_cluster {

server 192.168.1.10:8080 max_fails=3 fail_timeout=30s;

server 192.168.1.11:8080 max_fails=3 fail_timeout=30s;

server 192.168.1.12:8080 max_fails=3 fail_timeout=30s;

server 192.168.1.13:8080 backup;

keepalive 32;

}

# Server listening on VIP

server {

listen 192.168.1.100:80; # VIP address

listen 80; # All interfaces

server_name ha-cluster.example.com;

# Health check endpoint for Keepalived

location /health {

access_log off;

# Set the type with default_type; add_header would append a second Content-Type header

default_type text/plain;

return 200 "OK\n";

add_header X-Server-Name $hostname always;

add_header X-Server-IP $server_addr always;

}

# Main application

location / {

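proxy_pass http://backend_cluster;

# Required for the upstream keepalive connection pool to be used

proxy_http_version 1.1;

proxy_set_header Connection "";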

# Standard proxy headers

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

# HA-specific headers

proxy_set_header X-HA-Node $hostname;

proxy_set_header X-HA-VIP $server_addr;

# Failover settings

proxy_next_upstream error timeout http_500 http_502 http_503 http_504;

proxy_next_upstream_tries 3;

proxy_next_upstream_timeout 30s;

# Timeouts

proxy_connect_timeout 5s;

proxy_send_timeout 10s;

proxy_read_timeout 10s;

# Response headers

add_header X-Served-By $hostname always;

add_header X-Upstream-Server $upstream_addr always;

}

# Status endpoint

location /nginx-status {

stub_status on;

access_log off;

allow 127.0.0.1;

allow 192.168.1.0/24;

deny all;

add_header X-Server-Name $hostname always;

}

}

Configuration Synchronization

graph TD

A[Config Management] --> B[Git Repository]

A --> C[Rsync Sync]

A --> D[Shared Storage]

B --> E[Version Control<br/>Change Tracking<br/>Rollback]

C --> F[Real-time Sync<br/>Automated Deploy<br/>Consistency]

D --> G[NFS/GlusterFS<br/>Central Storage<br/>Shared Access]

H[Sync Methods] --> I[Push-based]

H --> J[Pull-based]

H --> K[Event-driven]

style A fill:#e1f5fe

style H fill:#e8f5e8

style E fill:#fff3e0

style F fill:#e3f2fd

style G fill:#e8f5e8
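
Whichever sync method is chosen, it is safer to validate the configuration on the source node before anything is pushed, so that a broken file never reaches the other node. The sketch below shows that guard in isolation. The function name guarded_sync is an assumption, and both commands are passed in as strings so the flow can be rehearsed without NGINX or rsync installed.

```shell
#!/bin/bash
# guarded_sync VALIDATE_CMD SYNC_CMD
# Runs SYNC_CMD only if VALIDATE_CMD succeeds. Returns 1 without syncing
# when validation fails; otherwise returns the status of SYNC_CMD.
guarded_sync() {
    local validate="$1" sync="$2"
    if ! bash -c "$validate" > /dev/null 2>&1; then
        echo "Local validation failed; nothing was pushed" >&2
        return 1
    fi
    bash -c "$sync"
}

# Intended use on a real node (run as root):
#   guarded_sync "nginx -t" "/usr/local/bin/nginx-config-sync.sh"
```

The design choice is simply ordering: the cheap local check runs first, and the push to the other node happens only after it passes.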

# Create configuration sync script

sudo nano /usr/local/bin/nginx-config-sync.sh

#!/bin/bash

# NGINX Configuration Sync Script

PRIMARY_NODE="192.168.1.101"

SECONDARY_NODE="192.168.1.102"

CONFIG_DIRS=(

"/etc/nginx/sites-available"

"/etc/nginx/sites-enabled"

"/etc/nginx/snippets"

)

sync_configs() {

local source_node="$1"

local target_node="$2"

echo "Syncing configs from $source_node to $target_node"

for config_dir in "${CONFIG_DIRS[@]}"; do

rsync -avz --delete \

"$config_dir/" \

"root@$target_node:$config_dir/" \

--exclude="*.tmp"

if [ $? -eq 0 ]; then

echo "Successfully synced $config_dir"

else

echo "Failed to sync $config_dir"

return 1

fi

done

# Test config on target

ssh "root@$target_node" "nginx -t"

if [ $? -eq 0 ]; then

ssh "root@$target_node" "systemctl reload nginx"

echo "Config sync completed"

else

echo "Config test failed on $target_node"

return 1

fi

}

# Sync based on current node

CURRENT_IP=$(hostname -I | awk '{print $1}')

case "$CURRENT_IP" in

"$PRIMARY_NODE")

sync_configs "$PRIMARY_NODE" "$SECONDARY_NODE"

;;

"$SECONDARY_NODE")

sync_configs "$SECONDARY_NODE" "$PRIMARY_NODE"

;;

*)

echo "Unknown node IP: $CURRENT_IP"

exit 1

;;

esac

# Make executable: sudo chmod +x /usr/local/bin/nginx-config-sync.sh

HA Monitoring
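
The monitoring script in this section decides whether a node holds the VIP by searching the output of ip addr show for the address as a substring. That works for 192.168.1.100, but a shorter address such as 192.168.1.10 would also match 192.168.1.100. The sketch below shows an exact-match alternative that parses the one-line format of ip -o -4 addr show. The function name has_exact_ip is an assumption for illustration.

```shell
#!/bin/bash
# has_exact_ip ADDRESS
# Reads `ip -o -4 addr show` style output on stdin and succeeds only if
# ADDRESS is assigned exactly (the prefix length after "/" is ignored).
has_exact_ip() {
    local want="$1"
    awk -v want="$want" '
        {
            for (i = 1; i < NF; i++) {
                if ($i == "inet") {
                    split($(i + 1), parts, "/")
                    if (parts[1] == want) found = 1
                }
            }
        }
        END { exit (found ? 0 : 1) }'
}

# Intended use on a node:
#   ip -o -4 addr show | has_exact_ip "192.168.1.100" && echo "This node holds the VIP"
```

Because the function reads from standard input, it can be checked against captured output without touching the network configuration.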

# Create HA monitoring script

sudo nano /usr/local/bin/nginx-ha-monitor.sh

#!/bin/bash

# NGINX HA Monitor

VIP="192.168.1.100"

show_ha_status() {

echo "=== NGINX High Availability Status ==="

echo "Generated: $(date)"

echo

# VIP status

echo "--- Virtual IP Status ---"

if ip addr show | grep -q "$VIP"; then

echo "✅ This node ($(hostname)) has VIP $VIP"

echo "Status: MASTER"

else

echo "⚪ This node ($(hostname)) does not have VIP $VIP"

echo "Status: BACKUP"

fi

echo

# Keepalived status

echo "--- Keepalived Status ---"

if systemctl is-active --quiet keepalived; then

echo "✅ Keepalived is running"

else

echo "❌ Keepalived is not running"

fi

echo

# NGINX status

echo "--- NGINX Status ---"

if systemctl is-active --quiet nginx; then

echo "✅ NGINX is running"

# Test VIP connectivity

if curl -f -s --max-time 3 "http://$VIP/health" > /dev/null 2>&1; then

echo "✅ VIP health check passed"

else

echo "❌ VIP health check failed"

fi

else

echo "❌ NGINX is not running"

fi

echo

# Recent events

echo "--- Recent HA Events ---"

tail -5 /var/log/nginx/ha-events.log 2>/dev/null || echo "No recent events"

}

force_failover() {

echo "Forcing failover by stopping Keepalived..."

systemctl stop keepalived

echo "Keepalived stopped. Failover should occur within seconds."

}

case "${1:-status}" in

status)

show_ha_status

;;

failover)

force_failover

;;

watch)

while true; do

clear

show_ha_status

echo "Press Ctrl+C to exit..."

sleep 5

done

;;

*)

echo "Usage: $0 {status|failover|watch}"

;;

esac

# Make executable: sudo chmod +x /usr/local/bin/nginx-ha-monitor.sh

Testing HA Setup

# Setup and testing commands

# 1. Enable sites on both nodes

sudo ln -s /etc/nginx/sites-available/ha-cluster.example.com /etc/nginx/sites-enabled/

# 2. Test config on both nodes

sudo nginx -t

# 3. Start services on both nodes

sudo systemctl enable nginx keepalived

sudo systemctl start nginx keepalived

# 4. Check HA status

/usr/local/bin/nginx-ha-monitor.sh status

# 5. Test VIP connectivity

ping -c 3 192.168.1.100

curl http://192.168.1.100/health

# 6. Test failover - on primary node:

sudo systemctl stop keepalived

# Check that the VIP moves to the secondary, then restore the primary: sudo systemctl start keepalived

# 7. Test service failover - on master:

sudo systemctl stop nginx

# Check that Keepalived detects the failure and the VIP moves, then restore: sudo systemctl start nginx

# 8. Monitor events

tail -f /var/log/nginx/ha-events.log

# 9. Watch status

/usr/local/bin/nginx-ha-monitor.sh watch

# 10. Test config sync

/usr/local/bin/nginx-config-sync.sh

What’s Next?

Excellent! You’ve implemented a high availability NGINX cluster with automatic failover, health monitoring, and configuration synchronization. If the active node fails, the VIP moves to the standby within a few seconds, so clients see a brief interruption at most instead of an outage.

Coming up in Part 16: NGINX Performance Tuning and Optimization

This is Part 15 of our 22-part NGINX series. Your server now has enterprise-grade high availability! Next, we’ll fine-tune performance. Questions? Share them in the comments!