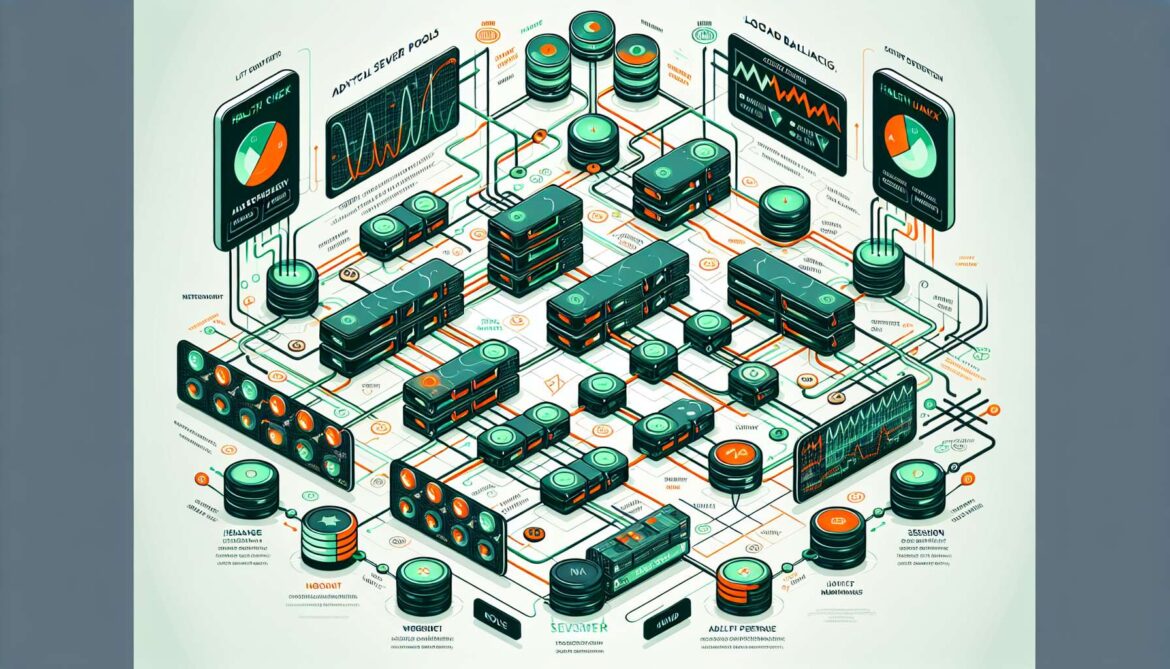

Welcome to Part 7A of our comprehensive NGINX on Ubuntu series! Building on our reverse proxy foundation, we’ll now explore load balancing methods and strategies to distribute traffic efficiently across multiple backend servers.

Understanding Load Balancing

Load balancing distributes incoming requests across multiple backend servers, improving performance, reliability, and scalability. NGINX offers several algorithms, each suited for different scenarios.

graph TD

A[Client Requests] --> B[NGINX Load Balancer]

B --> C{Load Balancing Algorithm}

C -->|Round Robin| D[Server 1]

C -->|Round Robin| E[Server 2]

C -->|Round Robin| F[Server 3]

C -->|Round Robin| G[Server 4]

H[Benefits] --> I[High Availability]

H --> J[Better Performance]

H --> K[Scalability]

H --> L[Fault Tolerance]

style B fill:#e1f5fe

style C fill:#fff3e0

style D fill:#e8f5e8

style E fill:#e8f5e8

style F fill:#e8f5e8

style G fill:#e8f5e8

Load Balancing Methods

1. Round Robin (Default)

Distributes requests evenly across all servers in sequential order. Best for servers with similar specifications.
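The rotation itself can be sketched in a few lines of shell. This is only an illustration of sequential selection with equal weights and no failed servers, not NGINX's internal implementation; the function name `round_robin_pick` is hypothetical.

```bash
# Hypothetical helper: print the backend that request number $1 (0-based)
# reaches when rotating in order over the backends given as the other arguments.
round_robin_pick() {
    request_no=$1
    shift                            # drop the request number; "$@" is now the backend list
    shift $(( request_no % $# ))     # skip ahead to the backend whose turn it is
    printf '%s\n' "$1"
}
```

For example, `round_robin_pick 4 192.168.1.10:3000 192.168.1.11:3000 192.168.1.12:3000` prints the second backend, because the fifth request wraps around after the first three.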

# Round robin load balancing (default behavior)

upstream backend_roundrobin {

server 192.168.1.10:3000;

server 192.168.1.11:3000;

server 192.168.1.12:3000;

server 192.168.1.13:3000;

# Keep alive connections for better performance

keepalive 32;

}

2. Least Connections

Routes requests to the server with the fewest active connections. Ideal for applications with long-running requests.
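As a rough illustration of the selection rule, the sketch below picks the backend with the fewest active connections from a list of `backend count` pairs. It ignores server weights and tie-breaking, which NGINX also considers; the function name `least_conn_pick` and the input format are assumptions made for this example.

```bash
# Hypothetical helper: read "backend active_connections" pairs on stdin
# and print the backend with the fewest active connections.
# On a tie, the first backend listed wins.
least_conn_pick() {
    awk 'NR == 1 || $2 + 0 < min { min = $2 + 0; best = $1 } END { print best }'
}
```

Feeding it three lines such as `192.168.1.10:3000 12`, `192.168.1.11:3000 4`, and `192.168.1.12:3000 9` selects `192.168.1.11:3000`, the server that is least busy at that moment.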

# Least connections - ideal for long-running requests

upstream backend_leastconn {

least_conn;

server 192.168.1.10:3000;

server 192.168.1.11:3000;

server 192.168.1.12:3000;

server 192.168.1.13:3000;

keepalive 32;

}

3. IP Hash (Session Persistence)

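Uses the client IP address as a hashing key to choose the server, so requests from the same client reach the same server for as long as that server is available. For IPv4 addresses, only the first three octets are used as the key.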
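One consequence is worth seeing concretely: because `ip_hash` keys IPv4 clients on the first three octets of the address, every client in the same /24 network lands on the same backend. The sketch below only extracts that key; it does not reproduce NGINX's hash function, and the name `ip_hash_key` is hypothetical.

```bash
# Hypothetical helper: print the part of an IPv4 address that ip_hash
# uses as its hashing key (the first three octets).
ip_hash_key() {
    # Strip the last dot and everything after it
    printf '%s\n' "${1%.*}"
}
```

Both `ip_hash_key 203.0.113.7` and `ip_hash_key 203.0.113.200` yield the same key, so a large office behind one address range can concentrate its traffic on a single server.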

# IP hash - ensures same client always goes to same server

upstream backend_iphash {

ip_hash;

server 192.168.1.10:3000;

server 192.168.1.11:3000;

server 192.168.1.12:3000;

server 192.168.1.13:3000;

}

# Alternative: Hash with custom key

upstream backend_customhash {

hash $request_uri consistent; # Can also use $cookie_sessionid

server 192.168.1.10:3000;

server 192.168.1.11:3000;

server 192.168.1.12:3000;

server 192.168.1.13:3000;

}

4. Weighted Distribution

Assigns different weights to servers based on their capacity, sending more traffic to higher-spec servers.
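To get a feel for what a set of weights means in practice, each server's expected share of traffic is its weight divided by the sum of all weights. The sketch below does that arithmetic; the function name `weight_shares` is hypothetical, and the result describes the long-run average rather than any short burst of requests.

```bash
# Hypothetical helper: print each weight's expected share of requests.
weight_shares() {
    total=0
    for w in "$@"; do
        total=$(( total + w ))
    done
    for w in "$@"; do
        awk -v w="$w" -v t="$total" \
            'BEGIN { printf "weight=%d -> %.1f%%\n", w, 100 * w / t }'
    done
}
```

With weights of 5, 3, 2, and 1, `weight_shares 5 3 2 1` shows that the first server should receive 5 of every 11 requests on average, or about 45.5%.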

# Weighted distribution based on server capacity

upstream backend_weighted {

# High-performance server gets more traffic

server 192.168.1.10:3000 weight=5;

server 192.168.1.11:3000 weight=3;

server 192.168.1.12:3000 weight=2;

# Low-spec server gets less traffic

server 192.168.1.13:3000 weight=1;

keepalive 32;

}

5. Random Load Balancing

Randomly selects servers, with optional bias toward least connected servers (NGINX 1.15.1+).
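The "pick two at random, keep the less busy one" idea can be sketched as follows. This is a simplified model that ignores weights; the function name `random_two_pick`, the `backend count` input format, and the optional seed argument are assumptions made for this example.

```bash
# Hypothetical helper: read "backend active_connections" pairs on stdin,
# choose two distinct backends at random, and print the one with fewer
# active connections. An optional first argument seeds the random generator.
random_two_pick() {
    awk -v seed="${1:-$$}" '
        { name[NR] = $1; conns[NR] = $2 + 0 }
        END {
            if (NR == 0) exit 1
            srand(seed)
            a = int(rand() * NR) + 1
            b = a
            # Redraw until the second candidate differs from the first
            while (NR > 1 && b == a) b = int(rand() * NR) + 1
            pick = (conns[b] < conns[a]) ? b : a
            print name[pick]
        }'
}
```

Comparing only two candidates avoids scanning every server while still steering traffic away from the busiest ones.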

# Random selection (NGINX 1.15.1+)

upstream backend_random {

random two least_conn; # Pick 2 random servers, choose least connected

server 192.168.1.10:3000;

server 192.168.1.11:3000;

server 192.168.1.12:3000;

server 192.168.1.13:3000;

}

Server Parameters and Options

NGINX provides various server parameters to control load balancing behavior and handle server failures.

# Advanced server configuration with parameters

upstream backend_advanced {

least_conn;

# Primary servers with health monitoring

server 192.168.1.10:3000 weight=3 max_fails=3 fail_timeout=30s;

server 192.168.1.11:3000 weight=3 max_fails=3 fail_timeout=30s;

# Secondary server with different settings

server 192.168.1.12:3000 weight=2 max_fails=2 fail_timeout=20s;

# Backup server (only used when all others fail)

server 192.168.1.13:3000 backup;

# Server in maintenance (temporarily disabled)

server 192.168.1.14:3000 down;

# Connection settings

keepalive 32;

keepalive_requests 100;

keepalive_timeout 60s;

}

Server Parameter Explanation

- weight=N: Relative weight for load distribution (default: 1)

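- max_fails=N: Number of failed attempts within fail_timeout before the server is marked unavailable (default: 1; 0 disables the check)

- fail_timeout=time: Window in which max_fails failures are counted, and also how long the server is then considered unavailable (default: 10s)

- backup: Server only used when all primary servers are down

- down: Marks the server as unavailable until the parameter is removed from the configuration

- slow_start=time: Gradually increase traffic to a recovering server (available in NGINX Plus only)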

Creating Load Balanced Virtual Hosts

# Complete load balanced virtual host

sudo nano /etc/nginx/sites-available/loadbalanced.example.com

upstream web_backend {

least_conn;

server 192.168.1.10:3000 weight=3 max_fails=3 fail_timeout=30s;

server 192.168.1.11:3000 weight=3 max_fails=3 fail_timeout=30s;

server 192.168.1.12:3000 weight=2 max_fails=2 fail_timeout=20s;

server 192.168.1.13:3000 backup;

keepalive 32;

}

server {

listen 80;

server_name loadbalanced.example.com;

location / {

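proxy_pass http://web_backend;

# Needed so the upstream keepalive connections are actually reused

proxy_http_version 1.1;

proxy_set_header Connection "";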

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

# Load balancer timeouts

proxy_connect_timeout 5s;

proxy_send_timeout 10s;

proxy_read_timeout 10s;

# Add load balancer headers for debugging

add_header X-Upstream-Server $upstream_addr;

add_header X-Upstream-Response-Time $upstream_response_time;

}

# Health check endpoint

location /lb-health {

access_log off;

# Set the content type before returning the response body

default_type text/plain;

return 200 "Load balancer is healthy\n";

}

}

Session Persistence Strategies

graph TD

A[Client with Session] --> B[Load Balancer]

B --> C{Persistence Method}

C -->|IP Hash| D[Hash Client IP<br/>Same Server Always]

C -->|Cookie Hash| E[Hash Session Cookie<br/>Consistent Routing]

C -->|URI Hash| F[Hash Request URI<br/>Cache-friendly]

D --> G[Server Pool]

E --> G

F --> G

H[Session Storage] --> I[Shared Redis]

H --> J[Database Sessions]

H --> K[Sticky Sessions]

style B fill:#e1f5fe

style C fill:#fff3e0

style G fill:#e8f5e8

style H fill:#e8f5e8

Cookie-Based Session Persistence

# Session persistence using cookies

upstream backend_sessions {

hash $cookie_sessionid consistent;

server 192.168.1.10:3000;

server 192.168.1.11:3000;

server 192.168.1.12:3000;

server 192.168.1.13:3000;

}

server {

listen 80;

server_name sessions.example.com;

location / {

proxy_pass http://backend_sessions;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

# Ensure session cookie is passed

proxy_set_header Cookie $http_cookie;

}

}

User-Type Based Routing

# Route based on user type header

map $http_x_user_type $backend_pool {

default backend_general;

"premium" backend_premium;

"api" backend_api;

"admin" backend_admin;

}

upstream backend_general {

server 192.168.1.10:3000;

server 192.168.1.11:3000;

}

upstream backend_premium {

server 192.168.1.20:3000;

server 192.168.1.21:3000;

}

upstream backend_api {

server 192.168.1.30:3000;

server 192.168.1.31:3000;

}

upstream backend_admin {

server 192.168.1.40:3000;

}

server {

listen 80;

server_name routed.example.com;

location / {

proxy_pass http://$backend_pool;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

}

Testing Load Balancing
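Before testing the user-type routing shown earlier, it can help to predict which pool a given `X-User-Type` value should reach. The sketch below mirrors the `map` block in a hypothetical shell function, `expected_pool`. It lowercases the value first on the understanding that `map` compares plain strings without regard to case, which is worth confirming against the NGINX documentation for your version.

```bash
# Hypothetical helper: print the upstream pool that the map block
# would select for the X-User-Type header value given as $1.
expected_pool() {
    # Lowercase the header value so the comparison ignores case
    user_type=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
    case "$user_type" in
        premium) echo "backend_premium" ;;
        api)     echo "backend_api" ;;
        admin)   echo "backend_admin" ;;
        *)       echo "backend_general" ;;   # mirrors the map's default entry
    esac
}
```

A request sent with `-H "X-User-Type: premium"` should then be answered by one of the servers in `backend_premium`, while a request without the header falls through to `backend_general`.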

# Enable the load balanced site

sudo ln -s /etc/nginx/sites-available/loadbalanced.example.com /etc/nginx/sites-enabled/

# Test configuration

sudo nginx -t

# Reload NGINX

sudo systemctl reload nginx

# Add to hosts file for testing

echo "127.0.0.1 loadbalanced.example.com" | sudo tee -a /etc/hosts

# Test request distribution (assumes each backend prints "Server: <its IP>" in the response body)

for i in {1..10}; do

curl -s -H "Host: loadbalanced.example.com" http://localhost/ | grep -o "Server: [0-9.]*"

done

# Test with session persistence

for i in {1..5}; do

curl -s -H "Host: sessions.example.com" -H "Cookie: sessionid=user123" http://localhost/ | grep -o "Server: [0-9.]*"

done

# Load testing with Apache Bench

ab -n 1000 -c 10 -H "Host: loadbalanced.example.com" http://localhost/

Monitoring Load Distribution
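One quick way to see how requests are actually being spread is to count the values of the `X-Upstream-Server` header that the load balanced virtual host adds to each response. The two functions below are a minimal sketch with hypothetical names: `sample_upstreams` sends a number of requests and prints the header value from each response, and `tally_upstreams` counts how often each value appears.

```bash
# Hypothetical helper: send $1 requests and print the X-Upstream-Server
# header value from each response, one per line.
sample_upstreams() {
    i=0
    while [ "$i" -lt "$1" ]; do
        curl -s -o /dev/null -D - -H "Host: loadbalanced.example.com" http://localhost/ \
            | tr -d '\r' \
            | awk 'tolower($1) == "x-upstream-server:" { print $2 }'
        i=$(( i + 1 ))
    done
}

# Hypothetical helper: count identical lines on stdin, most frequent first.
tally_upstreams() {
    sort | uniq -c | sort -rn
}
```

Running `sample_upstreams 20 | tally_upstreams` prints one line per backend with its request count. If a request was retried on another server, the header may list more than one address, so treat the counts as a rough guide.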

# Create simple load monitoring script

sudo nano /usr/local/bin/monitor-load.sh

#!/bin/bash

# Simple load balancing monitor

echo "=== Load Balancing Monitor ==="

echo "Timestamp: $(date)"

echo

# Check backend server responses

SERVERS=("192.168.1.10:3000" "192.168.1.11:3000" "192.168.1.12:3000")

for server in "${SERVERS[@]}"; do

if curl -f -s --max-time 3 "http://$server/health" > /dev/null 2>&1; then

echo "✅ $server - Healthy"

else

echo "❌ $server - Unhealthy"

fi

done

echo

echo "Active connections:"

netstat -an | grep :80 | grep ESTABLISHED | wc -l

# Make executable

# sudo chmod +x /usr/local/bin/monitor-load.sh

Common Load Balancing Scenarios

Scenario 1: Web Application with Database

# Web tier load balancing

upstream web_tier {

least_conn;

server 192.168.1.10:3000 weight=2;

server 192.168.1.11:3000 weight=2;

server 192.168.1.12:3000 weight=1 backup;

}

# API tier load balancing

upstream api_tier {

# Round robin is the default method, so no balancing directive is needed here

server 192.168.1.20:4000;

server 192.168.1.21:4000;

server 192.168.1.22:4000;

}

Scenario 2: Microservices Architecture

# User service

upstream user_service {

server 192.168.1.30:3001;

server 192.168.1.31:3001;

}

# Order service

upstream order_service {

server 192.168.1.40:3002;

server 192.168.1.41:3002;

}

# Payment service

upstream payment_service {

server 192.168.1.50:3003;

server 192.168.1.51:3003;

}

What’s Next?

Great! You’ve learned the fundamental load balancing methods and how to configure them in NGINX. You can now distribute traffic across multiple servers using different algorithms based on your needs.

Coming up in Part 7B: Advanced Health Checks, Monitoring, and Failover Strategies

This is Part 7A of our 22-part NGINX series. You’re mastering load balancing fundamentals! Part 7B will cover advanced health checks and monitoring. Questions? Share them in the comments!