- Real-Time WebSocket Architecture Series: Part 1 – Understanding WebSocket Fundamentals

- Real-Time WebSocket Architecture Series: Part 2 – Building Your First WebSocket Server (Node.js)

- Real-Time WebSocket Architecture Series: Part 3 – Essential Features (Rooms, Namespaces & Events)

- Real-Time WebSocket Architecture Series: Part 4 – Authentication & Security

- Real-Time WebSocket Architecture Series: Part 5 – Scaling with Redis

- Real-Time WebSocket Architecture Series: Part 6 – Production-Grade Features

- Real-Time WebSocket Architecture Series: Part 7 – Serverless WebSocket Implementation with AWS Lambda

- Real-Time WebSocket Architecture Series: Part 8 – Edge Computing WebSockets with Ultra-Low Latency

Welcome to Part 5! We’ve built a secure WebSocket application, but what happens when you need to handle 10,000+ concurrent users? A single server won’t cut it. This guide covers horizontal scaling using Redis, load balancing strategies, and sticky sessions.

The Scaling Challenge

As a rough rule of thumb, a single Node.js server handles around 5,000-10,000 concurrent WebSocket connections; the real limit depends on message rate, payload size, and available memory. Beyond that, you’ll experience:

- Memory exhaustion: Each connection consumes memory

- CPU bottlenecks: Event loop blocking

- Network saturation: Bandwidth limits

- Single point of failure: No redundancy

Solution: Horizontal scaling with multiple servers coordinated through Redis.
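To get a feel for how many instances a target load needs, here is a back-of-the-envelope sketch. The function name, the 30% headroom, and the single spare instance are illustrative assumptions, not measured values; plug in numbers from your own load tests.

```javascript
// Rough capacity planning: how many Socket.IO instances for a target load?
// Every default here is an assumption for illustration, not a benchmark.
function estimateInstances({
  targetConnections,
  perInstanceCapacity,
  headroomPercent = 30, // keep each instance below its limit for spikes
  spareInstances = 1    // extra nodes so losing one does not overload the rest
}) {
  if (perInstanceCapacity <= 0) {
    throw new RangeError('perInstanceCapacity must be positive');
  }
  // Integer math avoids floating-point surprises in the percentage.
  const usable = Math.floor((perInstanceCapacity * (100 - headroomPercent)) / 100);
  const needed = Math.ceil(targetConnections / usable);
  return needed + spareInstances;
}

// 10,000 users, 5,000 connections per instance at most:
// usable capacity is 3,500, so 3 instances carry the load, plus 1 spare.
console.log(estimateInstances({ targetConnections: 10000, perInstanceCapacity: 5000 })); // 4
```

The spare instance is what addresses the single point of failure: the cluster keeps working when one node goes down.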

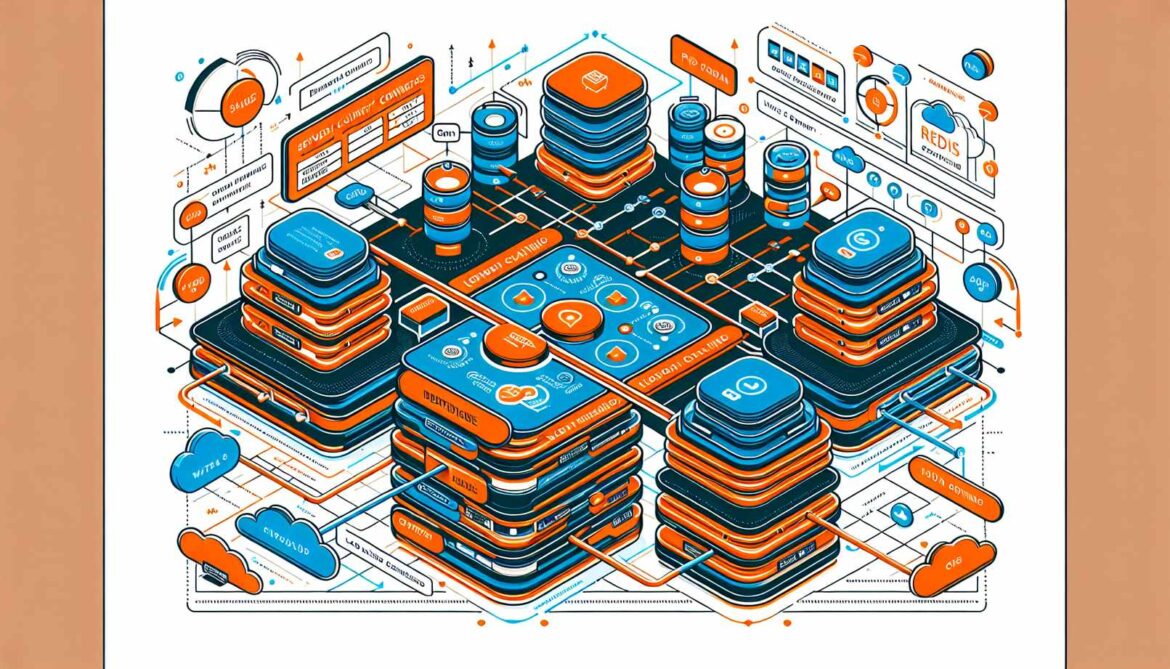

Architecture Overview

graph TB

Client1[Client 1]

Client2[Client 2]

Client3[Client 3]

Client4[Client 4]

LB["Load Balancer (Sticky Sessions)"]

Server1["Socket.IO Server 1 (Port 3001)"]

Server2["Socket.IO Server 2 (Port 3002)"]

Server3["Socket.IO Server 3 (Port 3003)"]

Redis[("Redis Pub/Sub")]

Client1 --> LB

Client2 --> LB

Client3 --> LB

Client4 --> LB

LB -->|Session Affinity| Server1

LB -->|Session Affinity| Server2

LB -->|Session Affinity| Server3

Server1 <-->|Pub/Sub| Redis

Server2 <-->|Pub/Sub| Redis

Server3 <-->|Pub/Sub| Redis

style LB fill:#4299e1,color:#fff

style Redis fill:#dc2626,color:#fff

style Server1 fill:#10b981,color:#fff

style Server2 fill:#10b981,color:#fff

style Server3 fill:#10b981,color:#fff

How It Works

- Load Balancer distributes incoming connections across multiple servers

- Sticky Sessions ensure that every request from a given client reaches the same server

- Redis Adapter synchronizes events across all servers using Pub/Sub

- Message Broadcasting works seamlessly across the cluster
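The flow above can be mimicked in a few lines without Redis or Socket.IO. This is a toy model, not the adapter's real implementation: `FakeBus` and `FakeServer` are hypothetical stand-ins for Redis Pub/Sub and a Socket.IO instance, and the only point is to show how a broadcast on one server reaches clients connected to another.

```javascript
const { EventEmitter } = require('events');

// Stand-in for Redis Pub/Sub: every subscriber sees every published message.
class FakeBus extends EventEmitter {
  publish(channel, payload) { this.emit(channel, payload); }
  subscribe(channel, handler) { this.on(channel, handler); }
}

// Stand-in for one Socket.IO instance and its locally connected clients.
class FakeServer {
  constructor(id, bus) {
    this.id = id;
    this.bus = bus;
    this.clients = new Map(); // clientId -> messages received so far
    bus.subscribe('broadcast', ({ origin, event, data }) => {
      // Ignore our own publications: local clients already got them.
      if (origin !== this.id) this.deliverLocally(event, data);
    });
  }

  connect(clientId) { this.clients.set(clientId, []); }

  deliverLocally(event, data) {
    for (const inbox of this.clients.values()) inbox.push({ event, data });
  }

  // Rough equivalent of io.emit(): local delivery, then fan-out via the bus.
  emitToAll(event, data) {
    this.deliverLocally(event, data);
    this.bus.publish('broadcast', { origin: this.id, event, data });
  }
}

const bus = new FakeBus();
const s1 = new FakeServer('server-1', bus);
const s2 = new FakeServer('server-2', bus);
s1.connect('alice');
s2.connect('bob');

s1.emitToAll('message', 'Hello');
console.log(s2.clients.get('bob')); // [ { event: 'message', data: 'Hello' } ]
```

Bob is connected to server-2, yet he receives a message emitted on server-1. Alice gets exactly one copy because server-1 skips messages it published itself.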

Implementation

Step 1: Install Redis Adapter

npm install @socket.io/redis-adapter redis

Step 2: Setup Redis Connection

// redis/client.js

const { createClient } = require('redis');

class RedisClient {

static async createPubSubClients() {

const pubClient = createClient({

url: process.env.REDIS_URL || 'redis://localhost:6379',

socket: {

reconnectStrategy: (retries) => {

if (retries > 10) {

return new Error('Too many retries');

}

return retries * 100;

}

}

});

const subClient = pubClient.duplicate();

// Error handling

pubClient.on('error', (err) => {

console.error('Redis Pub Client Error:', err);

});

subClient.on('error', (err) => {

console.error('Redis Sub Client Error:', err);

});

// Connect both clients

await Promise.all([

pubClient.connect(),

subClient.connect()

]);

console.log('✅ Redis clients connected');

return { pubClient, subClient };

}

}

module.exports = RedisClient;

Step 3: Update Server with Redis Adapter

// server.js

require('dotenv').config();

const express = require('express');

const http = require('http');

const { Server } = require('socket.io');

const { createAdapter } = require('@socket.io/redis-adapter');

const RedisClient = require('./redis/client');

const app = express();

const server = http.createServer(app);

// Server instance ID

const SERVER_ID = process.env.SERVER_ID || `server-${process.pid}`;

const PORT = process.env.PORT || 3000;

async function startServer() {

try {

// Create Redis clients

const { pubClient, subClient } = await RedisClient.createPubSubClients();

// Initialize Socket.io with Redis adapter

const io = new Server(server, {

adapter: createAdapter(pubClient, subClient),

cors: {

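// Fall back to a local origin so a missing ALLOWED_ORIGINS does not crash startup

origin: (process.env.ALLOWED_ORIGINS || 'http://localhost:3000').split(','),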

credentials: true

}

});

// Track connections on this server

let connectionCount = 0;

io.on('connection', (socket) => {

connectionCount++;

console.log(`[${SERVER_ID}] New connection: ${socket.id}`);

console.log(`[${SERVER_ID}] Total connections: ${connectionCount}`);

// Tell this client which server instance it is connected to

socket.emit('server-info', {

serverId: SERVER_ID,

timestamp: new Date().toISOString()

});

socket.on('message', (data) => {

// io.emit reaches clients on ALL servers: the Redis adapter relays it to the other instances

io.emit('message', {

serverId: SERVER_ID,

socketId: socket.id,

message: data.message,

timestamp: new Date().toISOString()

});

});

socket.on('disconnect', () => {

connectionCount--;

console.log(`[${SERVER_ID}] Disconnected: ${socket.id}`);

console.log(`[${SERVER_ID}] Total connections: ${connectionCount}`);

});

});

// Log room lifecycle events raised by this server's adapter

io.of('/').adapter.on('create-room', (room) => {

console.log(`[${SERVER_ID}] Room created: ${room}`);

});

io.of('/').adapter.on('join-room', (room, id) => {

console.log(`[${SERVER_ID}] Socket ${id} joined room: ${room}`);

});

server.listen(PORT, () => {

console.log(`✅ [${SERVER_ID}] Server running on port ${PORT}`);

});

} catch (error) {

console.error('Failed to start server:', error);

process.exit(1);

}

}

startServer();

Step 4: Environment Configuration

# .env

PORT=3001

SERVER_ID=server-1

REDIS_URL=redis://localhost:6379

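ALLOWED_ORIGINS=http://localhost:3000

Step 5: Run Multiple Instances

Start each instance in its own terminal, setting PORT and SERVER_ID on the command line (dotenv does not override variables that are already set, so these values take precedence over .env):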

# Terminal 1 - Server Instance 1

PORT=3001 SERVER_ID=server-1 node server.js

# Terminal 2 - Server Instance 2

PORT=3002 SERVER_ID=server-2 node server.js

# Terminal 3 - Server Instance 3

PORT=3003 SERVER_ID=server-3 node server.js

Load Balancing Strategies

Option 1: Nginx Load Balancer

# nginx.conf

http {

upstream socketio_nodes {

ip_hash; # Sticky sessions based on IP

server localhost:3001 max_fails=3 fail_timeout=30s;

server localhost:3002 max_fails=3 fail_timeout=30s;

server localhost:3003 max_fails=3 fail_timeout=30s;

}

server {

listen 80;

server_name your-domain.com;

location / {

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header Host $host;

proxy_pass http://socketio_nodes;

# WebSocket support

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

# Timeouts

proxy_connect_timeout 60s; # connecting to an upstream should be fast; only the send/read timeouts need to be long

proxy_send_timeout 7d;

proxy_read_timeout 7d;

}

}

}

Option 2: Node.js Cluster with Sticky Module

// cluster.js

const cluster = require('cluster');

const http = require('http');

const { Server } = require('socket.io');

const { setupMaster, setupWorker } = require('@socket.io/sticky');

const { createAdapter } = require('@socket.io/redis-adapter');

const RedisClient = require('./redis/client');

const numCPUs = require('os').cpus().length;

// cluster.isPrimary replaces the deprecated cluster.isMaster (Node.js 16+)

if (cluster.isPrimary) {

console.log(`Primary ${process.pid} is running`);

const httpServer = http.createServer();

// Setup sticky sessions

setupMaster(httpServer, {

loadBalancingMethod: 'least-connection'

});

httpServer.listen(3000);

// Fork workers

for (let i = 0; i < numCPUs; i++) {

cluster.fork();

}

cluster.on('exit', (worker) => {

console.log(`Worker ${worker.process.pid} died, forking new worker`);

cluster.fork();

});

} else {

console.log(`Worker ${process.pid} started`);

const httpServer = http.createServer();

(async () => {

const { pubClient, subClient } = await RedisClient.createPubSubClients();

const io = new Server(httpServer, {

adapter: createAdapter(pubClient, subClient)

});

setupWorker(io);

io.on('connection', (socket) => {

console.log(`[Worker ${process.pid}] Client connected: ${socket.id}`);

socket.on('message', (data) => {

io.emit('message', {

workerId: process.pid,

message: data

});

});

});

})();

}

Understanding Redis Pub/Sub

When you emit an event, here’s what happens:

sequenceDiagram

participant U1 as User 1 (Server 1)

participant S1 as Server 1

participant Redis

participant S2 as Server 2

participant S3 as Server 3

participant U2 as User 2 (Server 2)

participant U3 as User 3 (Server 3)

U1->>S1: Send "Hello"

S1->>S1: Emit to local clients

S1->>U1: Receive "Hello"

S1->>Redis: Publish message

Redis->>S2: Forward message

Redis->>S3: Forward message

S2->>U2: Receive "Hello"

S3->>U3: Receive "Hello"

Sticky Sessions Explained

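Why needed? Socket.IO keeps each session's state in the memory of the server that created it. With the HTTP long-polling transport, a single session spans several HTTP requests; if the load balancer sends one of them to a different server, that server does not recognize the session and rejects the request with HTTP 400 ("Session ID unknown"). Sticky sessions route every request from a client to the same server, so the state is always found.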
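A small simulation makes the failure mode concrete. This is a toy model under stated assumptions: `Instance` and `roundRobin` are hypothetical helpers, and the 400 response only mirrors the "Session ID unknown" error Socket.IO returns when a request reaches a server that did not create the session.

```javascript
// Each instance only knows the sessions it created (kept in process memory).
class Instance {
  constructor(name) {
    this.name = name;
    this.sessions = new Set();
  }

  // First request of a session: the server creates and remembers it.
  handshake(sid) {
    this.sessions.add(sid);
    return { status: 200, server: this.name };
  }

  // Follow-up request (for example a long-polling GET) for an existing session.
  poll(sid) {
    return this.sessions.has(sid)
      ? { status: 200, server: this.name }
      : { status: 400, server: this.name, error: 'Session ID unknown' };
  }
}

// Load balancer WITHOUT session affinity: plain round-robin.
function roundRobin(instances) {
  let next = 0;
  return () => instances[next++ % instances.length];
}

const instances = [new Instance('server-1'), new Instance('server-2')];
const pick = roundRobin(instances);

const first = pick().handshake('abc123'); // lands on server-1
const second = pick().poll('abc123');     // lands on server-2, which never saw the session

console.log(first.status, second.status, second.error); // 200 400 Session ID unknown
```

With session affinity, both requests would reach server-1 and the second one would succeed.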

Sticky Session Methods

- IP Hash: Route based on client IP (simple, but all clients behind the same proxy or NAT land on one server)

- Cookie-based: Use a session cookie (reliable)

- Connection ID: Route based on Socket.IO session ID (most reliable)
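All three methods boil down to the same idea: derive a stable key from the request and map it to one upstream. Here is a minimal sketch of that mapping. The `pickUpstream` helper and the SHA-256 hash are assumptions chosen for illustration; Nginx's `ip_hash` uses its own hash function (and only the first three octets of an IPv4 address), so real routing decisions will differ.

```javascript
const crypto = require('crypto');

// Same upstream list as in the Nginx example.
const upstreams = ['localhost:3001', 'localhost:3002', 'localhost:3003'];

// Map a stable key (client IP, cookie value, or session ID) to one upstream.
// The same key always produces the same digest, hence the same server.
function pickUpstream(key, servers = upstreams) {
  const digest = crypto.createHash('sha256').update(key).digest();
  return servers[digest.readUInt32BE(0) % servers.length];
}

const ip = '203.0.113.7';
console.log(pickUpstream(ip) === pickUpstream(ip)); // true: repeat requests stay on one server
```

One caveat of plain modulo hashing: changing the number of upstreams remaps most keys, so existing clients may be moved to a different server and have to reconnect.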

Monitoring and Health Checks

// monitoring.js

class MonitoringService {

static setupHealthChecks(io) {

const adapter = io.of('/').adapter;

// Monitor Redis connection

adapter.on('error', (err) => {

console.error('Adapter error:', err);

// Alert your monitoring system

});

// Get server metrics

setInterval(async () => {

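// io.local limits the query to this instance; plain io.fetchSockets() would return sockets from the whole cluster

const sockets = await io.local.fetchSockets();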

const rooms = adapter.rooms;

console.log({

serverId: process.env.SERVER_ID,

connections: sockets.length,

rooms: rooms.size,

timestamp: new Date().toISOString()

});

}, 30000); // Every 30 seconds

}

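static async getClusterStats(io) {

// With the Redis adapter, fetchSockets() returns sockets from every server

const sockets = await io.fetchSockets();

// Each server must answer the event, e.g. io.on('getStats', (cb) => cb({ serverId }))

// serverSideEmitWithAck (Socket.IO 4.6+) resolves with the replies of the other servers

const responses = await io.serverSideEmitWithAck('getStats');

return {

totalConnections: sockets.length,

serversOnline: responses.length + 1 // replies from the other servers, plus this one

};

}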

}

module.exports = MonitoringService;

Performance Optimization

1. Message Size Limits

const io = new Server(server, {

maxHttpBufferSize: 1e6, // 1MB

pingTimeout: 60000,

pingInterval: 25000

});

2. Connection Pooling

const pubClient = createClient({

socket: {

keepAlive: true,

noDelay: true

}

});

3. Room Optimization

// Instead of broadcasting to all

io.emit('message', data);

// Target specific rooms

io.to('room-123').emit('message', data);

Best Practices

- Use Redis Cluster for high availability

- Implement health checks for each server

- Monitor Redis connection status

- Set appropriate timeouts for connections

- Use rooms efficiently to reduce broadcast overhead

- Implement graceful shutdown procedures

- Log server-specific events for debugging

- Test failover scenarios regularly
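As one way to approach the health-check item, the sketch below separates the decision (healthy or not) from the HTTP plumbing. Everything here is an assumption for illustration: the `/healthz` path, the field names, and the `getState` callback, which you would wire to your own connection counter and to your Redis client's readiness (for example the `isReady` flag of a node-redis client).

```javascript
const http = require('http');

// Pure function: turn the current state into an HTTP status and a JSON body.
function buildHealthReport({ serverId, redisReady, connections, maxConnections }) {
  const overloaded = connections >= maxConnections;
  const healthy = redisReady && !overloaded;
  return {
    statusCode: healthy ? 200 : 503,
    body: {
      serverId,
      status: healthy ? 'ok' : 'unhealthy',
      redisReady,
      connections,
      overloaded
    }
  };
}

// Thin HTTP wrapper; getState is a hypothetical callback supplied by your app.
function createHealthServer(getState) {
  return http.createServer((req, res) => {
    if (req.url !== '/healthz') {
      res.writeHead(404);
      res.end();
      return;
    }
    const { statusCode, body } = buildHealthReport(getState());
    res.writeHead(statusCode, { 'Content-Type': 'application/json' });
    res.end(JSON.stringify(body));
  });
}

// A server that lost its Redis connection reports 503.
const report = buildHealthReport({
  serverId: 'server-1',
  redisReady: false,
  connections: 120,
  maxConnections: 5000
});
console.log(report.statusCode, report.body.status); // 503 unhealthy
```

A load balancer that probes this endpoint can stop routing new clients to an instance that has lost Redis, since that instance can no longer receive broadcasts from the rest of the cluster.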

Testing Your Scaled Setup

// test-scaling.js

const { io } = require('socket.io-client');

async function testScaling() {

const clients = [];

// Create 100 connections

for (let i = 0; i < 100; i++) {

const socket = io('http://localhost:80', {

auth: { token: 'test-token' }

});

socket.on('server-info', (data) => {

console.log(`Client ${i} connected to ${data.serverId}`);

});

clients.push(socket);

// Stagger connections

await new Promise(resolve => setTimeout(resolve, 10));

}

// Test message broadcasting

// The server handler reads data.message, so the payload must use that key

clients[0].emit('message', { message: 'Hello from client 0' });

// Wait for messages

await new Promise(resolve => setTimeout(resolve, 1000));

// Disconnect all

clients.forEach(s => s.disconnect());

}

testScaling();

What’s Next

In Part 6: Production-Grade Features, we’ll implement reconnection strategies, heartbeat mechanisms, message acknowledgments, and graceful shutdown!

Part 5 of the 8-part Real-Time WebSocket Architecture Series.