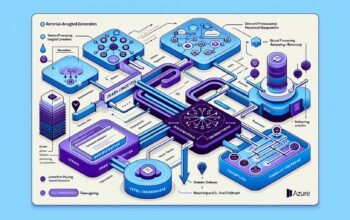

In this part, we’ll implement Retrieval-Augmented Generation (RAG) – a powerful technique that combines information retrieval with language generation to answer questions based on your specific documents.

Understanding RAG Architecture

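Instead of relying only on what the model learned during training, a RAG application answers from your own documents. Each request runs three steps: it retrieves the chunks most relevant to the question from your document store, inserts them into the prompt as context, and asks the language model to generate an answer grounded in that context. Because the answer is built from retrieved text, the application can also report which sources it used.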
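To make the three steps concrete, here is a dependency-free sketch of the same flow. It is only an illustration under simplifying assumptions: the word-overlap `retrieve` stands in for the vector search in `DocumentManager`, `generate` is any async function you supply in place of the Azure OpenAI model, and all function names here are hypothetical.

```javascript
// Toy RAG pipeline: retrieve -> augment -> generate.
// Word-overlap retrieval and a caller-supplied `generate` function are stand-ins
// for the real vector search and language model.

function tokenize(text) {
  return text.toLowerCase().match(/[a-z0-9]+/g) ?? [];
}

// 1. Retrieve: rank documents by how many question words they contain
function retrieve(question, documents, k = 2) {
  const queryWords = new Set(tokenize(question));
  return documents
    .map(doc => ({
      doc,
      score: tokenize(doc.content).filter(word => queryWords.has(word)).length,
    }))
    .filter(item => item.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, k)
    .map(item => item.doc);
}

// 2. Augment: put the retrieved text into the prompt as labelled context
function buildPrompt(question, docs) {
  const context = docs.map(d => `[${d.fileName}] ${d.content}`).join('\n\n');
  return `Use only this context to answer.\n\nContext:\n${context}\n\nQuestion: ${question}\nAnswer:`;
}

// 3. Generate: hand the augmented prompt to a language model (or fall back)
async function answer(question, documents, generate) {
  const docs = retrieve(question, documents);
  if (docs.length === 0) return "I don't have enough information to answer that.";
  return generate(buildPrompt(question, docs));
}

const docs = [
  { fileName: 'langchain.md', content: 'LangChain composes prompts, models, and retrievers into chains.' },
  { fileName: 'azure.md', content: 'Azure OpenAI hosts GPT models behind an API.' },
];

// Echo the augmented prompt instead of calling a real model
answer('What is LangChain?', docs, async (prompt) => prompt).then(console.log);
```

The real service replaces each stand-in with a production component, but the data flow is the same: question in, relevant text found, prompt assembled, answer generated.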

Building a RAG Chain

```javascript
// src/services/ragService.js
import { ChatPromptTemplate } from "@langchain/core/prompts";
import { RunnableSequence, RunnableLambda } from "@langchain/core/runnables";
import { StringOutputParser } from "@langchain/core/output_parsers";
import { formatDocumentsAsString } from "langchain/util/document";
import { DocumentManager } from './documentManager.js';
import { llm } from '../config/azure.js';

export class RAGService {
  constructor() {
    this.documentManager = new DocumentManager();
    this.llm = llm;
    this.setupRAGChain();
  }

  setupRAGChain() {
    const ragPrompt = ChatPromptTemplate.fromTemplate(`
You are a helpful assistant that answers questions based on the provided context.
Use only the information from the context to answer the question.
If the answer cannot be found in the context, clearly state that you don't have enough information.

Context: {context}

Question: {question}

Answer:
`);

    // Retrieval step: { question } -> array of { pageContent, metadata } documents.
    // Wrapped in RunnableLambda so it can be composed with .pipe().
    const retriever = RunnableLambda.from(async (input) => {
      const searchResults = await this.documentManager.searchDocuments(
        input.question,
        { maxResults: 5, includeMetadata: true }
      );
      return searchResults.results.map(result => ({
        pageContent: result.content,
        metadata: result.metadata,
      }));
    });

    // Generation step: { context, question } -> answer string
    this.answerChain = ragPrompt.pipe(this.llm).pipe(new StringOutputParser());

    // Full chain: { question } -> answer string (retrieves, then generates)
    this.ragChain = RunnableSequence.from([
      {
        context: retriever.pipe(formatDocumentsAsString),
        // Pass only the question string to the prompt, not the whole input object
        question: (input) => input.question,
      },
      this.answerChain,
    ]);
  }

  async askQuestion(question, options = {}) {
    const { includeSourceInfo = true, maxContextLength = 4000 } = options;

    try {
      const searchResults = await this.documentManager.searchDocuments(question, {
        maxResults: 5,
        includeMetadata: includeSourceInfo,
      });

      if (searchResults.totalResults === 0) {
        return {
          answer: "I don't have any relevant information to answer this question.",
          sources: [],
          confidence: 'low',
        };
      }

      // Reuse the results we already have instead of letting ragChain search again,
      // and cap the context at maxContextLength characters (a rough proxy for tokens)
      const context = searchResults.results
        .map(result => result.content)
        .join('\n\n')
        .slice(0, maxContextLength);

      const answer = await this.answerChain.invoke({ context, question });

      return {
        answer,
        sources: includeSourceInfo ? this.extractSources(searchResults.results) : undefined,
        confidence: this.assessConfidence(searchResults.results),
        documentsUsed: searchResults.results.length,
      };
    } catch (error) {
      console.error('RAG query error:', error);
      throw new Error('Failed to process question');
    }
  }

  extractSources(results) {
    return results.map(result => ({
      fileName: result.metadata?.fileName,
      relevanceScore: result.relevanceScore,
      chunkIndex: result.metadata?.chunkIndex,
    }));
  }

  // These thresholds assume relevanceScore is a distance (lower = more relevant).
  // If your searchDocuments returns similarity scores (higher = more relevant),
  // reverse the comparisons.
  assessConfidence(results) {
    if (results.length === 0) return 'low';
    const avgScore = results.reduce((sum, r) => sum + r.relevanceScore, 0) / results.length;
    if (avgScore <= 0.3) return 'high';
    if (avgScore <= 0.6) return 'medium';
    return 'low';
  }
}
```

Advanced RAG with Multi-Query
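A multi-query service runs several searches and then has to merge their result lists into one ranking. Raw relevance scores from different query variations are not always directly comparable, so a common alternative is reciprocal rank fusion (RRF), which uses only each chunk's position in each list: a chunk's fused score is the sum of 1 / (k + rank) over every list it appears in, with k = 60 as the conventional constant. The sketch below is an illustration of RRF, not part of LangChain; `reciprocalRankFusion` is a hypothetical helper and it assumes each result has a `content` field, like the `searchDocuments` results used in this series.

```javascript
// Reciprocal rank fusion (RRF): merge several best-first result lists into one.
function reciprocalRankFusion(resultLists, k = 60) {
  const fused = new Map(); // key -> { result, score }

  for (const results of resultLists) {
    results.forEach((result, index) => {
      // Same duplicate key as deduplicateByContent: first 100 characters, lowercased
      const key = result.content.substring(0, 100).toLowerCase();
      const rank = index + 1; // ranks start at 1
      const entry = fused.get(key) ?? { result, score: 0 };
      entry.score += 1 / (k + rank);
      fused.set(key, entry);
    });
  }

  // Highest fused score first; duplicates across lists are merged automatically
  return [...fused.values()]
    .sort((a, b) => b.score - a.score)
    .map(({ result, score }) => ({ ...result, fusedScore: score }));
}

// Three query variations, each returning a best-first list
const fused = reciprocalRankFusion([
  [{ content: 'A' }, { content: 'B' }],
  [{ content: 'A' }, { content: 'C' }],
  [{ content: 'B' }, { content: 'A' }],
]);
console.log(fused.map(r => r.content)); // [ 'A', 'B', 'C' ]
```

Chunk A wins because it ranks highly in all three lists, even though it is not first in every one. RRF rewards agreement across query variations, which is the point of asking the question several ways.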

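```javascript
// src/services/advancedRAGService.js
import { RAGService } from './ragService.js';

export class AdvancedRAGService extends RAGService {
  async multiQueryRAG(originalQuestion, options = {}) {
    const { numQueries = 3 } = options;

    try {
      // Generate multiple variations of the question (the original question is kept first)
      const queryVariations = await this.generateQueryVariations(originalQuestion, numQueries);

      // Search for every variation in parallel
      const searches = await Promise.all(
        queryVariations.map(query =>
          this.documentManager.searchDocuments(query, {
            maxResults: 3,
            includeMetadata: true,
          })
        )
      );
      const allResults = searches.flatMap(search => search.results);

      // Rank best-first (lower relevanceScore = closer match, the same assumption
      // assessConfidence makes), then deduplicate so the best copy of each chunk survives
      allResults.sort((a, b) => a.relevanceScore - b.relevanceScore);
      const topResults = this.deduplicateByContent(allResults).slice(0, 8);

      // Generate a comprehensive answer from the merged context
      const context = topResults
        .map(r => `Source: ${r.metadata?.fileName}\n${r.content}`)
        .join('\n\n');

      const answer = await this.generateComprehensiveAnswer(context, originalQuestion);

      return {
        answer,
        queryVariations,
        sourcesUsed: topResults.length,
        // Derive confidence from the retrieved results instead of hard-coding it
        confidence: this.assessConfidence(topResults),
      };
    } catch (error) {
      console.error('Multi-query RAG error:', error);
      throw new Error('Failed to process multi-query RAG');
    }
  }

  async generateQueryVariations(originalQuestion, numVariations) {
    const prompt =
      `Generate ${numVariations} different ways to ask the following question. ` +
      `Return one question per line with no numbering or extra text.\n\n` +
      `Question: "${originalQuestion}"`;

    // Chat models return a message object; the generated text is in .content
    const response = await this.llm.invoke(prompt);
    return [originalQuestion, ...this.parseVariations(response.content, numVariations)];
  }

  // Split the model's reply into one question per line, stripping markers like "1." or "-"
  parseVariations(text, maxVariations) {
    return String(text)
      .split('\n')
      .map(line => line.replace(/^\s*(?:\d+[.)]|[-*•])\s*/, '').trim())
      .filter(line => line.length > 0)
      .slice(0, maxVariations);
  }

  async generateComprehensiveAnswer(context, question) {
    const prompt =
      `Answer the question using only the context below. ` +
      `If the context does not contain the answer, say that you don't have enough information.\n\n` +
      `Context:\n${context}\n\nQuestion: ${question}\n\nAnswer:`;

    const response = await this.llm.invoke(prompt);
    return response.content;
  }

  deduplicateByContent(results) {
    const seen = new Set();
    return results.filter(result => {
      // Treat chunks with the same first 100 characters as duplicates
      const key = result.content.substring(0, 100).toLowerCase();
      if (seen.has(key)) return false;
      seen.add(key);
      return true;
    });
  }
}
```

RAG API Endpoints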
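The routes only check that `question` is present, and they forward the client's `options` object unchanged, so a client could, for example, request a large `numQueries` and trigger many LLM calls. A minimal sketch of stricter validation follows; `validateRagRequest` and its limits (2,000 characters per question, at most 5 query variations) are illustrative assumptions, not values from this series.

```javascript
// Hypothetical request validator for the /ask and /multi-query routes.
// The limits are illustrative; tune them for your own deployment.
const MAX_QUESTION_LENGTH = 2000;
const MAX_NUM_QUERIES = 5;

function validateRagRequest(body) {
  const { question, options } = body ?? {};
  const opts = options ?? {};

  if (typeof question !== 'string' || question.trim() === '') {
    return { error: 'Question must be a non-empty string' };
  }
  if (question.length > MAX_QUESTION_LENGTH) {
    return { error: `Question must be at most ${MAX_QUESTION_LENGTH} characters` };
  }

  // Copy only the options the services understand, clamping numeric values
  const safeOptions = {};
  if (typeof opts.includeSourceInfo === 'boolean') {
    safeOptions.includeSourceInfo = opts.includeSourceInfo;
  }
  if (Number.isInteger(opts.maxContextLength) && opts.maxContextLength > 0) {
    safeOptions.maxContextLength = opts.maxContextLength;
  }
  if (Number.isInteger(opts.numQueries)) {
    safeOptions.numQueries = Math.min(Math.max(opts.numQueries, 1), MAX_NUM_QUERIES);
  }

  return { question: question.trim(), options: safeOptions };
}

// Inside a route handler:
// const { error, question, options } = validateRagRequest(req.body);
// if (error) return res.status(400).json({ error });
```

Whitelisting options, rather than spreading the client's object into the service call, keeps unexpected fields from reaching the services.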

```javascript
// src/routes/rag.js
import express from 'express';
import { RAGService } from '../services/ragService.js';
import { AdvancedRAGService } from '../services/advancedRAGService.js';

const router = express.Router();
const ragService = new RAGService();
const advancedRAGService = new AdvancedRAGService();

router.post('/ask', async (req, res) => {
  try {
    const { question, options } = req.body;

    if (!question) {
      return res.status(400).json({ error: 'Question is required' });
    }

    const response = await ragService.askQuestion(question, options);

    res.json({
      success: true,
      ...response,
    });
  } catch (error) {
    res.status(500).json({ error: error.message });
  }
});

router.post('/multi-query', async (req, res) => {
  try {
    const { question, options } = req.body;

    if (!question) {
      return res.status(400).json({ error: 'Question is required' });
    }

    const response = await advancedRAGService.multiQueryRAG(question, options);

    res.json({
      success: true,
      ...response,
    });
  } catch (error) {
    res.status(500).json({ error: error.message });
  }
});

export default router;
```

Conversational RAG
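The methods below pass the recent conversation together with the new question to askQuestion, so the whole transcript also becomes the vector search query. A common refinement, often called question condensing, is to have the LLM first rewrite a follow-up such as "How do I install it?" into a standalone question and to search with that instead. The sketch only builds the rewrite prompt; `buildCondensePrompt` is a hypothetical helper, and you would send its output to the model (for example with `this.llm.invoke`) before calling askQuestion.

```javascript
// Hypothetical helper: build a prompt asking the LLM to rewrite a follow-up
// question as a standalone question that can be used for retrieval.
function buildCondensePrompt(question, history, maxMessages = 6) {
  // Nothing to condense: search with the question as-is
  if (history.length === 0) return null;

  const transcript = history
    .slice(-maxMessages) // same window size as buildContextualQuestion
    .map(message => `${message.type}: ${message.content}`)
    .join('\n');

  return [
    'Rewrite the follow-up question so it can be understood without the conversation.',
    'Resolve references such as "it" or "that" using the conversation.',
    'Return only the rewritten question.',
    '',
    `Conversation:\n${transcript}`,
    '',
    `Follow-up question: ${question}`,
    'Standalone question:',
  ].join('\n');
}

const history = [
  { type: 'human', content: 'What is LangChain?' },
  { type: 'assistant', content: 'LangChain is a framework for building LLM applications.' },
];
console.log(buildCondensePrompt('How do I install it?', history));
```

Searching with the rewritten question keeps the vector query short and focused on the current topic; the trade-off is one extra LLM call per turn.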

```javascript
// Add these methods to the RAGService class (src/services/ragService.js)
async conversationalRAG(question, conversationHistory = []) {
  try {
    // Combine the question with recent conversation context
    const contextualQuestion = this.buildContextualQuestion(question, conversationHistory);
    const askedAt = new Date().toISOString();

    // Perform RAG
    const response = await this.askQuestion(contextualQuestion);

    // Return an updated copy of the history instead of mutating the caller's array
    const updatedHistory = [
      ...conversationHistory,
      { type: 'human', content: question, timestamp: askedAt },
      {
        type: 'assistant',
        content: response.answer,
        timestamp: new Date().toISOString(),
        sources: response.sources,
      },
    ];

    return {
      ...response,
      conversationHistory: updatedHistory,
    };
  } catch (error) {
    console.error('Conversational RAG error:', error);
    throw new Error('Failed to process conversational question');
  }
}

buildContextualQuestion(question, history) {
  if (history.length === 0) return question;

  // Keep the last 6 messages (about three question/answer turns)
  const recentHistory = history.slice(-6);
  const contextualPrompt = recentHistory
    .map(h => `${h.type}: ${h.content}`)
    .join('\n');

  return `Previous conversation:\n${contextualPrompt}\n\nCurrent question: ${question}`;
}
```

Testing Your RAG System
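You can also call the endpoint from JavaScript. A minimal sketch using the fetch API (built into Node.js 18 and later), assuming the router is mounted at /api/rag on port 3000, as the curl command implies; `askRag` is a hypothetical helper, and its `fetchImpl` parameter exists only so the function can be exercised without a running server.

```javascript
// Hypothetical client helper for POST /api/rag/ask.
// fetchImpl defaults to the global fetch (Node.js 18+); pass a stub to test offline.
async function askRag(question, options = {}, {
  baseUrl = 'http://localhost:3000/api/rag',
  fetchImpl = globalThis.fetch,
} = {}) {
  const response = await fetchImpl(`${baseUrl}/ask`, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ question, options }),
  });

  // The routes reply with JSON in both the success and the error case
  const data = await response.json();
  if (!response.ok) {
    throw new Error(`RAG request failed (${response.status}): ${data.error}`);
  }
  return data; // e.g. { success, answer, sources, confidence, ... }
}

// Example (requires the server to be running):
// askRag('What is LangChain and how does it work?', { includeSourceInfo: true, maxContextLength: 3000 })
//   .then(result => console.log(result.answer, result.sources))
//   .catch(console.error);
```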

```bash
curl -X POST http://localhost:3000/api/rag/ask \
  -H "Content-Type: application/json" \
  -d '{
    "question": "What is LangChain and how does it work?",
    "options": {
      "includeSourceInfo": true,
      "maxContextLength": 3000
    }
  }'
```

RAG Best Practices

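- Context Quality: Retrieve only chunks that are relevant to the question, and format them clearly (for example, labelled with their source) before they reach the prompt.

- Source Attribution: Return the source file and chunk index with every answer so users can verify it.

- Confidence Assessment: Score each answer, for example from the retrieval relevance scores, so clients can flag weak answers.

- Fallback Handling: When retrieval finds nothing relevant, return an explicit "not enough information" answer instead of letting the model guess.

- Context Length: Cap how much retrieved text goes into the prompt so you stay within the model's token limit without dropping the material the answer needs.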
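The context-length and fallback practices can be combined in one step that runs before the prompt is built. A minimal sketch, assuming results shaped like the `searchDocuments` output in this series (`{ content, metadata: { fileName } }`) and already sorted best-first; counting characters is a rough stand-in for a real token count, and `packContext` is a hypothetical helper.

```javascript
// Hypothetical helper: pack whole chunks into the prompt context until a
// character budget is reached, labelling each chunk with its source file.
function packContext(results, maxChars = 4000) {
  const parts = [];
  const used = [];
  let length = 0;

  for (const result of results) {
    const part = `Source: ${result.metadata?.fileName ?? 'unknown'}\n${result.content}`;
    const separator = parts.length > 0 ? 2 : 0; // '\n\n' between chunks
    // Results are best-first, so stop at the first chunk that would exceed the budget
    if (length + separator + part.length > maxChars) break;
    parts.push(part);
    used.push(result);
    length += separator + part.length;
  }

  // Fallback: signal "no usable context" instead of sending an empty prompt
  return parts.length === 0 ? null : { context: parts.join('\n\n'), used };
}
```

Packing whole chunks avoids cutting a passage mid-sentence, which a plain string slice can do, and the returned `used` list gives you exactly the sources to report for attribution.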

In Part 7, we’ll add advanced features like memory, agents, and custom tools to make our application even more powerful!