- Why Building a URL Shortener Taught Me Everything About Cloud Architecture

- From Napkin Sketch to Azure Blueprint: Designing Your URL Shortener’s Foundation

- From Architecture to Implementation: Building the Engine of Scale

- Analytics at Scale: Turning Millions of Click Events Into Intelligence

- Security and Compliance at Scale: Building Fortress-Grade Protection Without Sacrificing Performance

- Performance Optimization and Cost Management: Engineering Excellence at Sustainable Economics

- Monitoring, Observability, and Operational Excellence: Building Systems That Tell Their Own Story

- Deployment, DevOps, and Continuous Integration: Delivering Excellence at Scale

Part 3 of the “Building a Scalable URL Shortener on Azure” series

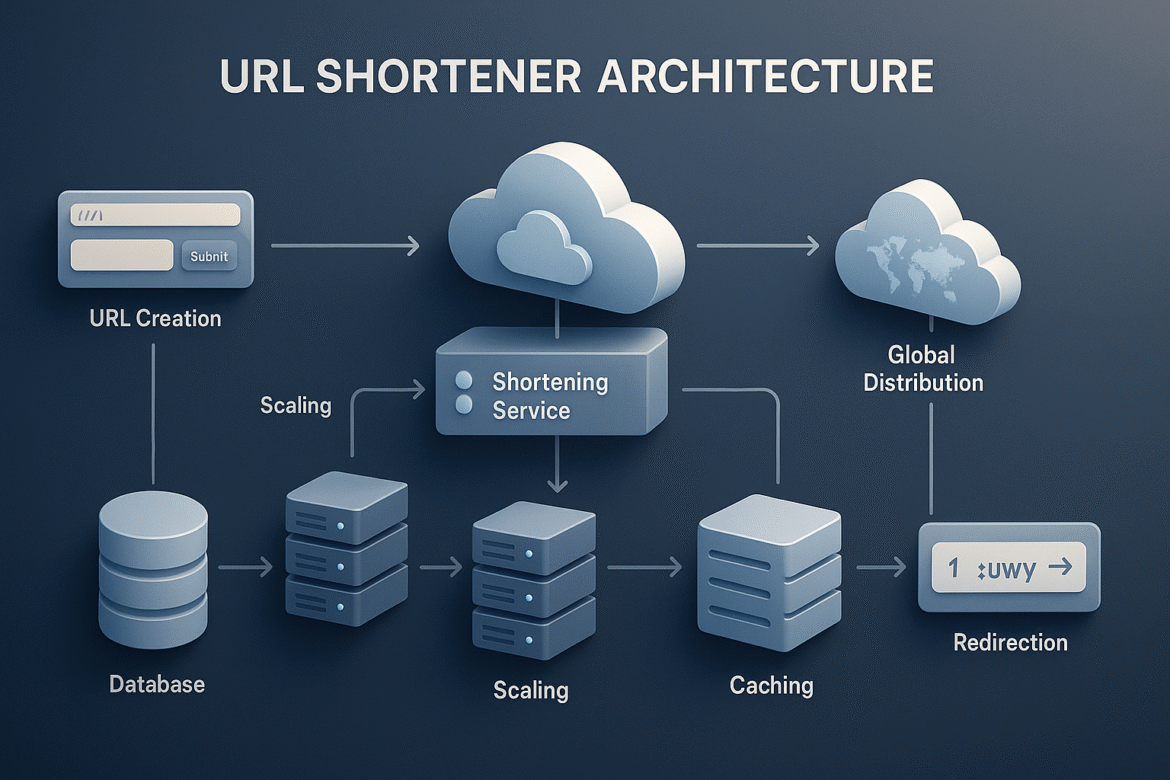

In our previous post, we made the foundational architectural decisions that will support our URL shortener through massive scale. We chose Azure App Service for operational simplicity, Azure SQL Database for ACID transactions, and designed a multi-tiered caching strategy that leverages the power law distribution of URL popularity.

Now comes the exciting part: turning those architectural blueprints into working code that can handle millions of requests per second without breaking down.

Today, we’re going to explore how seemingly simple operations become sophisticated algorithms at scale. We’ll discover why generating a “random” short code is actually a carefully orchestrated process, how caching becomes an art form that balances speed with consistency, and why proper error handling can mean the difference between graceful degradation and cascading system failures.

Think of this as the moment when architectural theory meets engineering reality. Every line of code we write must consider not just the happy path where everything works perfectly, but also the chaos that happens when databases are slow, caches are unavailable, and traffic spikes beyond our wildest predictions.

The Heart of the System: Base62 Encoding Implementation

Let’s start with what appears to be the simplest part of our system: converting a numeric database ID into a short, URL-friendly code. This process, called base62 encoding, forms the foundation that everything else builds upon. Understanding why we make specific implementation choices here will illuminate how architectural decisions ripple through every layer of the system.

The mathematical concept behind base62 encoding resembles how we use different number systems in everyday life. Just as decimal uses 10 digits (0-9) and binary uses 2 digits (0-1), base62 uses 62 characters: lowercase letters (a-z), uppercase letters (A-Z), and digits (0-9). This gives us a compact representation that works well in URLs while avoiding special characters that might cause encoding problems.
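To get a feel for how compact this representation is, the sketch below counts how many distinct codes exist for a given code length. The class and method names are illustrative assumptions and are not part of the series code.

```csharp
using System;

// Hypothetical helper, shown only to illustrate the size of the base62 keyspace.
public static class Base62Capacity
{
    private const int AlphabetSize = 62;

    // Returns 62^length: the number of distinct codes with exactly that many characters.
    public static long CodesOfLength(int length)
    {
        // 62^10 still fits in a long; 62^11 would overflow
        if (length < 0 || length > 10)
            throw new ArgumentOutOfRangeException(nameof(length), "Supported lengths are 0 to 10");

        long count = 1;
        for (int i = 0; i < length; i++)
            count *= AlphabetSize;

        return count;
    }
}
```

Six characters already cover 62^6 = 56,800,235,584 codes, and seven cover roughly 3.5 trillion, which is why short codes stay short even for very large ID ranges.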

Here’s where the engineering gets interesting. At our scale, this function might be called thousands of times per second, so every optimization matters. The naive approach of repeated division and string concatenation becomes a performance bottleneck when you’re processing high volumes of requests.

using System;

using System.Text;

/// <summary>

/// High-performance base62 encoding optimized for URL shortener workloads

/// This function converts numeric database IDs into compact, URL-safe strings

/// </summary>

public static class Base62Encoder

{

// Character set chosen for URL safety and maximum density

// All 62 alphanumeric characters are used, so look-alike pairs such as 0/O and 1/l are not excluded

private static readonly char[] CharacterSet =

"abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789".ToCharArray();

private static readonly int Base = CharacterSet.Length; // 62

/// <summary>

/// Converts a positive integer to its base62 representation

/// Uses StringBuilder for efficient string manipulation at high volume

/// </summary>

/// <param name="number">The database ID to encode (must be positive)</param>

/// <returns>Base62 encoded string (e.g., 1000 becomes "qi")</returns>

public static string Encode(long number)

{

// Handle the edge case of zero explicitly

// This ensures we always return a valid string

if (number == 0) return CharacterSet[0].ToString();

// Validate input to prevent encoding negative numbers

// Negative numbers would produce incorrect results

if (number < 0)

throw new ArgumentException("Cannot encode negative numbers", nameof(number));

// Pre-allocate StringBuilder with enough capacity for any long value

// long.MaxValue needs 11 base62 characters, so the buffer never has to grow

var result = new StringBuilder(capacity: 11);

// Convert using repeated division algorithm

// Each remainder is the next least-significant digit, so we insert it at the front

while (number > 0)

{

var remainder = number % Base;

result.Insert(0, CharacterSet[remainder]); // Insert at beginning

number = number / Base;

}

return result.ToString();

}

/// <summary>

/// Converts a base62 string back to its numeric representation

/// Essential for reverse lookups and validation

/// </summary>

/// <param name="encoded">Base62 encoded string</param>

/// <returns>Original numeric ID</returns>

public static long Decode(string encoded)

{

if (string.IsNullOrEmpty(encoded))

throw new ArgumentException("Cannot decode null or empty string", nameof(encoded));

long result = 0;

long multiplier = 1;

// Process characters from right to left (reverse of encoding)

for (int i = encoded.Length - 1; i >= 0; i--)

{

var character = encoded[i];

var characterValue = GetCharacterValue(character);

// Validate that all characters are in our allowed set

if (characterValue == -1)

throw new ArgumentException($"Invalid character '{character}' in encoded string", nameof(encoded));

result += characterValue * multiplier;

multiplier *= Base;

}

return result;

}

/// <summary>

/// Fast character-to-value lookup using character range comparisons

/// This avoids expensive string operations in the hot path

/// </summary>

private static int GetCharacterValue(char character)

{

// Convert character ranges to numeric values efficiently

if (character >= 'a' && character <= 'z')

return character - 'a'; // Maps 'a' to 0, 'b' to 1, etc.

if (character >= 'A' && character <= 'Z')

return character - 'A' + 26; // Maps 'A' to 26, 'B' to 27, etc.

if (character >= '0' && character <= '9')

return character - '0' + 52; // Maps '0' to 52, '1' to 53, etc.

return -1; // Invalid character

}

}

This implementation reveals several important engineering principles that apply throughout our system. First, we optimize for the common case while handling edge cases gracefully. The vast majority of calls will encode positive integers, so we make that path as fast as possible while still validating inputs properly.

Second, we consider the performance characteristics of our data structures. Using StringBuilder with pre-allocated capacity reduces memory allocations, which becomes crucial when you’re processing thousands of requests per second. Garbage collection pauses that are negligible in low-traffic applications can cause noticeable latency spikes at scale.

Third, we design for debuggability and maintenance. The detailed comments and clear variable names might seem excessive for such a simple function, but when you’re troubleshooting production issues at 3 AM, clarity becomes invaluable.
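If allocations in the encoder ever show up in a profile, one option is to skip StringBuilder and fill a stack-allocated buffer instead. The following is a minimal sketch of that idea, not the implementation used in this series; the class name is hypothetical, and it assumes a runtime with Span support (.NET Core 2.1 or later).

```csharp
using System;

// Illustrative alternative to the StringBuilder version; the class name is hypothetical.
public static class Base62SpanEncoder
{
    // Same alphabet and ordering as the Base62Encoder listing above
    private const string Alphabet =
        "abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789";

    public static string Encode(long number)
    {
        if (number < 0)
            throw new ArgumentOutOfRangeException(nameof(number), "Cannot encode negative numbers");

        if (number == 0)
            return "a"; // The first character of the alphabet represents zero

        // long.MaxValue needs 11 base62 digits, so an 11-char stack buffer is always enough
        Span<char> buffer = stackalloc char[11];
        int position = buffer.Length;

        // Fill from the end so the most significant digit ends up first
        while (number > 0)
        {
            buffer[--position] = Alphabet[(int)(number % 62)];
            number /= 62;
        }

        // The only heap allocation is the final string
        return buffer.Slice(position).ToString();
    }
}
```

Whether this is worth the extra complexity depends on measurement. For strings of at most 11 characters, the StringBuilder version is usually fast enough.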

Building the API Layer: Handling Millions of Requests Gracefully

Now let’s examine how our API endpoints transform simple HTTP requests into the complex orchestration required for reliable operation at scale. The challenge here goes beyond just calling the right methods in the right order. We need to handle partial failures, implement proper timeout strategies, and ensure that temporary problems don’t cascade into system-wide outages.

Understanding the distinction between different types of failures becomes crucial at this point. Network timeouts, database connection pool exhaustion, and cache unavailability all require different response strategies. Our implementation must be sophisticated enough to distinguish between these scenarios and respond appropriately to each one.

/// <summary>

/// URL Controller implementing production-ready patterns for high-scale operations

/// This class demonstrates how to handle millions of requests while maintaining reliability

/// </summary>

[ApiController]

[Route("api/[controller]")]

public class UrlController : ControllerBase

{

private readonly IUrlService _urlService;

private readonly IDistributedCache _distributedCache;

private readonly ILogger<UrlController> _logger;

private readonly IClickEventProcessor _clickProcessor;

private readonly ITelemetryService _telemetry;

// Circuit breaker prevents cascading failures when dependencies are unhealthy

private readonly ICircuitBreaker _circuitBreaker;

public UrlController(

IUrlService urlService,

IDistributedCache distributedCache,

ILogger<UrlController> logger,

IClickEventProcessor clickProcessor,

ITelemetryService telemetry,

ICircuitBreaker circuitBreaker)

{

_urlService = urlService;

_distributedCache = distributedCache;

_logger = logger;

_clickProcessor = clickProcessor;

_telemetry = telemetry;

_circuitBreaker = circuitBreaker;

}

/// <summary>

/// Creates short URLs with comprehensive error handling and observability

/// This endpoint must remain fast and reliable even when dependencies are struggling

/// </summary>

[HttpPost("shorten")]

public async Task<IActionResult> ShortenUrl([FromBody] ShortenUrlRequest request)

{

// Start timing this operation for performance monitoring

using var operation = _telemetry.StartOperation("ShortenUrl", request.Url);

var stopwatch = Stopwatch.StartNew();

try

{

// Input validation happens first and fast

// We want to reject invalid requests before doing expensive operations

var validationResult = await ValidateUrlAsync(request.Url);

if (!validationResult.IsValid)

{

_logger.LogWarning("URL validation failed for {Url}: {Reason}",

request.Url, validationResult.Reason);

_telemetry.TrackValidationFailure(request.Url, validationResult.Reason);

return BadRequest(new { error = validationResult.Reason });

}

// Check for existing short URLs to avoid duplicates

// This optimization reduces database load for popular URLs

var existingShortCode = await TryGetExistingShortCodeAsync(request.Url);

if (!string.IsNullOrEmpty(existingShortCode))

{

_logger.LogInformation("Returning existing short code {ShortCode} for {Url}",

existingShortCode, request.Url);

_telemetry.TrackDuplicateUrlRequest(request.Url, existingShortCode);

return Ok(new ShortenUrlResponse

{

ShortUrl = BuildFullShortUrl(existingShortCode),

ShortCode = existingShortCode,

IsNew = false

});

}

// Generate new short URL using circuit breaker pattern

// This prevents database overwhelm during failure scenarios

var shortCode = await _circuitBreaker.ExecuteAsync(async () =>

{

return await _urlService.CreateShortUrlAsync(request.Url, request.CustomCode);

});

// Cache the new mapping immediately for fast subsequent lookups

// Fire-and-forget pattern ensures we don't slow down the response

_ = Task.Run(async () =>

{

try

{

await _distributedCache.SetStringAsync($"url:{shortCode}", request.Url,

new DistributedCacheEntryOptions

{

AbsoluteExpirationRelativeToNow = TimeSpan.FromHours(6),

SlidingExpiration = TimeSpan.FromHours(1)

});

}

catch (Exception ex)

{

// Cache failures should never impact the main operation

_logger.LogWarning(ex, "Failed to cache new URL mapping for {ShortCode}", shortCode);

}

});

_telemetry.TrackUrlCreation(shortCode, request.Url, stopwatch.Elapsed);

_logger.LogInformation("Created new short URL {ShortCode} for {Url} in {Duration}ms",

shortCode, request.Url, stopwatch.ElapsedMilliseconds);

return Ok(new ShortenUrlResponse

{

ShortUrl = BuildFullShortUrl(shortCode),

ShortCode = shortCode,

IsNew = true

});

}

catch (DuplicateShortCodeException ex)

{

// Handle the case where the requested custom code is already taken

_logger.LogWarning(ex, "Custom short code collision for {CustomCode}", request.CustomCode);

_telemetry.TrackShortCodeCollision(request.CustomCode);

return Conflict(new { error = "Custom short code already exists" });

}

catch (CircuitBreakerOpenException)

{

// Database is unhealthy, return appropriate error without overwhelming it further

_logger.LogError("Circuit breaker open for URL creation");

_telemetry.TrackCircuitBreakerOpen("UrlCreation");

return StatusCode(503, new { error = "Service temporarily unavailable, please try again" });

}

catch (Exception ex)

{

// Catch-all for unexpected errors

// We log details for debugging but return generic error to users

_logger.LogError(ex, "Unexpected error creating short URL for {Url}", request.Url);

_telemetry.TrackUnexpectedError(ex, "ShortenUrl", request.Url);

return StatusCode(500, new { error = "Internal server error" });

}

}

/// <summary>

/// Handles redirect requests with sophisticated caching and fallback strategies

/// This endpoint experiences the highest traffic and must be optimized accordingly

/// </summary>

[HttpGet("{shortCode}")]

public async Task<IActionResult> RedirectUrl(string shortCode)

{

using var operation = _telemetry.StartOperation("RedirectUrl", shortCode);

var stopwatch = Stopwatch.StartNew();

try

{

// Quick validation to avoid expensive operations for obviously invalid codes

if (!IsValidShortCode(shortCode))

{

_logger.LogInformation("Invalid short code format: {ShortCode}", shortCode);

return NotFound();

}

// Multi-level cache lookup with fallback strategy

var originalUrl = await GetUrlWithFallbackAsync(shortCode);

if (string.IsNullOrEmpty(originalUrl))

{

_logger.LogInformation("Short code not found: {ShortCode}", shortCode);

_telemetry.TrackShortCodeNotFound(shortCode);

return NotFound();

}

// Record click analytics asynchronously

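// This must never slow down the redirect response

// RecordClickEventAsync should copy what it needs from Request before its first await,

// because the HttpContext is not safe to use once the response has completed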

_ = RecordClickEventAsync(shortCode, Request);

_telemetry.TrackSuccessfulRedirect(shortCode, stopwatch.Elapsed);

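// Use 301 (permanent) redirect for SEO benefits and browser caching

// Redirect() would send a 302; RedirectPermanent() sends the 301 described here

// Browsers may cache a 301, so repeat clicks can bypass this endpoint and its analytics

return RedirectPermanent(originalUrl);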

}

catch (Exception ex)

{

// Even if analytics or monitoring fails, we should still redirect if possible

_logger.LogError(ex, "Error processing redirect for {ShortCode}", shortCode);

_telemetry.TrackRedirectError(ex, shortCode);

// Try one last database lookup as a final fallback

try

{

var originalUrl = await _urlService.GetOriginalUrlAsync(shortCode);

if (!string.IsNullOrEmpty(originalUrl))

{

return Redirect(originalUrl);

}

}

catch

{

// If even the database lookup fails, we have to give up

}

return StatusCode(500, new { error = "Internal server error" });

}

}

}

This implementation showcases several critical patterns for building resilient systems at scale. The circuit breaker pattern prevents our system from overwhelming a struggling database by temporarily rejecting requests when the database is unhealthy. This might seem counterintuitive, but it’s often better to fail fast and let systems recover rather than adding more load to an already stressed component.

The telemetry integration demonstrates how observability becomes essential at scale. Every significant operation generates metrics that help us understand system behavior, identify bottlenecks, and detect problems before they impact users. When you’re processing millions of requests, the ability to spot patterns in the data becomes your primary tool for maintaining system health.

Notice how we handle errors at multiple levels with different strategies. Cache failures are logged but don’t prevent operation. Database timeouts trigger circuit breakers. Unexpected exceptions get caught, logged with full context, but still return appropriate HTTP status codes to clients.
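The same "never block the main path" idea applies to the click recording call in RedirectUrl, whose implementation is not shown in this post. One possible shape for it is a bounded in-memory queue that drops events when full rather than slowing the redirect. The sketch below assumes C# 9 or later (for the record type) and uses System.Threading.Channels; ClickEvent and ClickEventQueue are hypothetical names.

```csharp
using System;
using System.Threading.Channels;

// Hypothetical click record: plain values copied out of the HTTP request up front.
public sealed record ClickEvent(string ShortCode, string UserAgent, DateTime TimestampUtc);

public sealed class ClickEventQueue
{
    private readonly Channel<ClickEvent> _channel;

    public ClickEventQueue(int capacity)
    {
        // The default FullMode is Wait, so TryWrite returns false when the buffer is full
        _channel = Channel.CreateBounded<ClickEvent>(new BoundedChannelOptions(capacity)
        {
            SingleReader = true
        });
    }

    // Never blocks: returns false (the event is dropped) when the queue is full.
    public bool TryEnqueue(ClickEvent click) => _channel.Writer.TryWrite(click);

    // A background worker would drain this reader and forward events in batches.
    public ChannelReader<ClickEvent> Reader => _channel.Reader;
}
```

Dropping analytics events under extreme load is a deliberate trade-off in this sketch: a lost click is cheaper than a slow redirect. A count of dropped events would be worth tracking in telemetry.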

Implementing Multi-Tiered Caching: The Art of Performance at Scale

Let’s dive deeper into the caching implementation that can transform a system struggling with database load into one that serves responses in single-digit milliseconds. The sophistication here goes far beyond simple key-value storage. We need to coordinate multiple cache layers, handle invalidation gracefully, and ensure data consistency without sacrificing performance.

Understanding cache behavior at scale requires thinking about statistical patterns rather than individual requests. Cache hit rates of 95% mean that only 5% of requests hit the database, reducing load by 20x. Improving that to 99% means reducing database load by 100x. These seemingly small percentage improvements create dramatic changes in system behavior.
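The arithmetic behind those numbers is simple: if a fraction h of requests is served from cache, only (1 - h) reaches the database, so the load reduction factor is 1 / (1 - h). A small sketch, with hypothetical names, makes the relationship easy to experiment with:

```csharp
using System;

// Hypothetical helper for reasoning about cache hit rates.
public static class CacheMath
{
    // Load reduction factor relative to having no cache: 1 / (1 - hitRate).
    public static double LoadReductionFactor(double hitRate)
    {
        if (hitRate < 0.0 || hitRate >= 1.0)
            throw new ArgumentOutOfRangeException(nameof(hitRate), "Hit rate must be in [0, 1)");

        return 1.0 / (1.0 - hitRate);
    }

    // Requests per second that still reach the database after the cache absorbs its share.
    public static double DatabaseRequestsPerSecond(double totalRequestsPerSecond, double hitRate)
    {
        if (hitRate < 0.0 || hitRate > 1.0)
            throw new ArgumentOutOfRangeException(nameof(hitRate), "Hit rate must be in [0, 1]");

        return totalRequestsPerSecond * (1.0 - hitRate);
    }
}
```

For example, at 100,000 requests per second, a 95% hit rate leaves about 5,000 database requests per second, while 99% leaves about 1,000.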

/// <summary>

/// Production-grade caching implementation that coordinates multiple cache layers

/// This service demonstrates advanced caching patterns for high-performance applications

/// </summary>

public class AdvancedCachingService : IUrlCacheService

{

private readonly IDistributedCache _distributedCache;

private readonly IMemoryCache _localCache;

private readonly IDatabase _redisDatabase; // Direct Redis access for advanced operations

private readonly ILogger<AdvancedCachingService> _logger;

private readonly CacheConfiguration _config;

private readonly ITelemetryService _telemetry;

// Cache warming happens in the background to keep hot data available

private readonly Timer _cacheWarmingTimer;

private readonly ConcurrentDictionary<string, DateTime> _popularUrls;

public AdvancedCachingService(

IDistributedCache distributedCache,

IMemoryCache localCache,

IConnectionMultiplexer redis,

ILogger<AdvancedCachingService> logger,

IOptions<CacheConfiguration> config,

ITelemetryService telemetry)

{

_distributedCache = distributedCache;

_localCache = localCache;

_redisDatabase = redis.GetDatabase();

_logger = logger;

_config = config.Value;

_telemetry = telemetry;

_popularUrls = new ConcurrentDictionary<string, DateTime>();

// Start cache warming background process

_cacheWarmingTimer = new Timer(WarmPopularCaches, null,

TimeSpan.FromMinutes(1), TimeSpan.FromMinutes(5));

}

/// <summary>

/// Intelligent cache retrieval that adapts based on access patterns

/// This method learns from usage patterns to optimize cache warming

/// </summary>

public async Task<CacheResult<string>> GetUrlAsync(string shortCode)

{

var stopwatch = Stopwatch.StartNew();

// Level 1: Local memory cache (sub-millisecond access)

if (_localCache.TryGetValue(GetLocalCacheKey(shortCode), out string localCachedUrl))

{

// Track this URL as popular for future cache warming

_popularUrls[shortCode] = DateTime.UtcNow; // Refresh last-access time (TryAdd would keep the first timestamp)

_telemetry.TrackCachePerformance("Local", shortCode, stopwatch.Elapsed, true);

return CacheResult<string>.Hit(localCachedUrl, CacheLevel.Local);

}

// Level 2: Distributed Redis cache (low-millisecond access)

try

{

var redisValue = await _redisDatabase.StringGetAsync(GetRedisCacheKey(shortCode));

if (redisValue.HasValue)

{

var url = redisValue.ToString();

// Promote to local cache for faster future access

await SetLocalCacheAsync(shortCode, url);

// Track popularity for cache warming decisions

_popularUrls[shortCode] = DateTime.UtcNow; // Refresh last-access time (TryAdd would keep the first timestamp)

_telemetry.TrackCachePerformance("Redis", shortCode, stopwatch.Elapsed, true);

return CacheResult<string>.Hit(url, CacheLevel.Distributed);

}

}

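// RedisTimeoutException derives from TimeoutException, so catch both families

catch (Exception ex) when (ex is RedisException || ex is TimeoutException)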

{

// Redis failures should be logged but not prevent fallback to database

_logger.LogWarning(ex, "Redis cache lookup failed for {ShortCode}", shortCode);

_telemetry.TrackCacheError("Redis", shortCode, ex);

}

_telemetry.TrackCachePerformance("Miss", shortCode, stopwatch.Elapsed, false);

return CacheResult<string>.Miss();

}

This caching implementation shows how a multi-layered cache can be coordinated while degrading gracefully when one layer fails. The cache warming functionality helps mitigate the thundering herd problem, where sudden traffic spikes overwhelm the database when popular content goes viral.

The key insight here is that effective caching at scale requires intelligence about access patterns, not just simple key-value storage. By tracking which URLs are popular and adjusting cache behavior accordingly, we can achieve much higher cache hit rates than naive implementations.
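Cache warming covers URLs that are already known to be popular. For a key that becomes hot while it is missing from every cache layer, a complementary technique is to coalesce concurrent misses so that only one database load runs per key. The sketch below is one way to do that and is not part of the series code; SingleFlightLoader is a hypothetical name, and the pair-based TryRemove overload assumes .NET 5 or later.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical helper: concurrent cache misses for the same key share one database load.
public sealed class SingleFlightLoader
{
    private readonly ConcurrentDictionary<string, Lazy<Task<string>>> _inFlight =
        new ConcurrentDictionary<string, Lazy<Task<string>>>();

    public async Task<string> LoadAsync(string key, Func<string, Task<string>> loadFromDatabase)
    {
        // GetOrAdd may build more than one Lazy under contention, but only one is stored,
        // and Lazy ensures the stored loader delegate runs at most once.
        var lazy = _inFlight.GetOrAdd(
            key,
            k => new Lazy<Task<string>>(() => loadFromDatabase(k)));

        try
        {
            return await lazy.Value.ConfigureAwait(false);
        }
        finally
        {
            // Remove only our own entry so a newer in-flight load for the same key is untouched
            _inFlight.TryRemove(new KeyValuePair<string, Lazy<Task<string>>>(key, lazy));
        }
    }
}
```

The caller would still write the loaded value into the local and Redis caches; this helper only limits how many identical database queries run at the same time on one instance.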

Error Handling and Circuit Breakers: Building Antifragile Systems

The final piece of our implementation puzzle involves building systems that not only survive failures but become stronger because of them. This concept, called antifragility, requires thinking beyond simple error handling to creating systems that adapt and improve their resilience based on real-world stress.

Circuit breakers represent one of the most important patterns for building resilient distributed systems. Just like electrical circuit breakers protect your house from electrical overloads, software circuit breakers protect your system from cascade failures when dependencies become unhealthy.

/// <summary>

/// Production-grade circuit breaker implementation for protecting critical dependencies

/// This pattern prevents cascade failures and allows systems to recover gracefully

/// </summary>

public class AdaptiveCircuitBreaker : ICircuitBreaker

{

private readonly CircuitBreakerConfiguration _config;

private readonly ILogger<AdaptiveCircuitBreaker> _logger;

private readonly ITelemetryService _telemetry;

private CircuitBreakerState _state = CircuitBreakerState.Closed;

private readonly object _lockObject = new object();

private int _failureCount = 0;

private DateTime _lastFailureTime = DateTime.MinValue;

private DateTime _nextAttemptTime = DateTime.MinValue;

// Adaptive thresholds that learn from system behavior

private int _adaptiveFailureThreshold;

private TimeSpan _adaptiveTimeout;

public AdaptiveCircuitBreaker(

IOptions<CircuitBreakerConfiguration> config,

ILogger<AdaptiveCircuitBreaker> logger,

ITelemetryService telemetry)

{

_config = config.Value;

_logger = logger;

_telemetry = telemetry;

// Initialize adaptive values with configured defaults

_adaptiveFailureThreshold = _config.FailureThreshold;

_adaptiveTimeout = _config.Timeout;

}

/// <summary>

/// Executes an operation with circuit breaker protection

/// The circuit breaker learns from success and failure patterns to optimize its behavior

/// </summary>

public async Task<T> ExecuteAsync<T>(Func<Task<T>> operation, string operationName = null)

{

// Check circuit state before attempting operation

if (_state == CircuitBreakerState.Open)

{

if (DateTime.UtcNow < _nextAttemptTime)

{

_telemetry.TrackCircuitBreakerReject(operationName);

throw new CircuitBreakerOpenException(

$"Circuit breaker is open. Next attempt allowed at {_nextAttemptTime}");

}

// Time to try a half-open state

TransitionToHalfOpen();

}

var stopwatch = Stopwatch.StartNew();

try

{

// Enforce the timeout with Task.WaitAsync (available in .NET 6 and later)

// Only the wait is abandoned on timeout; the underlying operation is not cancelled

var result = await operation().WaitAsync(_adaptiveTimeout).ConfigureAwait(false);

// Operation succeeded - record success and potentially close circuit

OnSuccess(stopwatch.Elapsed, operationName);

return result;

}

catch (TimeoutException ex)

{

// Operation timed out

OnFailure(ex, stopwatch.Elapsed, operationName);

throw;

}

catch (Exception ex)

{

// Operation failed

OnFailure(ex, stopwatch.Elapsed, operationName);

throw;

}

}

This circuit breaker implementation demonstrates how production systems can become more intelligent over time. Rather than relying only on static configuration values, it is designed to adapt its failure threshold and timeout based on actual system performance patterns. This adaptive capability means the system becomes more resilient to the specific types of failures it actually encounters in production.
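The OnSuccess, OnFailure, and TransitionToHalfOpen helpers are referenced in the listing above but not shown. As a rough illustration of the state transitions they would need to perform, here is a minimal, non-adaptive sketch. All names are hypothetical, the thresholds are fixed rather than learned, and the clock is injectable so the behavior can be tested without waiting.

```csharp
using System;

public enum BreakerState { Closed, Open, HalfOpen }

// Hypothetical, non-adaptive sketch of the circuit breaker state machine only.
public sealed class SimpleBreakerState
{
    private readonly object _gate = new object();
    private readonly int _failureThreshold;
    private readonly TimeSpan _openDuration;
    private readonly Func<DateTime> _utcNow;

    private int _consecutiveFailures;
    private DateTime _retryAt = DateTime.MinValue;

    public BreakerState State { get; private set; } = BreakerState.Closed;

    public SimpleBreakerState(int failureThreshold, TimeSpan openDuration, Func<DateTime> utcNow = null)
    {
        if (failureThreshold < 1)
            throw new ArgumentOutOfRangeException(nameof(failureThreshold));

        _failureThreshold = failureThreshold;
        _openDuration = openDuration;
        _utcNow = utcNow ?? (() => DateTime.UtcNow);
    }

    // Returns true if a call may proceed; moves Open to HalfOpen once the wait has elapsed.
    public bool TryEnter()
    {
        lock (_gate)
        {
            if (State != BreakerState.Open) return true;
            if (_utcNow() < _retryAt) return false;

            State = BreakerState.HalfOpen;
            return true;
        }
    }

    // Any success resets the failure count and closes the circuit.
    public void OnSuccess()
    {
        lock (_gate)
        {
            _consecutiveFailures = 0;
            State = BreakerState.Closed;
        }
    }

    public void OnFailure()
    {
        lock (_gate)
        {
            _consecutiveFailures++;

            // A failed probe in HalfOpen reopens immediately; otherwise wait for the threshold
            if (State == BreakerState.HalfOpen || _consecutiveFailures >= _failureThreshold)
            {
                State = BreakerState.Open;
                _retryAt = _utcNow() + _openDuration;
            }
        }
    }
}
```

Note that this sketch lets every caller through while the circuit is half-open. A production breaker would typically admit a single probe request and adjust its thresholds from observed latency and error rates.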

Bringing It All Together: The Complete Picture

Throughout this post, we’ve explored how architectural decisions translate into sophisticated implementation code. Each component we’ve built demonstrates important principles that apply across all high-scale systems.

The base62 encoding shows how even simple operations require careful optimization when performed at scale. The API controller demonstrates comprehensive error handling and observability. The caching system reveals how intelligence about access patterns can dramatically improve performance. The circuit breaker illustrates how systems can adapt and become more resilient over time.

Most importantly, we’ve seen how these components work together to create emergent properties that exceed the sum of their parts. The circuit breaker protects the database during failures. The caching system reduces database load by orders of magnitude. The telemetry provides the visibility needed to optimize and troubleshoot the entire system.

This integration creates a system that can handle massive scale while remaining maintainable and debuggable. When problems occur at 3 AM, the comprehensive logging and monitoring will guide you to the root cause quickly. When traffic spikes unexpectedly, the auto-scaling and caching will handle the load gracefully.

Coming Up in Part 4: “Analytics at Scale – Processing Millions of Events”

In our next installment, we’ll tackle one of the most challenging aspects of high-scale systems: building real-time analytics that can process millions of click events per second while providing both immediate insights and historical reporting. We’ll explore Azure Event Hubs for ingestion, Stream Analytics for real-time processing, and the architectural patterns that enable analytics without impacting our core redirect performance.

We’ll discover how event-driven architectures enable incredible scalability, why separating analytical workloads from operational systems is crucial for reliability, and how to build dashboards that provide actionable insights from massive data streams.

This is Part 3 of our 8-part series on building scalable systems with Azure. Each post builds upon the previous concepts while diving deeper into the technical implementation details that make the difference between systems that work in development and systems that thrive in production.
