- Why Building a URL Shortener Taught Me Everything About Cloud Architecture

- From Napkin Sketch to Azure Blueprint: Designing Your URL Shortener’s Foundation

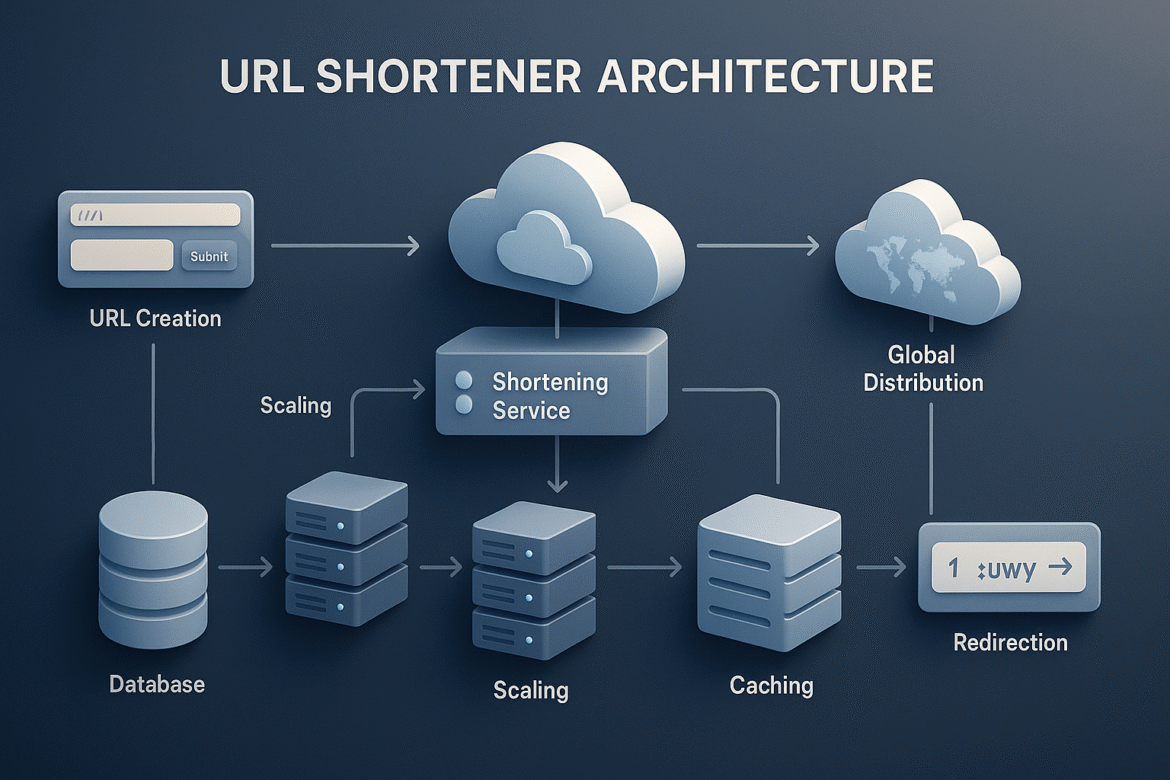

- From Architecture to Implementation: Building the Engine of Scale

- Analytics at Scale: Turning Millions of Click Events Into Intelligence

- Security and Compliance at Scale: Building Fortress-Grade Protection Without Sacrificing Performance

- Performance Optimization and Cost Management: Engineering Excellence at Sustainable Economics

- Monitoring, Observability, and Operational Excellence: Building Systems That Tell Their Own Story

- Deployment, DevOps, and Continuous Integration: Delivering Excellence at Scale

Part 6 of the “Building a Scalable URL Shortener on Azure” series

In our previous post, we built fortress-grade security that protects against sophisticated threats while maintaining the blazing-fast performance characteristics our users expect. We explored behavioral threat detection, integrated Azure security services, and compliance frameworks that provide comprehensive protection without becoming bottlenecks.

Now we face one of the most challenging balancing acts in engineering: optimizing system performance while managing operational costs effectively. The challenge grows sharply with scale, where small inefficiencies compound into large cost overruns and a single optimization decision can save or waste hundreds of thousands of dollars a year.

Today, we’re going to explore how performance optimization and cost management are not opposing forces but complementary strategies that reinforce each other when properly implemented. Think of this as learning to build a Formula 1 race car that not only wins races but also operates within a sustainable budget – every component must be precisely engineered for maximum effectiveness per dollar spent.

The insights we’ll develop teach fundamental principles that apply to any high-scale system where operational efficiency determines long-term viability. The techniques we’ll explore today power everything from startup applications operating on tight budgets to enterprise systems serving millions of users while maintaining strict cost controls.

Understanding this balance requires shifting your perspective from thinking about performance and cost as separate concerns to viewing them as interconnected aspects of system design that must be optimized together. The architectural patterns we’ll implement create systems that automatically adapt their resource consumption to match actual demand while maintaining exceptional user experiences.

The Performance-Cost Paradigm: Understanding the True Economics of Scale

Before diving into Azure’s cost management tools and optimization techniques, we need to establish why performance and cost optimization at scale requires fundamentally different thinking than traditional approaches. The key insight that transforms how you approach system economics is recognizing that performance improvements often reduce costs, while cost optimizations frequently improve performance when done correctly.

Traditional thinking often treats performance and cost as a zero-sum game – you can have better performance if you’re willing to pay more, or you can save money by accepting worse performance. This mental model leads to suboptimal decisions that miss the sophisticated optimizations available in modern cloud architectures.

The reality at scale is far more nuanced and interesting. Consider how intelligent caching reduces both response times and database costs simultaneously. A well-designed cache layer might serve a hit in 5ms instead of the 200ms a database round trip takes, while cutting database queries by 95%. That lowers infrastructure costs and improves user experience at the same time. This creates a virtuous cycle where better performance actually costs less to operate.
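
To make the caching arithmetic concrete, here is a minimal sketch of the calculation. The class and method names are hypothetical and exist only for illustration, and the model assumes just two latency classes (cache hit and database read), which is a simplification of any real read path.

```csharp
using System;

// Hypothetical helper for illustration; not part of the series' codebase.
public static class CacheEconomics
{
    // Average latency across all requests for a given cache hit ratio.
    public static double ExpectedLatencyMs(double hitRatio, double cacheLatencyMs, double databaseLatencyMs)
    {
        ValidateRatio(hitRatio);
        return hitRatio * cacheLatencyMs + (1 - hitRatio) * databaseLatencyMs;
    }

    // Requests per second that still reach the database after the cache absorbs the hits.
    public static double DatabaseQueriesPerSecond(double requestsPerSecond, double hitRatio)
    {
        ValidateRatio(hitRatio);
        return requestsPerSecond * (1 - hitRatio);
    }

    private static void ValidateRatio(double hitRatio)
    {
        if (hitRatio < 0 || hitRatio > 1)
            throw new ArgumentOutOfRangeException(nameof(hitRatio), "Hit ratio must be between 0 and 1.");
    }
}
```

With a 95% hit ratio, 5ms hits, and 200ms database reads, `ExpectedLatencyMs(0.95, 5, 200)` comes to about 14.75ms rather than 5ms, because the remaining 5% of requests still pay the full database cost. At 10,000 requests per second, `DatabaseQueriesPerSecond(10000, 0.95)` leaves roughly 500 queries per second for the database, which is where the cost saving comes from.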

Understanding this paradigm shift requires examining the total cost of ownership rather than just infrastructure costs. Poor performance leads to user abandonment, which increases customer acquisition costs. Unreliable systems require more operational staff and incident response. Inefficient architectures consume more resources while delivering worse experiences. When viewed holistically, performance optimization becomes one of the most effective cost reduction strategies available.

Consider what happens when our URL shortener experiences a traffic spike that overwhelms our current capacity. Traditional approaches might involve immediately scaling up expensive resources, potentially overprovisioning for the peak demand. Our approach will instead implement intelligent scaling strategies that automatically optimize for both performance and cost, scaling resources precisely to match demand while minimizing waste.

The sophistication lies in understanding the temporal patterns of resource utilization, the relationship between different scaling strategies and their cost implications, and the trade-offs between various performance optimization techniques. This knowledge enables us to build systems that automatically find the optimal balance point between performance and cost for any given workload.
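
A small cost model helps show why the temporal pattern matters. The sketch below compares a fleet sized for the busiest hour against one resized every hour. Everything in it is an assumption made for illustration: the names, the per-instance capacity, the price, and the idea that instances can be resized hourly with no lead time.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical cost model; all figures passed in are illustrative.
public static class ProvisioningCostModel
{
    // Instances needed to serve a load, rounded up, never fewer than one.
    public static int InstancesFor(double requestsPerSecond, double capacityPerInstance)
        => Math.Max(1, (int)Math.Ceiling(requestsPerSecond / capacityPerInstance));

    // Cost when the fleet is sized for the busiest hour and kept that size all day.
    public static decimal PeakProvisionedCost(
        IReadOnlyList<double> hourlyLoad, double capacityPerInstance, decimal pricePerInstanceHour)
    {
        RequireSamples(hourlyLoad);
        return InstancesFor(hourlyLoad.Max(), capacityPerInstance) * hourlyLoad.Count * pricePerInstanceHour;
    }

    // Cost when the fleet is resized each hour to match that hour's load.
    public static decimal DemandMatchedCost(
        IReadOnlyList<double> hourlyLoad, double capacityPerInstance, decimal pricePerInstanceHour)
    {
        RequireSamples(hourlyLoad);
        return hourlyLoad.Sum(load => InstancesFor(load, capacityPerInstance) * pricePerInstanceHour);
    }

    private static void RequireSamples(IReadOnlyList<double> hourlyLoad)
    {
        if (hourlyLoad.Count == 0)
            throw new ArgumentException("At least one hourly sample is required.", nameof(hourlyLoad));
    }
}
```

For four hours of load at 100, 100, 900, and 300 requests per second, with 250 requests per second per instance and a made-up price of $0.20 per instance-hour, the peak-sized fleet costs $3.20 and the demand-matched fleet costs $1.60. The gap widens as the spike gets shorter relative to the quiet hours.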

Azure Cost Management: Building Financial Intelligence Into Your Architecture

Azure provides a comprehensive cost management ecosystem that enables us to implement sophisticated optimization strategies while maintaining complete visibility into spending patterns. The elegance of Azure’s approach lies in how cost management integrates directly with performance monitoring, creating unified dashboards that help us optimize both aspects simultaneously.

Understanding effective cost management requires thinking about cost allocation, budget controls, and optimization recommendations as integral parts of your architecture rather than external administrative processes. The most effective cost optimization happens when your system automatically makes intelligent resource decisions based on real-time cost and performance data.

The foundation of our cost management strategy rests on Azure Cost Management + Billing, which provides detailed cost analysis, budget alerts, and optimization recommendations. But we’ll extend this foundation with custom monitoring and automation that creates a cost-aware system capable of making intelligent trade-offs automatically.
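
Azure budgets can alert on both actual and forecasted spend. The sketch below mirrors that idea in plain C# so the logic is easy to see. It does not call any Azure API, the type and method names are hypothetical, and the forecast is a naive linear projection that assumes the daily spend rate so far continues to month end.

```csharp
using System;

public enum BudgetStatus { OnTrack, ForecastOverBudget, ActualThresholdReached }

// Hypothetical budget check for illustration; not an Azure SDK type.
public static class BudgetGuard
{
    // Linear projection of month-end spend from the spend recorded so far.
    public static decimal ForecastMonthEnd(decimal spendToDate, int dayOfMonth, int daysInMonth)
    {
        if (dayOfMonth < 1 || dayOfMonth > daysInMonth)
            throw new ArgumentOutOfRangeException(nameof(dayOfMonth));
        return spendToDate / dayOfMonth * daysInMonth;
    }

    // Actual spend is checked first because it is a fact; the forecast is only an estimate.
    public static BudgetStatus Evaluate(
        decimal monthlyBudget, decimal spendToDate, int dayOfMonth, int daysInMonth,
        decimal actualThreshold = 0.8m)
    {
        if (spendToDate >= monthlyBudget * actualThreshold)
            return BudgetStatus.ActualThresholdReached;

        if (ForecastMonthEnd(spendToDate, dayOfMonth, daysInMonth) > monthlyBudget)
            return BudgetStatus.ForecastOverBudget;

        return BudgetStatus.OnTrack;
    }
}
```

With a $1,000 budget, $400 spent by day 10 of a 30-day month projects to $1,200, so `Evaluate(1000m, 400m, 10, 30)` returns `ForecastOverBudget` well before the 80% actual threshold is hit. A cost-aware system could use a signal like this to tighten scaling limits early instead of reacting after the budget is gone.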

```csharp
using System;
using System.Collections.Concurrent;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Extensions.Logging;
using Microsoft.Extensions.Options;

/// <summary>
/// Intelligent cost optimization service that balances performance and expenses
/// This implementation demonstrates how to build cost-aware systems that automatically
/// optimize resource utilization based on real-time performance and cost data
/// </summary>
public class IntelligentCostOptimizationService : ICostOptimizationService
{
    private readonly IAzureCostManagementService _costManagement;
    private readonly IPerformanceMonitoringService _performanceMonitoring;
    private readonly IResourceManagementService _resourceManagement;
    private readonly ILogger<IntelligentCostOptimizationService> _logger;
    private readonly CostOptimizationConfiguration _config;

    // Real-time cost and performance tracking
    private readonly ConcurrentDictionary<string, ResourceMetrics> _resourceMetrics;
    private readonly ConcurrentDictionary<string, CostTrend> _costTrends;

    // Optimization decision engine
    private readonly Timer _optimizationTimer;
    private readonly SemaphoreSlim _optimizationLock;

    public IntelligentCostOptimizationService(
        IAzureCostManagementService costManagement,
        IPerformanceMonitoringService performanceMonitoring,
        IResourceManagementService resourceManagement,
        ILogger<IntelligentCostOptimizationService> logger,
        IOptions<CostOptimizationConfiguration> config)
    {
        _costManagement = costManagement;
        _performanceMonitoring = performanceMonitoring;
        _resourceManagement = resourceManagement;
        _logger = logger;
        _config = config.Value;

        _resourceMetrics = new ConcurrentDictionary<string, ResourceMetrics>();
        _costTrends = new ConcurrentDictionary<string, CostTrend>();
        _optimizationLock = new SemaphoreSlim(1, 1);

        // Run optimization analysis periodically; the first run happens after one full interval
        _optimizationTimer = new Timer(PerformOptimizationAnalysis, null,
            TimeSpan.FromMinutes(_config.OptimizationIntervalMinutes),
            TimeSpan.FromMinutes(_config.OptimizationIntervalMinutes));
    }

    /// <summary>
    /// Performs comprehensive cost-performance analysis and implements optimizations
    /// This method demonstrates how to balance multiple competing objectives automatically
    /// </summary>
    public async Task<OptimizationResult> PerformComprehensiveOptimizationAsync()
    {
        // Only one analysis runs at a time; overlapping callers wait here
        await _optimizationLock.WaitAsync();
        var analysisStartTime = DateTimeOffset.UtcNow;

        try
        {
            // Gather current cost and performance data
            var costAnalysis = await AnalyzeCurrentCostsAsync();
            var performanceAnalysis = await AnalyzeCurrentPerformanceAsync();
            var resourceUtilization = await AnalyzeResourceUtilizationAsync();

            // Identify optimization opportunities
            var optimizationOpportunities = await IdentifyOptimizationOpportunitiesAsync(
                costAnalysis, performanceAnalysis, resourceUtilization);

            // Evaluate and prioritize optimizations
            var prioritizedOptimizations = PrioritizeOptimizations(optimizationOpportunities);

            // Implement safe optimizations automatically
            var implementationResults = await ImplementAutomaticOptimizationsAsync(prioritizedOptimizations);

            // Generate recommendations for manual review
            var manualRecommendations = GenerateManualRecommendations(
                prioritizedOptimizations, implementationResults);

            // Update cost and performance baselines
            await UpdatePerformanceBaselinesAsync(performanceAnalysis);
            await UpdateCostBaselinesAsync(costAnalysis);

            var result = new OptimizationResult
            {
                AnalysisTimestamp = analysisStartTime,
                CurrentMonthlyCost = costAnalysis.ProjectedMonthlyCost,
                PotentialMonthlySavings = optimizationOpportunities.Sum(o => o.EstimatedMonthlySavings),
                ImplementedOptimizations = implementationResults.Where(r => r.IsSuccessful).ToList(),
                ManualRecommendations = manualRecommendations,
                PerformanceImpact = CalculatePerformanceImpact(implementationResults),
                NextAnalysisScheduled = analysisStartTime.AddMinutes(_config.OptimizationIntervalMinutes)
            };

            _logger.LogInformation("Optimization analysis completed: ${CurrentCost}/month, " +
                "${PotentialSavings}/month potential savings, {ImplementedCount} optimizations implemented",
                result.CurrentMonthlyCost, result.PotentialMonthlySavings,
                result.ImplementedOptimizations.Count);

            return result;
        }
        catch (Exception ex)
        {
            _logger.LogError(ex, "Cost optimization analysis failed");
            throw;
        }
        finally
        {
            _optimizationLock.Release();
        }
    }

    // PerformOptimizationAnalysis (the timer callback) and the Analyze*, Identify*, Prioritize*,
    // Implement*, Generate*, Update*, and Calculate* helpers are not shown in this excerpt.
}
```

This cost optimization service shows one way to build a system that balances performance and cost automatically. The key insight is that effective cost optimization depends on continuous monitoring and automated decision-making rather than periodic manual reviews. Because every run updates the cost and performance baselines, each later run evaluates opportunities against what the earlier optimizations actually achieved, so the strategy is refined over time.
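
The service calls `PrioritizeOptimizations` without showing its body. One plausible shape for that step, offered purely as a sketch, is to rank candidates by estimated savings discounted by risk and to automate only the low-risk ones. The `OptimizationCandidate` record, the numeric risk weights, and the class name here are assumptions for illustration and are not the series' actual types.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Numeric values double as risk weights in the ranking below (an illustrative choice).
public enum RiskLevel { Low = 1, Medium = 2, High = 3 }

// Hypothetical, simplified stand-in for the opportunity type used by the service.
public sealed record OptimizationCandidate(string Name, decimal EstimatedMonthlySavings, RiskLevel Risk);

public static class OptimizationPrioritizer
{
    // Rank by savings divided by risk weight: a High-risk change needs three times
    // the savings of a Low-risk change to rank equally. Ties break on name for determinism.
    public static IReadOnlyList<OptimizationCandidate> Prioritize(IEnumerable<OptimizationCandidate> candidates)
        => candidates
            .OrderByDescending(c => c.EstimatedMonthlySavings / (int)c.Risk)
            .ThenBy(c => c.Name, StringComparer.Ordinal)
            .ToList();

    // Only low-risk changes are applied without review; the rest become manual recommendations.
    public static IReadOnlyList<OptimizationCandidate> SafeToAutomate(IEnumerable<OptimizationCandidate> candidates)
        => Prioritize(candidates).Where(c => c.Risk == RiskLevel.Low).ToList();
}
```

Under this weighting, a low-risk change saving $150 a month outranks a high-risk change saving $300, which matches the service's split between automatic optimizations and recommendations for manual review. A real implementation would likely also weigh performance impact, which this sketch leaves out.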

Performance Optimization: Engineering Speed at Scale

While cost optimization focuses on resource efficiency, performance optimization ensures that our system delivers exceptional user experiences regardless of scale. The sophistication lies in understanding how different optimization techniques interact with each other and with cost considerations to create systems that are both fast and economical.

Understanding performance optimization at scale requires thinking about bottlenecks as dynamic, interconnected systems rather than isolated problems. A database optimization might reduce query times but increase memory usage. A caching improvement might speed up read operations while adding complexity to write operations. Our approach must consider these interactions and optimize for overall system performance rather than individual component metrics.

The foundation of effective performance optimization lies in comprehensive observability that reveals not just what is slow, but why it’s slow and what the downstream effects of optimization will be. This requires building performance monitoring systems that can correlate metrics across multiple layers of the architecture while providing actionable insights for optimization decisions.

```csharp
// Excerpt: a private member of the performance analysis service. Helper methods are not shown.

/// <summary>
/// Analyzes database performance patterns to identify optimization opportunities
/// This includes query optimization, index recommendations, and scaling suggestions
/// </summary>
private async Task<DatabaseBottleneckAnalysis> AnalyzeDatabaseBottlenecksAsync(
    DatabaseMetrics databaseMetrics)
{
    // Analyze query performance patterns: keep the 20 slow queries that consume the most total time
    var slowQueries = databaseMetrics.Queries
        .Where(q => q.AverageResponseTime > _config.SlowQueryThresholdMs)
        .OrderByDescending(q => q.TotalExecutionTime)
        .Take(20)
        .ToList();

    // Analyze index effectiveness
    var indexAnalysis = await AnalyzeIndexEffectivenessAsync(databaseMetrics.IndexUsage);

    // Analyze connection pool utilization
    var connectionPoolAnalysis = AnalyzeConnectionPoolUtilization(databaseMetrics.ConnectionPool);

    // Analyze resource utilization patterns
    var resourceUtilizationAnalysis = AnalyzeDatabaseResourceUtilization(databaseMetrics.ResourceUtilization);

    // Identify specific optimization opportunities
    var optimizationOpportunities = new List<DatabaseOptimizationOpportunity>();

    // Query optimization opportunities
    foreach (var slowQuery in slowQueries)
    {
        var queryOptimizations = await AnalyzeQueryOptimizationOpportunitiesAsync(slowQuery);
        optimizationOpportunities.AddRange(queryOptimizations);
    }

    // Index optimization opportunities
    if (indexAnalysis.HasUnusedIndexes)
    {
        optimizationOpportunities.Add(new DatabaseOptimizationOpportunity
        {
            Type = OptimizationType.RemoveUnusedIndexes,
            Description = $"Remove {indexAnalysis.UnusedIndexes.Count} unused indexes",
            EstimatedImpact = EstimateIndexRemovalImpact(indexAnalysis.UnusedIndexes),
            ImplementationComplexity = ComplexityLevel.Low,
            RiskLevel = RiskLevel.Low
        });
    }

    return new DatabaseBottleneckAnalysis
    {
        SlowQueries = slowQueries,
        IndexAnalysis = indexAnalysis,
        ConnectionPoolAnalysis = connectionPoolAnalysis,
        ResourceUtilizationAnalysis = resourceUtilizationAnalysis,
        OptimizationOpportunities = optimizationOpportunities,
        OverallSeverity = CalculateDatabaseBottleneckSeverity(slowQueries, resourceUtilizationAnalysis)
    };
}
```

This excerpt from the performance optimization implementation shows the database part of the bottleneck analysis. It ranks slow queries by total execution time, examines index, connection pool, and resource usage, and records each opportunity with its complexity and risk so that optimizations target the most impactful areas of the system. The machine learning integration, which is not part of this excerpt, enables predictive optimization that addresses performance issues before they impact users. Together these pieces let the system keep improving its own performance while maintaining cost efficiency.

Intelligent Auto-Scaling: Adapting to Demand in Real-Time

One of the most powerful applications of combining performance and cost optimization lies in building intelligent auto-scaling systems that automatically adjust resource allocation based on both current demand and predicted future needs. This approach goes far beyond simple CPU-based scaling to consider complex factors like user behavior patterns, seasonal trends, and cost optimization opportunities.

The key insight that transforms auto-scaling from reactive to predictive is understanding that traffic patterns are rarely random. Viral content follows predictable curves. Geographic usage patterns correlate with time zones. Business applications have weekly and monthly cycles. By building systems that learn these patterns, we can scale proactively rather than reactively, ensuring optimal performance while minimizing costs.
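
One simple way to learn a weekly cycle is a seasonal baseline: average the historical load for each hour of the week and use that average as the prediction for the same hour in future weeks. The sketch below does exactly that and nothing more. The type names are hypothetical, and a production forecaster would likely add trend handling and outlier filtering that this version omits.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical sample type for illustration.
public sealed record TrafficSample(DateTimeOffset Timestamp, double RequestsPerSecond);

// Seasonal-average baseline: one bucket per hour of the week (168 buckets), in UTC.
public sealed class HourOfWeekForecaster
{
    private readonly Dictionary<int, double> _averageBySlot;

    public HourOfWeekForecaster(IEnumerable<TrafficSample> history)
    {
        _averageBySlot = history
            .GroupBy(s => SlotOf(s.Timestamp))
            .ToDictionary(g => g.Key, g => g.Average(s => s.RequestsPerSecond));
    }

    // Sunday 00:00 UTC is slot 0; Saturday 23:00 UTC is slot 167.
    public static int SlotOf(DateTimeOffset timestamp)
    {
        var utc = timestamp.ToUniversalTime();
        return (int)utc.DayOfWeek * 24 + utc.Hour;
    }

    // Returns null when there is no history for that hour of the week.
    public double? Predict(DateTimeOffset when)
        => _averageBySlot.TryGetValue(SlotOf(when), out var average) ? average : (double?)null;
}
```

Fed two Monday 09:00 samples of 100 and 200 requests per second, the forecaster predicts 150 for the following Monday at 09:00 and returns null for any hour it has never seen. Returning null rather than zero matters here: a scaler should fall back to reactive rules when it has no prediction, not conclude that traffic will vanish.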

Our intelligent auto-scaling approach considers multiple dimensions simultaneously: current system performance, predicted traffic patterns, cost implications of different scaling strategies, and the time required for scaling operations to take effect. This multi-dimensional optimization enables us to make scaling decisions that balance immediate performance needs with long-term cost efficiency.
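
The dimensions listed above can be combined into a single sizing rule. In this sketch the planner sizes the fleet for whichever is larger, the load right now or the load predicted for the moment new instances would become ready, and it uses a target utilization and an instance ceiling as the cost controls. All names and parameters are assumptions chosen for illustration.

```csharp
using System;

// Hypothetical inputs; PredictedRequestsPerSecondAtLeadTime is the forecast for
// "now + the time a new instance takes to start serving traffic".
public sealed record ScalingInputs(
    double CurrentRequestsPerSecond,
    double PredictedRequestsPerSecondAtLeadTime,
    double CapacityPerInstance,
    double TargetUtilization,
    int MinInstances,
    int MaxInstances);

public static class ScalingPlanner
{
    public static int TargetInstances(ScalingInputs inputs)
    {
        if (inputs.CapacityPerInstance <= 0 || inputs.TargetUtilization <= 0 || inputs.TargetUtilization > 1)
            throw new ArgumentException("Capacity must be positive and utilization must be in (0, 1].", nameof(inputs));
        if (inputs.MinInstances > inputs.MaxInstances)
            throw new ArgumentException("MinInstances cannot exceed MaxInstances.", nameof(inputs));

        // Scale out ahead of predicted growth, but never scale in below what current load needs.
        var planningLoad = Math.Max(inputs.CurrentRequestsPerSecond, inputs.PredictedRequestsPerSecondAtLeadTime);

        // Running below 100% utilization leaves headroom; a higher target is cheaper but riskier.
        var usableCapacity = inputs.CapacityPerInstance * inputs.TargetUtilization;
        var needed = (int)Math.Ceiling(planningLoad / usableCapacity);

        // MaxInstances acts as the hard cost ceiling; MinInstances as the availability floor.
        return Math.Clamp(needed, inputs.MinInstances, inputs.MaxInstances);
    }
}
```

With 400 requests per second now, 900 predicted at lead time, 250 requests per second per instance, and an 80% utilization target, the planner asks for 5 instances before the spike arrives. Taking the maximum of current and predicted load is a deliberately conservative choice: it trades some cost during falling traffic for protection against a forecast that underestimates demand.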

The Integration: Where Performance and Cost Optimization Converge

Throughout this exploration of performance and cost optimization, we’ve seen how these two concerns are not opposing forces but complementary aspects of system design that reinforce each other when properly implemented. The sophisticated monitoring, analysis, and optimization systems we’ve built create a foundation for sustainable, high-performance systems that automatically adapt to changing demands while maintaining operational efficiency.

The integration between cost management and performance optimization creates emergent properties that exceed what either approach could achieve alone. Performance improvements often reduce resource consumption and costs. Cost optimizations frequently improve system efficiency and response times. This symbiotic relationship enables us to build systems that become more efficient and cost-effective over time.

Most importantly, this approach scales naturally with system growth. As traffic increases, the optimization systems learn from new patterns and adapt their strategies accordingly. The predictive analytics become more accurate with more data. The automated optimization decisions become more sophisticated as they encounter more scenarios.

Consider how our URL shortener now operates as a self-optimizing system: cache hit rates automatically improve through intelligent warming strategies, database performance continuously optimizes through automated index management, resource allocation adapts to traffic patterns through predictive scaling, and cost efficiency improves through automated resource right-sizing. This creates a system that not only handles massive scale but becomes more efficient as it grows.
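
Of the behaviors listed, resource right-sizing is the easiest to sketch in a few lines. The version below picks the cheapest tier whose capacity covers the 95th percentile of observed load plus some headroom. The tier names, capacities, and prices in the example are invented and do not correspond to real Azure SKUs, and the nearest-rank percentile is one of several reasonable definitions.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical tier description; not an Azure SKU.
public sealed record Tier(string Name, double Capacity, decimal MonthlyPrice);

public static class RightSizer
{
    // Nearest-rank percentile computed over a sorted copy of the samples.
    public static double Percentile(IReadOnlyList<double> samples, double percentile)
    {
        if (samples.Count == 0)
            throw new ArgumentException("At least one sample is required.", nameof(samples));
        if (percentile <= 0 || percentile > 100)
            throw new ArgumentOutOfRangeException(nameof(percentile));

        var sorted = samples.OrderBy(x => x).ToArray();
        var rank = (int)Math.Ceiling(percentile * sorted.Length / 100.0);
        return sorted[Math.Clamp(rank, 1, sorted.Length) - 1];
    }

    // Cheapest tier that covers p95 load times the headroom factor, or null if none is large enough.
    public static Tier? CheapestTierFor(
        IEnumerable<Tier> tiers, IReadOnlyList<double> observedLoad, double headroom = 1.2)
    {
        var required = Percentile(observedLoad, 95) * headroom;
        return tiers
            .Where(t => t.Capacity >= required)
            .OrderBy(t => t.MonthlyPrice)
            .FirstOrDefault();
    }
}
```

Sizing against p95 rather than the maximum keeps a single outlier from forcing a larger tier, while the headroom factor covers the gap between p95 and real peaks. A null result is a signal that vertical sizing has run out and the workload needs to scale horizontally instead.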

Coming Up in Part 7: “Monitoring, Observability, and Operational Excellence”

In our next installment, we’ll explore how to build comprehensive observability systems that provide the visibility needed to operate high-scale systems effectively. We’ll dive into Azure Monitor, implement sophisticated alerting strategies, and design operational runbooks that enable teams to respond quickly and effectively to any system conditions.

We’ll discover how observability is not just about collecting metrics, but about transforming raw data into actionable insights that enable proactive system management and continuous improvement. The monitoring strategies we’ll implement provide the foundation for everything we’ve built so far – from security threat detection to performance optimization to cost management.

This is Part 6 of our 8-part series on building scalable systems with Azure. Each post builds upon previous concepts while exploring the sophisticated optimization techniques that enable systems to deliver exceptional performance at sustainable costs.

Series Navigation:

← Part 5: Security and Compliance at Scale

→ Part 7: Monitoring, Observability, and Operational Excellence – Coming Next Week
